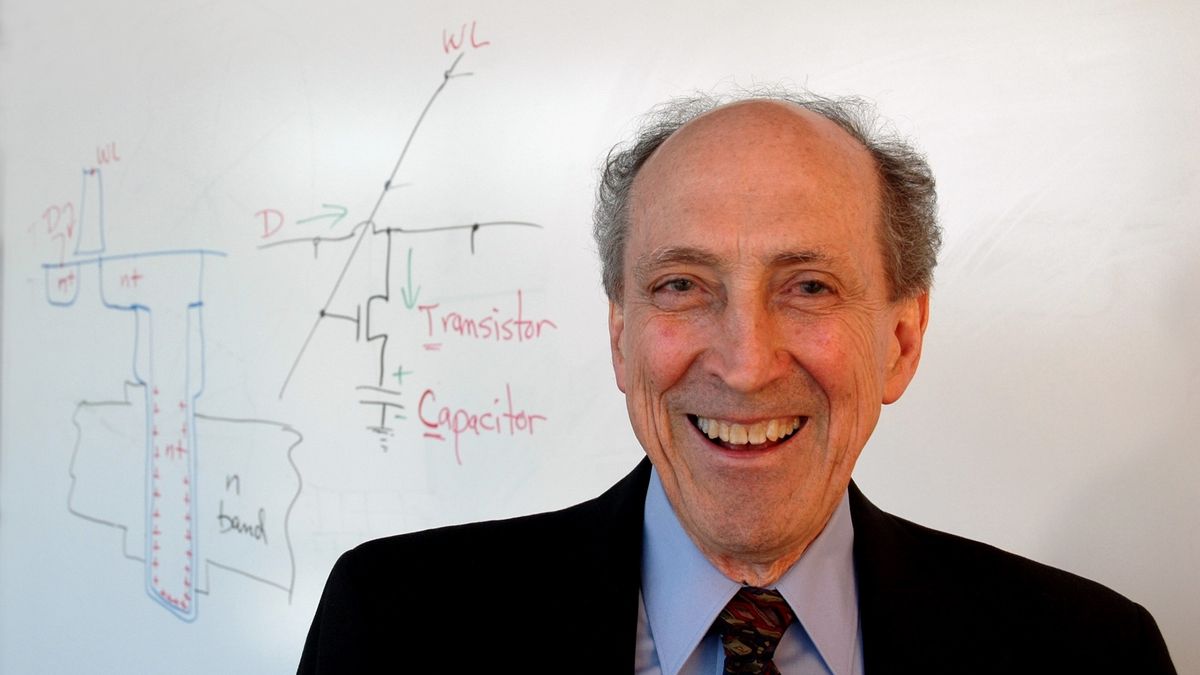

"Robert Dennard, father of DRAM, is deceased -- also known for his foundational Dennard scaling theory."

This obituary is worth noting for futurists because Dennard scaling is indeed a foundational theory, closely related to Moore's Law.

Dennard scaling, in short, is:

When you cut the linear dimensions of a digital circuit in half, you reduce the area to 1/4 of its original size (the area of a square is the side squared), which lets you pack 4x as many transistors into that area. Each transistor's capacitance is cut in half, its voltage is cut in half, and its current is cut in half, so its power consumption drops to 1/4. Its transition time is also cut in half, which lets you double your "clock speed". And because you now have 4x as many transistors each using 1/4 of the power, the power used by a given area of chip stays the same.
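To check the arithmetic, here is a minimal Python sketch of idealized (constant-field) Dennard scaling. It assumes the usual first-order textbook relations: gate capacitance proportional to plate area over oxide thickness (so it scales by 1/k, not 1/k²), voltage and current each scaling by 1/k, switching delay of roughly C·V/I, and dynamic power P = C·V²·f. The function name `dennard_factors` is hypothetical; `fractions.Fraction` just keeps the ratios exact.

```python
from fractions import Fraction


def dennard_factors(k):
    """Multipliers for one generation of idealized Dennard scaling.

    k is how much every linear dimension shrinks (k = 2 halves them).
    Assumed first-order relations: C = eps * A / t_ox, V ~ 1/k, I ~ 1/k,
    delay ~ C * V / I, and dynamic power P = C * V^2 * f.
    """
    k = Fraction(k)
    length = 1 / k                          # every linear dimension shrinks by k
    area = length ** 2                      # area of a square: side squared
    transistors_per_area = 1 / area         # smaller transistors pack more densely
    oxide_thickness = length                # gate oxide thins along with everything else
    capacitance = area / oxide_thickness    # parallel plate: area over thickness -> 1/k
    voltage = 1 / k                         # constant field: voltage shrinks with length
    current = 1 / k
    delay = capacitance * voltage / current  # time to swing C through V with current I
    clock_speed = 1 / delay
    power_per_transistor = capacitance * voltage ** 2 * clock_speed  # P = C V^2 f
    return {
        "area": area,
        "transistors_per_area": transistors_per_area,
        "capacitance": capacitance,
        "voltage": voltage,
        "current": current,
        "delay": delay,
        "clock_speed": clock_speed,
        "power_per_transistor": power_per_transistor,
        "power_density": power_per_transistor * transistors_per_area,
    }


for name, factor in dennard_factors(2).items():
    print(f"{name:>22}: {factor}")
```

With `k = 2`, the sketch gives area 1/4, 4x the transistors per area, capacitance, voltage, current and delay each halved, clock speed doubled, power per transistor 1/4, and power density exactly 1: the chip gets denser and faster without running any hotter per unit area.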

This might make you wonder: why, in the mid-2000s, did clock speeds stop increasing and power consumption stop going down, even though transistors continued to get smaller? Well, I researched this question a few years ago, and the surprising answer is: they would have kept going if we had been willing to make our chips colder and colder. To have continued Dennard scaling to the present day, we'd need, like, cryogenically frozen data centers. Here's the connection to temperature. If you don't drop the temperature, your electrical signals have to overcome the random jiggling of the atoms and electrons in the circuit, which is what temperature is: the average kinetic energy of the particles in your material. The way you overcome the "thermal noise" this introduces into your circuit is with voltage. So you can't drop your voltage, which means you can't drop your power, and, as it turns out, if you can't drop your voltage and power, you can't keep doubling your clock speed either: 4x as many transistors, each switching twice as fast without using any less power, would make the chip overheat.
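The paragraph above describes the temperature link qualitatively. One common way to put numbers on it (an assumed mechanism here; the post itself just says "thermal noise") is the thermal voltage kT/q: an ideal MOSFET needs at least (kT/q)·ln 10 of gate voltage, about 60 mV at room temperature, to change its off-state current by 10x, so the supply voltage can't shrink much further without transistors leaking badly. The sketch below computes that limit at a few temperatures, and also the power-density multiplier when dimensions shrink by k and clock speed still rises by k while voltage stays fixed. All function names are hypothetical, and the model ignores leakage power entirely, so treat it as an illustration rather than a device simulation.

```python
import math

BOLTZMANN = 1.380649e-23             # J/K (exact in the 2019 SI)
ELEMENTARY_CHARGE = 1.602176634e-19  # C (exact in the 2019 SI)


def thermal_voltage(temp_kelvin: float) -> float:
    """kT/q in volts: the voltage scale set by thermal energy at this temperature."""
    return BOLTZMANN * temp_kelvin / ELEMENTARY_CHARGE


def min_subthreshold_swing_mv(temp_kelvin: float) -> float:
    """Ideal-MOSFET limit: gate millivolts needed per 10x change in off-state current."""
    return thermal_voltage(temp_kelvin) * math.log(10) * 1000


def power_density_without_voltage_scaling(k: float) -> float:
    """Dynamic power density multiplier if dimensions shrink by k, clock speed
    still rises by k, but voltage stays fixed (P = C * V^2 * f per transistor)."""
    capacitance, voltage, clock_speed = 1 / k, 1.0, k
    power_per_transistor = capacitance * voltage ** 2 * clock_speed  # stays at 1
    transistors_per_area = k ** 2
    return power_per_transistor * transistors_per_area


for label, temp in [("room temperature", 300.0),
                    ("liquid nitrogen", 77.0),
                    ("liquid helium", 4.2)]:
    print(f"{label:>16} ({temp:5.1f} K): "
          f"kT/q = {thermal_voltage(temp) * 1000:6.2f} mV, "
          f"min swing = {min_subthreshold_swing_mv(temp):6.2f} mV/decade")

print("power density after one halving at fixed voltage:",
      power_density_without_voltage_scaling(2))
```

Going from 300 K to 77 K (liquid nitrogen) shrinks the thermal voltage, and with it the minimum swing, by roughly a factor of four, which is the sense in which colder chips would tolerate lower voltages. The last line shows the other half of the argument: with voltage stuck, one halving of dimensions at double the clock quadruples the power per unit area.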
