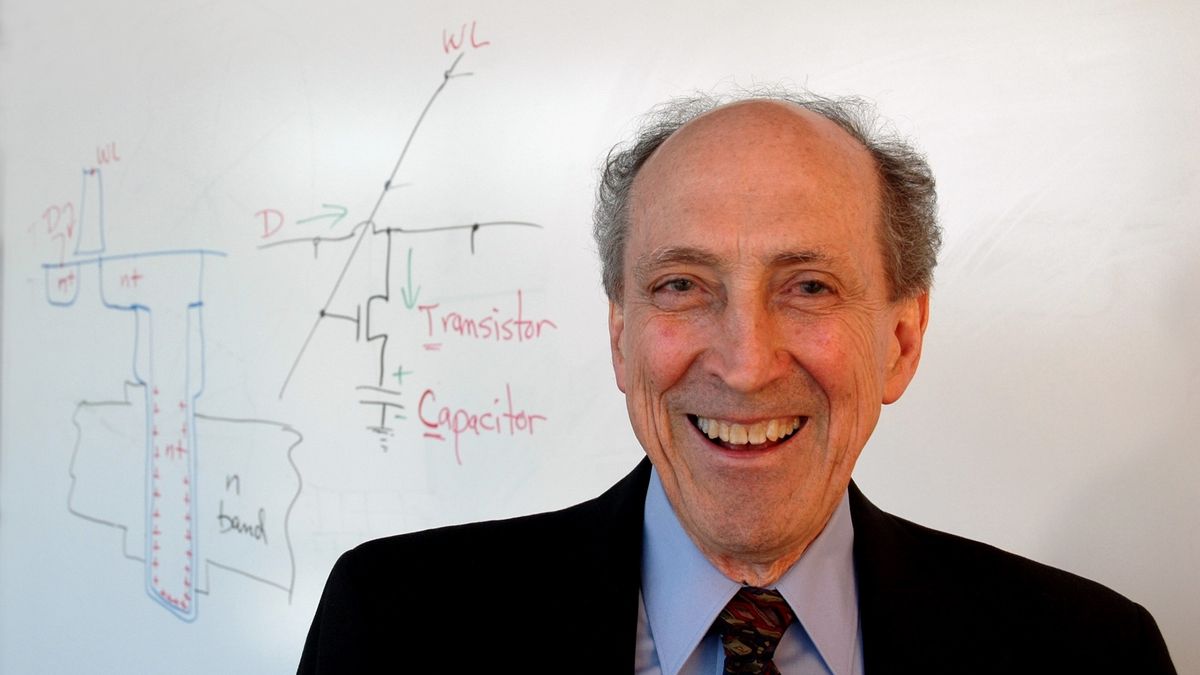

Using large language models to test the predictive power of different schools of political thought. Philip E. Tetlock is a legend in the field of futurology, having tested the predictive ability of public pundits (the topic of his first book), and run a decade-plus-long forecasting experiment recording and scoring teams of predictors to see who is and who isn't good at predicting the future (the topic of his second book). He now proposes using large language models (LLMs) to reproduce the human practitioners of different schools of political thought. He says:

"With current or soon to be available technology, we can instruct large language models (LLMs) to reconstruct the perspectives of each school of thought, circa 1990, and then attempt to mimic the conditional forecasts that flow most naturally from each intellectual school. This too would be a multi-step process:"

"1. Ensuring the LLMs can pass ideological Turing tests and reproduce the assumptions, hypotheses and forecasts linked to each school of thought. For instance, does Mearsheimer see the proposed AI model of his position to be a reasonable approximation? Can it not only reproduce arguments that Mearsheimer explicitly endorsed from 1990-2024 but also reproduce claims that Mearsheimer never made but are in the spirit of his version of neorealism. Exploring views on historical counterfactual claims would be a great place to start because the what-ifs let us tease out the auxiliary assumptions that neo-realists must make to link their assumptions to real-world forecasts. For instance, can the LLMs predict how much neorealists would change their views on the inevitability of Russian expansionism if someone less ruthless than Putin had succeeded Yeltsin? Or if NATO had halted its expansion at the Polish border and invited Russia to become a candidate member of both NATO and the European Union?"

"2. Once each school of thought is satisfied that the LLMs are fairly characterizing, not caricaturing, their views on recent history (the 1990-2024 period), we can challenge the LLMs to engage in forward-in-time reasoning. Can they reproduce the forecasts for 2025-2050 that each school of thought is generating now? Can they reproduce the rationales, the complex conditional propositions, underlying the forecasts -- and do so to the satisfaction of the humans whose viewpoints are being mimicked?"

"3. The final phase would test whether the LLMs are approaching superhuman intelligence. We can ask the LLMs to synthesize the best forecasts and rationales from the human schools of thought in the 1990-2024 period, and create a coherent ideal-observer framework that fits the facts of the recent past better than any single human school of thought can do but that also simultaneously recognizes the danger of over-fitting the facts (hindsight bias). We can also then challenge these hypothesized-to-be-ideal-observer LLMs to make more accurate forecasts on out-of-sample questions, and craft better rationales, than any human school of thought."

I'm glad he included that "soon to be available technology" caveat. I've noticed that LLMs, when asked to imitate someone, pick up the superficial aspects of that person's speaking style but rely on their own conceptual model for the actual thought content -- they don't successfully imitate that person's way of thinking. The conceptual model an LLM learned during pretraining is so ingrained that all its deeper thinking is based on it. If you ask ChatGPT to write a rap about the future of robotics and artificial intelligence in the style of Snoop Dogg, it will produce a rap that superficially mimics Snoop's style but doesn't reflect how he thinks on a deeper level -- it won't generate words the real Snoop Dogg would actually say. But it's entertaining. There's one YouTuber I know of who, since he couldn't get people who disagreed with him to debate him, decided to ask ChatGPT to imitate a particular person with an opposing political point of view. ChatGPT couldn't really imitate that person, and the conversations became really boring. Maybe that's why he stopped doing that.

Anyway, it looks like the paper is paywalled, but someone with access lifted the above text and put it on their blog, and I lifted it from the blog.

Tetlock on testing grand theories with AI -- Marginal Revolution