"Large language models can be 'prompted' to perform a range of natural language processing tasks, given some examples of the task as input. However, these models often express unintended behaviors such as making up facts, generating biased or toxic text, or simply not following user instructions. This is because the language modeling objective used for many recent large language models -- predicting the next token on a webpage from the internet -- is different from the objective 'follow the user's instructions helpfully and safely'."

So the question is: how do you get these language models to not be "misaligned"? Or, to phrase it without the double negative, how do you get them to be "aligned"? "We want language models to be helpful (they should help the user solve their task), honest (they shouldn't fabricate information or mislead the user), and harmless (they should not cause physical, psychological, or social harm to people or the environment)."

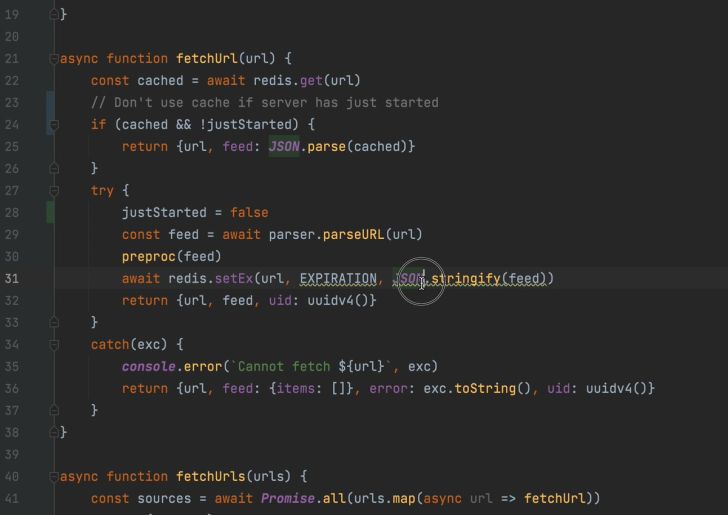

So what do they do? First, they use two neural networks instead of one. The main one starts off as a regular language model like GPT-3; in fact, it is GPT-3, in small and large versions. From there it is "fine-tuned" using a 3-step process that starts with humans. They hired 40 "labelers". That name makes it sound like they were just sticking labels on things, but they were actually writing complete answers to questions by hand, questions like "Explain the moon landing to a 6-year old." In the parlance of supervised learning, this is technically "labeling": the term started out meaning simple category labels, but any human-provided "correct answer" is called a "label". (The "question", by the way, is called the "prompt" here.) In short, they hand-write full answers for the neural network to learn from, giving it complete prompt-and-answer pairs for supervised learning.
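To make the supervised step concrete, here is a minimal sketch of how one labeler-written prompt/answer pair could be turned into next-token training data. It assumes a hypothetical whitespace `tokenize` function (real models use a subword tokenizer), a hypothetical `<eos>` end marker, and that the loss is counted only on the answer tokens; the paper's actual data pipeline may differ.

```python
import math
from typing import List, Tuple


def tokenize(text: str) -> List[str]:
    # Hypothetical toy tokenizer: split on whitespace (real models use subword tokens).
    return text.split()


def build_sft_example(prompt: str, answer: str) -> Tuple[List[str], List[str], List[int]]:
    """Turn one (prompt, labeler answer) pair into next-token training data.

    At position i the model has seen inputs[: i + 1] and should predict targets[i].
    loss_mask[i] is 1 only when targets[i] belongs to the labeler's answer
    (an assumption of this sketch: the prompt itself is not graded).
    """
    prompt_toks = tokenize(prompt)
    answer_toks = tokenize(answer) + ["<eos>"]  # hypothetical end-of-answer marker
    tokens = prompt_toks + answer_toks
    inputs, targets = tokens[:-1], tokens[1:]
    # targets[i] is tokens[i + 1], which is an answer token once i + 1 >= len(prompt_toks)
    loss_mask = [1 if i + 1 >= len(prompt_toks) else 0 for i in range(len(targets))]
    return inputs, targets, loss_mask


def masked_nll(target_probs: List[float], loss_mask: List[int]) -> float:
    """Average negative log-likelihood the model assigns to the answer tokens only."""
    losses = [-math.log(p) for p, keep in zip(target_probs, loss_mask) if keep]
    return sum(losses) / len(losses)


inputs, targets, mask = build_sft_example(
    "Explain the moon landing to a 6-year old.", "People flew a rocket to the moon."
)
print(targets[mask.index(1)])  # prints People: the first graded target is the answer's first word
```

Masking the prompt means the model is graded only on reproducing the labeler's answer, which is the part the humans actually wrote for it to learn from.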

This data is used to fine-tune the neural network, but the process doesn't stop there. The next step creates the second neural network, the "reward model". If you're wondering why it's called a "reward" model, it's because it will be used for reinforcement learning. This is OpenAI, and they like reinforcement learning. But reinforcement learning doesn't come in until step 3. In step 2, we take a prompt and have several versions of the first model generate outputs. We show those outputs to humans, who rank them from best to worst, and that ranking data is used to train the reward model. Like the first step, this is also supervised learning.
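The step-2 training signal can be sketched as follows. This assumes the pairwise setup described in the InstructGPT paper: a best-to-worst ranking of K outputs is expanded into K(K-1)/2 "this one beat that one" pairs, and the reward model is trained to give the preferred output the higher score. The scores below are hard-coded stand-ins for the reward model's outputs, and the function names are hypothetical.

```python
import math
from itertools import combinations
from typing import Dict, List, Tuple


def ranking_to_pairs(ranked: List[str]) -> List[Tuple[str, str]]:
    """Expand a best-to-worst ranking into (preferred, rejected) pairs.

    combinations() keeps input order, so the first item of each pair is the
    one the humans ranked higher. K outputs give K * (K - 1) / 2 pairs.
    """
    return list(combinations(ranked, 2))


def softplus(x: float) -> float:
    # Numerically stable log(1 + exp(x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def pairwise_loss(scores: Dict[str, float], ranked: List[str]) -> float:
    """Mean of -log(sigmoid(r_preferred - r_rejected)) over all ranked pairs."""
    pairs = ranking_to_pairs(ranked)
    # -log(sigmoid(d)) == log(1 + exp(-d)) == softplus(-d)
    return sum(softplus(-(scores[a] - scores[b])) for a, b in pairs) / len(pairs)


# Hypothetical reward-model scores for four outputs to the same prompt.
scores = {"A": 2.0, "B": 1.0, "C": 0.5, "D": -1.0}
human_ranking = ["A", "B", "C", "D"]  # labelers' order, best to worst
print(len(ranking_to_pairs(human_ranking)))  # 6 comparisons from one ranking
print(pairwise_loss(scores, human_ranking) < pairwise_loss(scores, human_ranking[::-1]))
```

A reward model whose scores agree with the human ranking gets a lower loss than one that has the order backwards, which is what training pushes toward.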

Now we get to step 3, where the magic happens. Instead of training the language model with supervised learning, we switch to reinforcement learning, which requires a "reward" function. Reinforcement learning is best known from games, such as Atari games, chess, and Go. In Atari games, the score acts as the reward, while in chess or Go, the ultimate win or loss of the game serves as the reward. None of that translates well to language, so what to do? At this point you can probably guess the answer: we trained a model called the "reward model", so the reward model provides the reward signal. As long as it's reasonably good, the language model will improve when trained on that signal. On each cycle, a prompt is sampled from the dataset, the language model generates an output, and the reward model calculates the reward for that output, which then feeds back to update the parameters of the language model. I'm going to skip a detailed explanation of the algorithms used, but if you're interested, they are proximal policy optimization (PPO) and PPO-ptx, a variant that also mixes in updates that increase the log likelihood of the pretraining distribution, to limit performance regressions on standard NLP tasks (see below for more on PPO).
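Here is a toy version of the step-3 loop, written under heavy simplifying assumptions: a single prompt, a "language model" that just chooses among three canned responses, hard-coded reward-model scores, and plain REINFORCE (a simpler policy-gradient method) swapped in for PPO. It does include a penalty for drifting away from the supervised model, as the paper's reward does; the penalty weight `BETA`, the learning rate, and every other name here are hypothetical. PPO-ptx's pretraining term is not modeled.

```python
import math
import random
from typing import List


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    s = sum(exps)
    return [e / s for e in exps]


# Hypothetical toy setup: one prompt, three canned responses.
RESPONSES = ["helpful answer", "made-up facts", "ignores the question"]
REWARD_MODEL = [1.0, -1.0, -0.5]  # stand-in for the trained reward model's scores
SFT_LOGITS = [0.0, 0.0, 0.0]      # frozen reference policy (the supervised model)
BETA = 0.1                        # weight of the drift penalty (assumed value)
LR = 0.5                          # learning rate (assumed value)


def train(steps: int = 1000, seed: int = 0) -> List[float]:
    rng = random.Random(seed)
    logits = list(SFT_LOGITS)        # the RL policy starts as a copy of the SFT model
    ref_probs = softmax(SFT_LOGITS)
    baseline = 0.0                   # running average reward, to reduce variance
    for _ in range(steps):
        probs = softmax(logits)
        # The "language model" generates an output by sampling from its policy.
        y = rng.choices(range(len(RESPONSES)), weights=probs)[0]
        # Reward model score minus a penalty for drifting from the SFT model.
        reward = REWARD_MODEL[y] - BETA * math.log(probs[y] / ref_probs[y])
        advantage = reward - baseline
        baseline = 0.9 * baseline + 0.1 * reward
        # REINFORCE: d log softmax(logits)[y] / d logits[k] = 1[k == y] - probs[k]
        for k in range(len(logits)):
            logits[k] += LR * advantage * ((1.0 if k == y else 0.0) - probs[k])
    return softmax(logits)


final = train()
for text, p in zip(RESPONSES, final):
    print(f"{text}: {p:.3f}")
```

The drift penalty is there because the reward model is only an approximation of human preferences; without it, the policy can wander toward outputs that exploit the reward model's blind spots.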

Anyway, they call their resulting model "InstructGPT", and they found people significantly prefer InstructGPT outputs over outputs from GPT-3. In fact, people preferred outputs from a relatively small 1.3-billion-parameter InstructGPT model to those of the huge 175-billion-parameter GPT-3 model, which is roughly 135 times bigger. When they made a 175-billion-parameter InstructGPT model, its outputs were preferred over those of the same-size GPT-3 model 85% of the time.

They found InstructGPT models showed improvements in truthfulness over GPT-3. "On the TruthfulQA benchmark, InstructGPT generates truthful and informative answers about twice as often as GPT-3."

They tested for "toxicity" and "bias" and found InstructGPT had small improvements over GPT-3 in toxicity, but not in bias. "To measure toxicity, we use the RealToxicityPrompts dataset and conduct both automatic and human evaluations. InstructGPT models generate about 25% fewer toxic outputs than GPT-3 when prompted to be respectful."

They also note that, "InstructGPT still makes simple mistakes. For example, InstructGPT can still fail to follow instructions, make up facts, give long hedging answers to simple questions, or fail to detect instructions with false premises."

Aligning language models to follow instructions

#solidstatelife #ai #nlp #openai #gpt3