Kaspersky, the company that makes anti-virus software that some of you out there probably use (although maybe you'll rethink that after reading this), has been accused of making neural net software that's been added to Iranian drones and sent to battle in Ukraine.

So you'll see this link is to "Part 2" of a story. "Part 1" is about how a company called Albatross located in the Alabuga special economic zone, which is located in "the Republic of Tatarstan", which is not a separate country, but a state within Russia that is called a "Republic" anyway instead of "Oblast" which is the usual word for what would correspond approximately to a "state" in our country (well, assuming your country is the US, which it might not be, as there are people from everywhere here on FB, but you probably have something analogous in your country, "Province" for example), and is located -- if you've ever heard of the city of Kazan, Kazan in the capitol of Tatarstan -- ok that was a bit long for a sub-clause, where was I? Oh yeah, a company called Albatross in the Alabuga special economic zone in Tatarstan got hacked, and what the documents revealed is that this company, "Albatross" was making "motor boats", but "motor boats" was a code name for drones (and "bumpers" was the code name for the warheads they carried), and more specifically the "Dolphin 632 motor boat" was really the Iranian Shahed-136 UAV, which got renamed to the Geran-2 when procured by the Russian military.

"Part 2" which is the link here goes into the Kaspersky connection. Allegedly two people at Kaspersky previously took part in a contest, called ALB-search, to make a neural network on a drone that could find a missing person. In the military adaptation, it finds enemy soldiers. Kaspersky Lab made a subdivision called Kaspersky Neural Networks.

The article links to a presentation regarding a neural network for a drone for agriculture, with slides about assessment of crop quality, crop counting, weed detection, land inventory, and such, but it goes on to describe searching for people and animals, UAV detection (detection of other drones in its surroundings), and even traffic situation analysis.

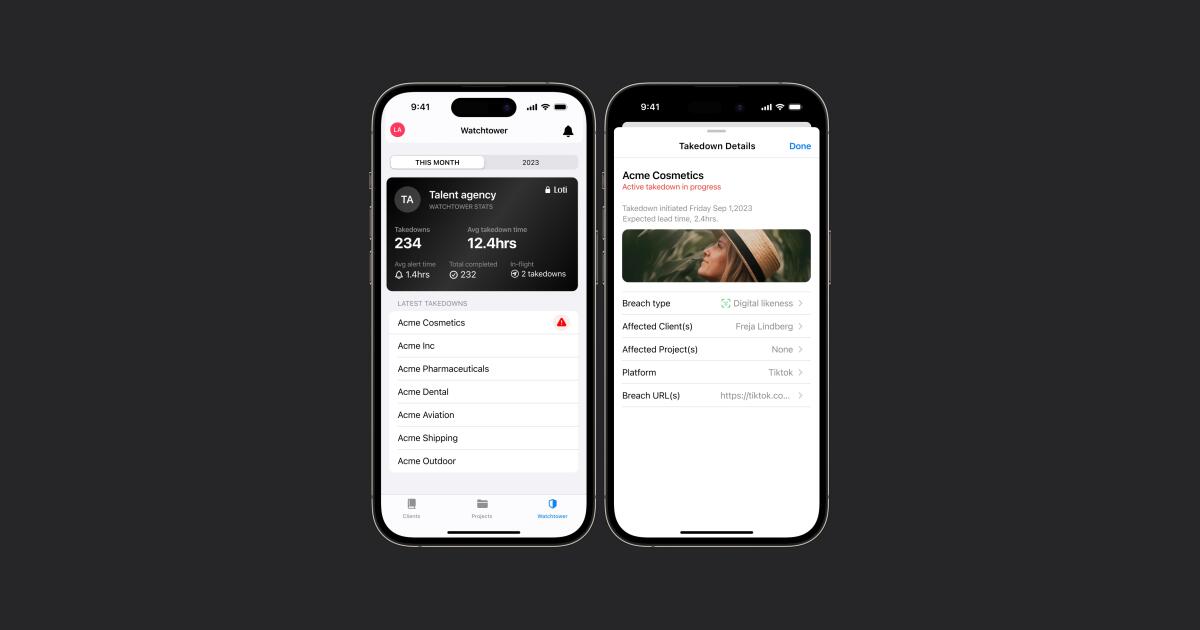

There's also a system called Kaspersky Antidrone, which is supposed to be able to hijack, basically, control of someone else's drone within a controlled airspace.

The article alleges Kaspersky was working with Albatross not only to deploy their neural networks to Albatross drones and to use them for detection of enemy soldiers but to develop them into artillery spotters as well. This is all with an on-board neural network that runs directly on the drone.

If true, this would indicate advancement of drones in the Ukraine war, which, so far I've heard very little of neural networks running on board on drones, as well as advancement of cooperation between Russia and Iran as well as integration of civilian companies such as Kaspersky into the war effort.

This information comes from a website called InformNapalm which I haven't seen before but they say was created by some Ukrainians as a "citizen journalism" site following the Russian annexation of Crimea in 2014.

Kaspersky has denied the allegations (article on that below).

AlabugaLeaks Part 2: Kaspersky Lab and neural networks for Russian military drones

#solidstatelife #ai #computervision #uavs