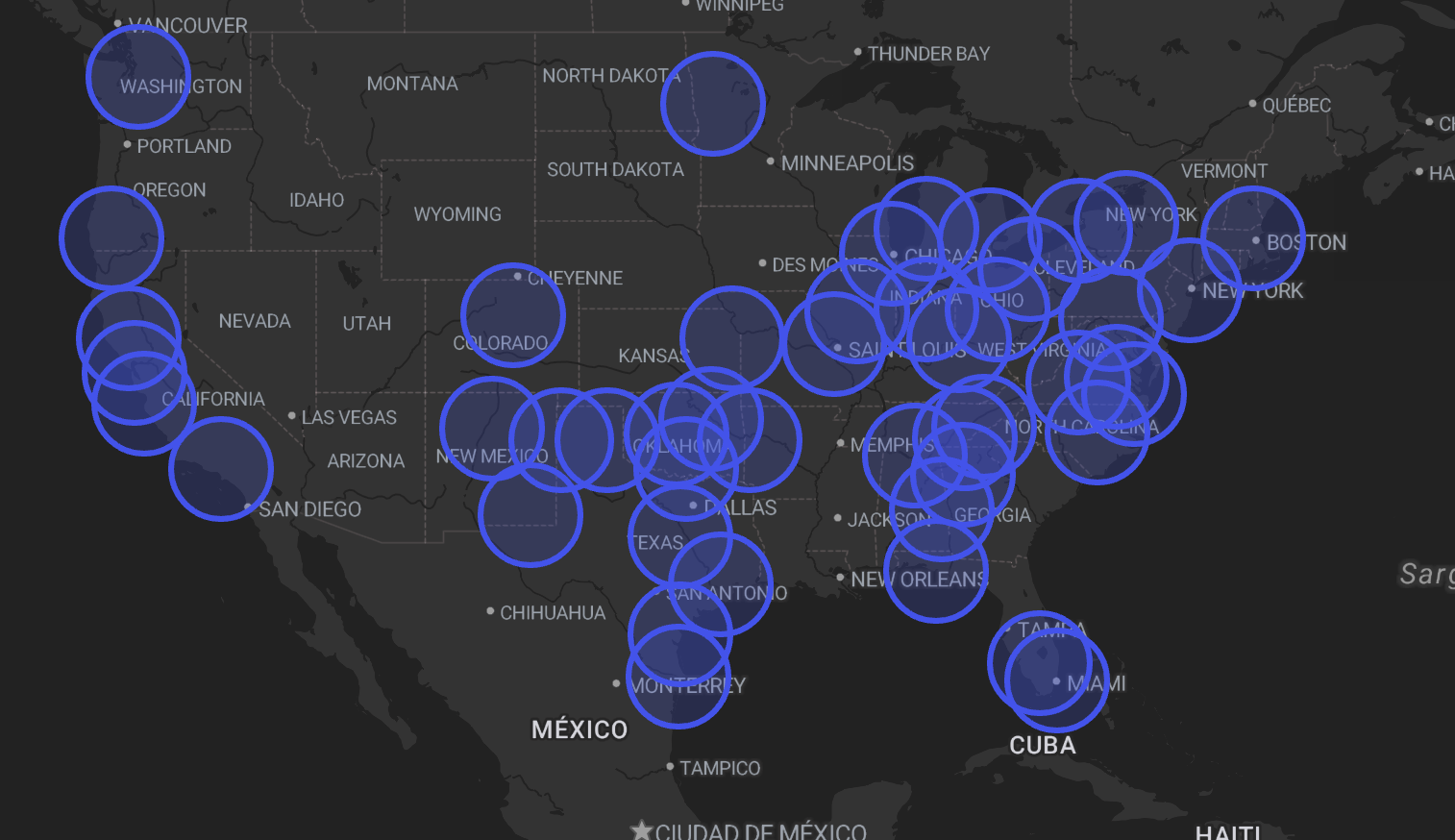

Diffusion Illusions: Flip illusions, rotation overlays, twisting squares, hidden overlays, Parker puzzles...

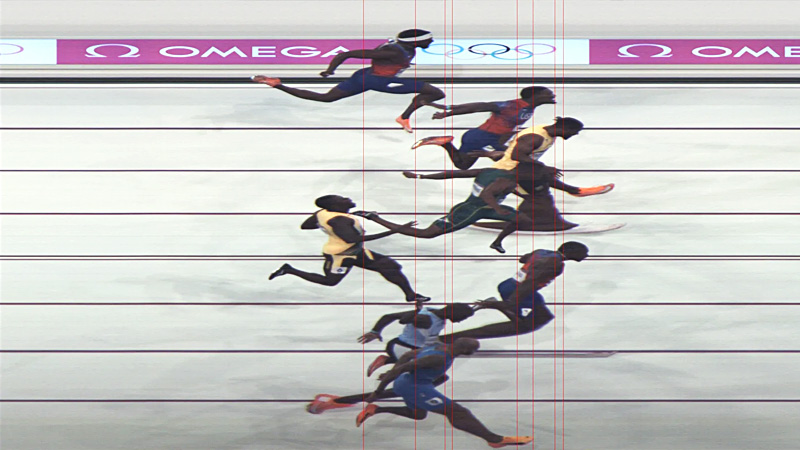

If you've never heard of "Parker puzzles": Matt Parker, the math YouTuber, asked this research team to make him a jigsaw puzzle with two solutions, one a teacup and the other a doughnut.

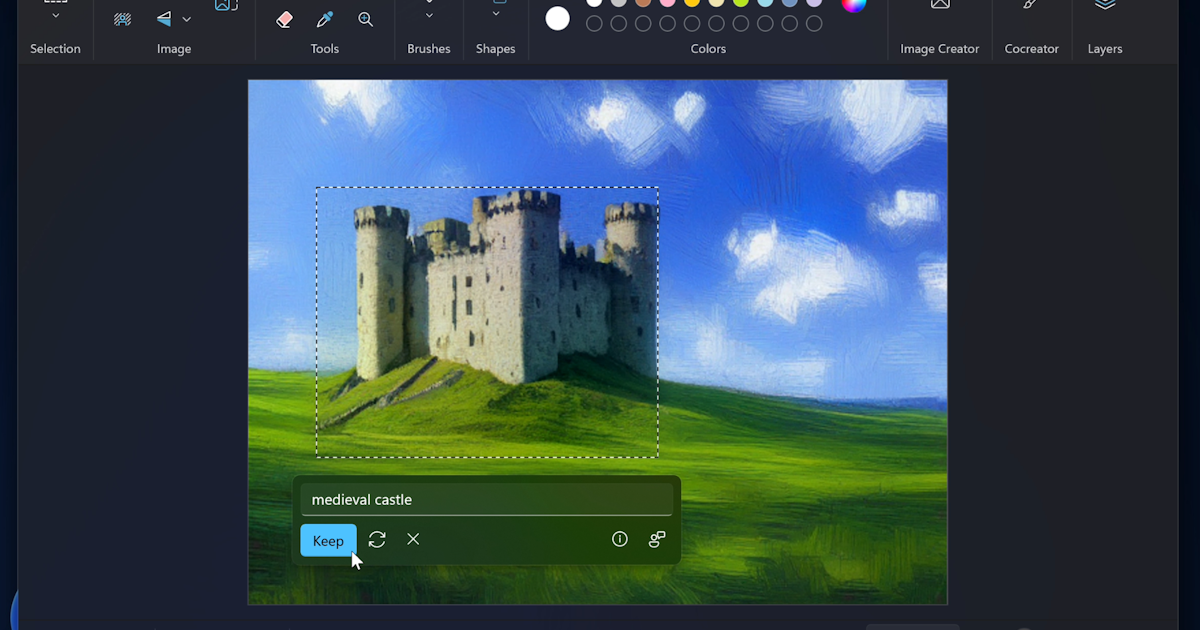

The system they made starts with diffusion models, which are the kind of model that generates an image from a text prompt you type in. Napoleon as a cat or unicorn astronauts or whatever.

What if you could generate two images at once that are mathematically related somehow?

That's what the Diffusion Illusions system does. Actually it can even do more than two images.

First I must admit, the system uses an image parameterization system called Fourier Features Networks, and I clicked through to the research paper for Fourier Features Networks, but I couldn't understand it. The "Fourier" part suggests sines and cosines, and yes, there's sine and cosine math in there, but there's also "bra-ket" notation, like you normally see in quantum physics, with partial differential equations written in that notation, and such. So, I don't understand how Fourier Features Networks work.

There's a video of a short talk from SIGGRAPH, and in it (at about 4:30 in), they claim that diffusion models, all by themselves, have "adversarial artifacts" that Fourier Features fixes. I have no idea why diffusion models on their own would have any kind of "adversarial artifacts" problems. So obviously if I have no idea what might cause the problems, I have no idea why Fourier Features might fix them.

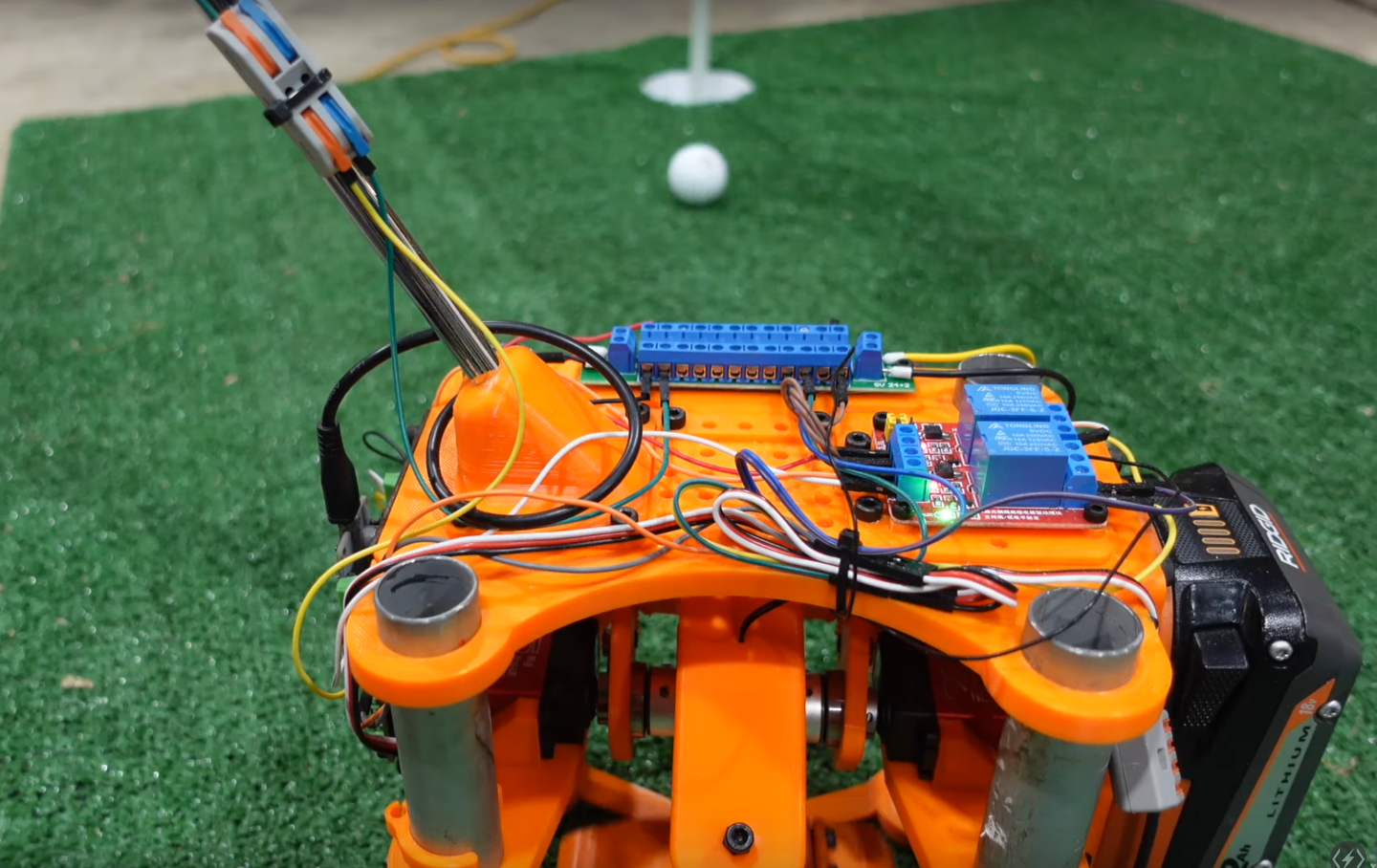

Ok, with that out of the way, here's how the system works. The output images that the system generates are called "prime" images. The fact that they have a name implies there's another type of image in the system, and there is: the "dream target" images. Central to the whole thing is the "arrangement process". The only requirement of the "arrangement process" function is that it is differentiable, so deep learning methods (which rely on gradients) can be applied to it. It is this "arrangement process" that decides whether you're generating flip illusions, rotation overlay illusions, hidden overlay illusions, twisting squares illusions, Parker puzzles, or something else; you could define your own.
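To make the "arrangement process" idea concrete, here is a minimal sketch of two arrangement functions in NumPy. The function names are hypothetical, and modeling an overlay as pixelwise multiplication is my assumption (it mimics stacking transparencies), not something taken from the paper. The point is only that each one maps prime images to the views a person would actually look at, using operations (rotation, multiplication) that gradients can pass through.

```python
import numpy as np

def flip_arrangement(prime):
    """One prime image, two views: as-is and turned upside down (rotated 180 degrees)."""
    return [prime, np.rot90(prime, 2)]

def rotation_overlay_arrangement(base, overlay):
    """Two square prime images, four views: the overlay is turned in 90-degree
    steps and stacked on the base. Stacking is modeled here as pixelwise
    multiplication, an assumption meant to mimic stacked transparencies."""
    return [base * np.rot90(overlay, k) for k in range(4)]

# Tiny grayscale stand-ins for real images (values in [0, 1]).
prime = np.array([[0.0, 0.2], [0.4, 0.6]])
upright, flipped = flip_arrangement(prime)

base = np.full((2, 2), 0.5)
overlay = np.array([[1.0, 0.0], [0.0, 0.0]])
views = rotation_overlay_arrangement(base, overlay)
```

A flip illusion needs one prime image and yields two views; the rotation overlay needs two prime images and yields four. Defining your own illusion would amount to writing another function of this shape.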

After this, it runs two training processes concurrently. The first is the standard way diffusion illusions are trained. It calculates an "error", also called a loss, from the target text conditioning (your prompts); this is called the score distillation loss.
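For reference, score distillation is usually written as a gradient rather than as a loss value. The form below is the one from the DreamFusion paper, where the term comes from; that Diffusion Illusions uses exactly this form and weighting is an assumption on my part.

$$
\nabla_\theta \mathcal{L}_{\mathrm{SDS}} = \mathbb{E}_{t,\epsilon}\left[\, w(t)\,\bigl(\hat{\epsilon}_\phi(x_t;\, y,\, t) - \epsilon\bigr)\,\frac{\partial x}{\partial \theta} \right]
$$

Here $\theta$ stands for whatever is being optimized (in this case the prime images), $x$ is a view produced by the arrangement process, $x_t$ is that view with noise $\epsilon$ added at noise level $t$, $y$ is the text prompt, $\hat{\epsilon}_\phi$ is the pretrained diffusion model's guess at the noise, and $w(t)$ is a weighting term. Roughly: the model's opinion about which noise was added tells you which way to nudge the image so it looks more like the prompt. The $\partial x / \partial \theta$ factor is where the differentiable arrangement process comes in.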

Apparently, however, there are cases where it is hard for the prime images to follow the gradients from the score distillation loss to images that create the illusion you are asking for. To get the system unstuck, they added the "dream target loss". The "dream target" images are images made from your text prompts individually. So, let's say you want to make a flip illusion that is a penguin viewed one way and a giraffe when flipped upside down. In this instance, the system will take the "penguin" prompt and create an image from it, then take the "giraffe" prompt, create a separate image from it, and flip that one upside down. These become the "dream target" images.

The system then computes a loss between the prime images and the "dream target" images, in addition to the original score distillation loss. If the system has any trouble converging on the "dream target" images, new "dream target" images are generated from the same original text prompts.
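Here is a toy sketch of the dream target part alone, for the penguin/giraffe flip illusion. Everything in it is an assumption for illustration: random arrays stand in for the generated "penguin" and "giraffe" images, the loss is a plain mean squared error, the gradient is derived by hand instead of by a deep learning framework, and the score distillation term is left out entirely.

```python
import numpy as np

def flip_views(prime):
    """Flip-illusion arrangement: the prime as-is and rotated 180 degrees."""
    return [prime, np.rot90(prime, 2)]

def dream_target_loss(prime, targets):
    """Sum over views of the mean squared error against that view's dream target."""
    return sum(np.mean((v - t) ** 2) for v, t in zip(flip_views(prime), targets))

def dream_target_grad(prime, targets):
    """Hand-derived gradient of dream_target_loss with respect to the prime."""
    upright_t, flipped_t = targets
    # A 180-degree rotation undoes itself, so the error seen in the flipped
    # view is rotated back onto the prime's own pixel grid. This is the
    # "flip the giraffe upside down" step.
    return 2.0 * ((prime - upright_t) + (prime - np.rot90(flipped_t, 2))) / prime.size

rng = np.random.default_rng(0)
penguin = rng.random((4, 4))  # stand-in for the image made from the "penguin" prompt
giraffe = rng.random((4, 4))  # stand-in for the image made from the "giraffe" prompt
targets = [penguin, giraffe]  # what the upright and flipped views should look like

prime = np.zeros((4, 4))
loss_before = dream_target_loss(prime, targets)
for _ in range(100):
    prime -= 2.0 * dream_target_grad(prime, targets)  # plain gradient descent
loss_after = dream_target_loss(prime, targets)
```

With only this loss, the prime image settles on the pixelwise average of the penguin and the upside-down giraffe. That is a compromise between the two targets, not an illusion, which is presumably why this loss is used alongside the score distillation loss and not in place of it.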

In this way, the system creates visual illusions. You can even print the images and turn them into real-life puzzles. For some illusions, you print on transparent plastic and overlap the images using an overhead projector.

Diffusion Illusions

#solidstatelife #ai #computervision #genai #diffusionmodels