"We Are the Robots: Machinic mirrors and evolving human self-conceptions"

Musings on the tendency for actual robots to become more "fluid" and less "robotic" than our conception of the "robotic aesthetic".

"The classic 1978 Kraftwerk song We Are the Robots was oddly popular in India when I was growing up."

"The song has an associated music video, featuring the band members playing their instruments with stereotypically robotic movements and flat affects."

The main point of this essay is the author's concept of "hyperorganicity": robots won't just become "fluid" like living organisms, but will go beyond them.

"In robotics, an emerging example of the rise of flowing, organic operating qualities can be found in drones (which are simpler than ground-based robots in many ways). Early drones, about 20 years ago, were being programmed using what are known as maneuver automata. This was done by having expert human pilots fly the drones around using remote controllers, and recording the maneuvers (such as barrel rolls) that transitioned between trim states (states like steady forward flight). [...] Drones programmed this way had a clear 'pose-to-pose' quality to how they flew."
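To make the "maneuver automata" idea concrete, here is a minimal sketch. It assumes the automaton is a graph whose nodes are trim states and whose edges are recorded maneuvers, and it plans a flight as a chain of those canned pieces. The state names, maneuver names, and the `plan` helper are hypothetical illustrations, not taken from any real drone system. Every trajectory it produces is a concatenation of fixed segments that start and end in steady states, which is one plausible reading of where the "pose-to-pose" quality comes from.

```python
from collections import deque

# Hypothetical trim states and maneuvers, for illustration only.
# Each maneuver is a recorded motion that starts in one trim state
# and ends in another (possibly the same one, e.g. a barrel roll).
MANEUVERS = {
    "accelerate": ("hover", "forward_flight"),
    "decelerate": ("forward_flight", "hover"),
    "barrel_roll": ("forward_flight", "forward_flight"),
    "pull_up": ("forward_flight", "climb"),
    "level_off": ("climb", "forward_flight"),
}


def plan(start: str, goal: str) -> list[str]:
    """Return a shortest chain of recorded maneuvers linking two trim states.

    Uses breadth-first search over the automaton's graph, so the result
    has the fewest maneuvers of any valid chain.
    """
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, path = frontier.popleft()
        if state == goal:
            return path
        for name, (src, dst) in MANEUVERS.items():
            # Only maneuvers that begin in the current trim state are allowed.
            if src == state and dst not in visited:
                visited.add(dst)
                frontier.append((dst, path + [name]))
    raise ValueError(f"no maneuver sequence from {start!r} to {goal!r}")
```

For example, `plan("hover", "climb")` returns `["accelerate", "pull_up"]`. The drone can only fly what a human pilot once flew and recorded. The machine-learned approach in the next excerpt is described as discovering motions no human could conceive of, so it would not be limited to a hand-built table like `MANEUVERS`.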

"But more recently, drones are being programmed to fly in ways no humans could fly. And this isn't just a matter of higher g-forces or tighter turning radii achievable with unpiloted vehicles. Not only can machines now produce motions that no humans can produce (directly with their bodies, or indirectly through pilot controls), no humans can even conceive of them. It takes machine learning to discover and grammatize higher-order motion primitives that exploit the full mobility envelope of a given machine."

"The evolutionary tendency of machines, given improving material and computational capabilities, is not towards perfection of static human notions of the machinic (artistically mimicked or conceptually modeled), but towards the hyperorganic."

"By hyperorganic, I mean an evolutionary mode that drives increasing complexity along both machinic and organic dimensions. This gives us machines from qualitatively distinct evolutionary design regimes. Machines that exhibit organic idioms but defy comparisons with specific biological organisms, and also exhibit alien aspects that don't fit organic idioms."

"Technology gets to parity with the organic, in terms of informational complexity, then begins to go past it to hyperorganic regimes."

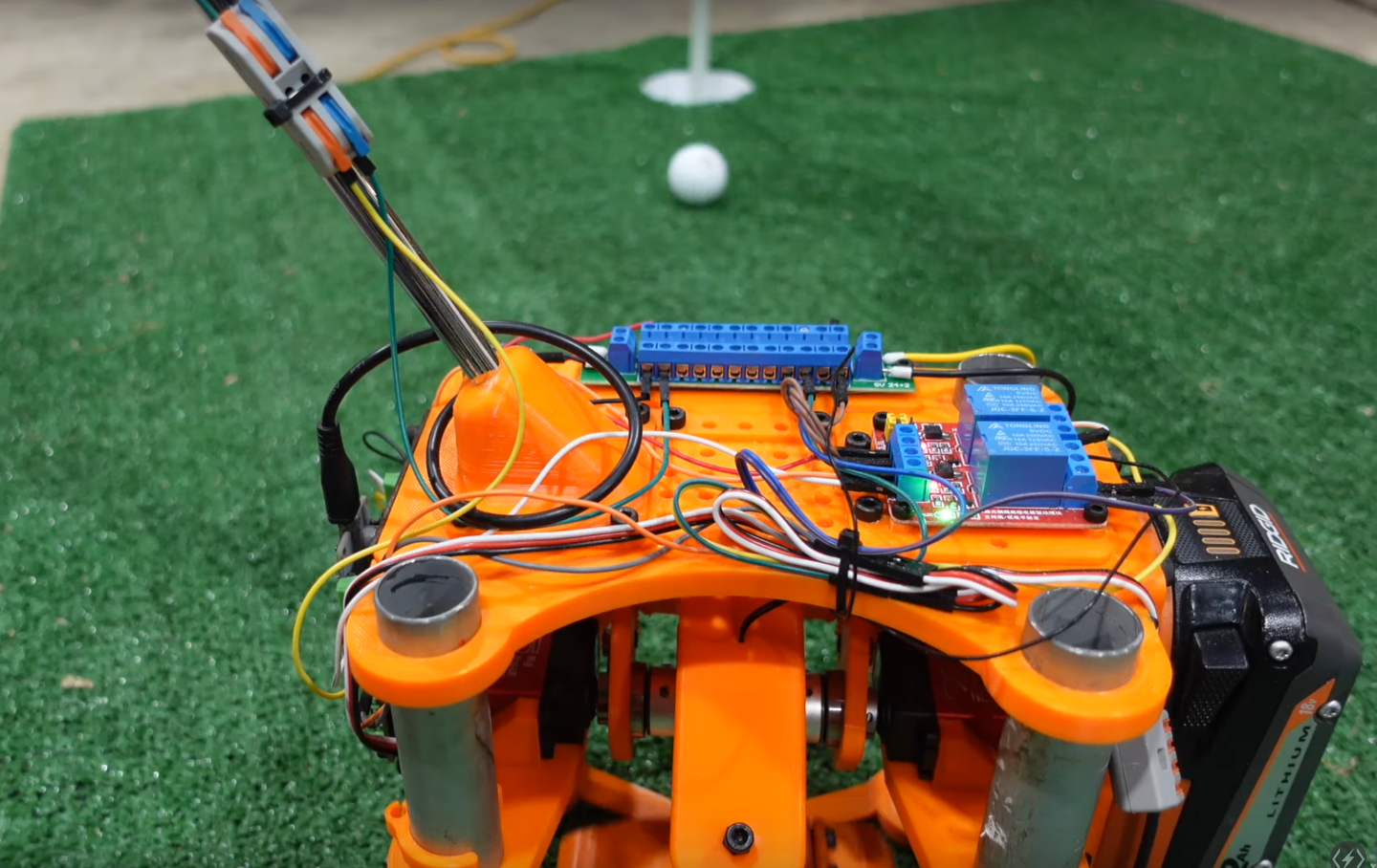

"Today's robots are much more flowing and organic than stereotypically machinic robots whose movements resemble the robot dance. They will soon be hyperorganic as advances in soft materials, weird actuators, and AI-discovered motion languages continue. The hyperorganic future is arriving not just linguistically, but materially."

He never mentions TRON, but reading this kept making me think of it. The original 1982 movie had a very "gridlike" feel, while the 2010 sequel, TRON: Legacy, had smooth, flowing curves everywhere. Even the colors, which were super bright and saturated in the original, became more pastel, with smooth gradients, in the sequel. Even though the sequel is in this sense "better", I still find something appealing about the original's "gridlike" aesthetic of bright, saturated colors.

![screenshot](/cdn.vox-cdn.com/uploads/chorus_asset/file/25624498/Screenshot_2024_09_16_at_12.46.11_PM.png)