"Full-body haptics via non-invasive brain stimulation."

"We propose & explore a novel concept in which a single on-body actuator renders haptics to multiple body parts -- even as distant as one's foot or one's hand -- by stimulating the user's brain. We implemented this by mechanically moving a coil across the user's scalp. As the coil sits on specific regions of the user's sensorimotor cortex it uses electromagnetic pulses to non-invasively & safely create haptic sensations, e.g., touch and/or forces. For instance, recoil of throwing a projectile, impact on the leg, force of stomping on a box, impact of a projectile on one's hand, or an explosion close to the jaw."

Hmm, somehow I don't think everyone's going to be doing this anytime soon. Or maybe I'm wrong and this is the missing piece that will let VR take off? Let's continue.

"The key component in our hardware implementation is a robotic gantry that mechanically moves the TMS coil across key areas of the user's scalp. Our design is inspired by a traditional X-Y-Z gantry system commonly found in CNC machines, but with key modifications that allow it to: (1) most importantly, conform to the curvature of the scalp around the pitch axis (i.e., front/back), which is estimated to be 18 degrees for the sensorimotor cortex area, based on our measurement from a standard head shape dataset; (2) accommodate different heads, including different curvatures and sizes; (3) actuate with sufficient force to move a medical-grade TMS coil (~1 kg); (4) actuate with steps smaller than 8.5 mm, as determined by our study; and, finally, (5) provide a structure that can be either directly mounted to a VR headset or suspended from the ceiling."

"We feature three actuators, respectively, to move the coil in the X- (ear-to-ear translation), Y- (nose-to-back translation), and Z- (height away from the scalp) axes. Since the X-axis exhibits most curvature as the coil moves towards the ear, we actuate it via a servo motor with a built-in encoder; this offers a reliable, fairly compact, strong way to actuate the coil."

"To account for curvature, the Z-axis needs to be lifted as the coil traverses the head."
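To get a feel for how much Z travel that implies, here is a toy geometric sketch. It is not the authors' method: it assumes the ear-to-ear cross-section of the head is a circular arc, and the 90 mm radius and the function name `scalp_height_mm` are made up for illustration.

```python
import math

# ASSUMPTION: the head's ear-to-ear cross-section is modelled as a circular
# arc. The radius is a hypothetical illustrative value, not from the paper.
ASSUMED_HEAD_RADIUS_MM = 90.0


def scalp_height_mm(x_mm: float, radius_mm: float = ASSUMED_HEAD_RADIUS_MM) -> float:
    """Height of the scalp surface relative to the top of the head.

    x_mm is the sideways (ear-to-ear) distance from the top of the head.
    The result is 0 at the top and negative towards the ears, so the Z-axis
    has to change by this amount to keep the coil-to-scalp gap constant.
    """
    if abs(x_mm) > radius_mm:
        raise ValueError("x_mm lies outside the modelled head")
    return math.sqrt(radius_mm**2 - x_mm**2) - radius_mm


if __name__ == "__main__":
    for x in (0.0, 27.0, 54.0):
        print(f"x = {x:5.1f} mm -> scalp height {scalp_height_mm(x):7.2f} mm")
```

Under this toy model, moving the coil from 54 mm off-center back to the top of the head means raising the Z-axis by 18 mm.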

"We used a medically compliant magnetic stimulator (Magstim Super Rapid) with a butterfly coil (Magstim D70)."

"We stimulated the right hemisphere of the sensorimotor cortex (corresponding to the left side of the body) with three consecutive 320 microsecond TMS pulses separated by 50 ms, resulting in a stimulation of ~150 ms. While we opted to only stimulate the right side of the brain to avoid fatigue, the results will be generalizable to the left side."
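The timing arithmetic is easy to check. The sketch below uses the quoted numbers (three pulses, 320 microseconds each, 50 ms apart). How the ~150 ms total is counted is my assumption: it matches giving each pulse its own 50 ms slot, whereas measuring from the first pulse's start to the last pulse's end gives about 100 ms. All names are made up for illustration.

```python
# Values quoted from the paper, in integer microseconds to avoid float noise.
PULSE_WIDTH_US = 320
PULSE_INTERVAL_US = 50_000
N_PULSES = 3


def pulse_onsets_us(n: int = N_PULSES, interval_us: int = PULSE_INTERVAL_US) -> list[int]:
    """Onset time of each pulse, assuming the interval is onset-to-onset."""
    return [i * interval_us for i in range(n)]


def first_onset_to_last_end_us() -> int:
    """Time from the start of the first pulse to the end of the last one."""
    return pulse_onsets_us()[-1] + PULSE_WIDTH_US


def slot_duration_us() -> int:
    """ASSUMPTION: each pulse is counted as occupying one full 50 ms slot."""
    return N_PULSES * PULSE_INTERVAL_US


if __name__ == "__main__":
    print("onsets (ms):", [t / 1000 for t in pulse_onsets_us()])
    print("first onset to last end (ms):", first_onset_to_last_end_us() / 1000)
    print("slot-based duration (ms):", slot_duration_us() / 1000)
```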

"We identified two locations on the participant's scalp that yielded minimum stimulation intensities to elicit observable limb movement (i.e., motor threshold) for the hand and foot."

"For each location, the intensity was set to 10% below the hand's motor threshold. The amplitude of TMS stimulation was reported in percentage (100% is the stimulator's maximum). During a trial, the experimenter stimulated the target location. Afterward, the participant reported the strongest point and area of a perceived touch as well as a keyword (or if nothing was felt). Then, the experimenter increased the intensity by 5% while ensuring the participant's comfort & consent, and moved to the next trial. This process continued until the participant reported the same location and same quality of sensations for two consecutive trials, or the intensity reached the maximum (i.e., 100%)."
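The procedure reads like a simple ascending staircase, so here is a minimal sketch of it. It is my reading, not the authors' code, and it makes three assumptions: "10% below" means 10 percentage points of stimulator output, two consecutive "nothing felt" reports do not count as a match, and a trial is still run at 100% before stopping. The function and type names are hypothetical.

```python
from typing import Callable, List, Optional, Tuple

# A report is (location, quality keyword), or None if nothing was felt.
Report = Optional[Tuple[str, str]]


def run_intensity_staircase(
    hand_motor_threshold: int,
    get_report: Callable[[int], Report],
    step: int = 5,
    max_intensity: int = 100,
) -> List[Tuple[int, Report]]:
    """Raise intensity in 5-point steps until two consecutive trials match.

    ASSUMPTION: intensities are percentage points of stimulator output and
    the starting point is the hand's motor threshold minus 10 points.
    """
    intensity = hand_motor_threshold - 10
    previous: Report = None
    trials: List[Tuple[int, Report]] = []
    while True:
        report = get_report(intensity)  # stimulate, then ask the participant
        trials.append((intensity, report))
        # Stop when the same location and quality are reported twice in a row.
        if report is not None and report == previous:
            break
        # Stop once the stimulator's maximum has been tried.
        if intensity >= max_intensity:
            break
        previous = report
        intensity = min(intensity + step, max_intensity)
    return trials


if __name__ == "__main__":
    # Simulated participant who starts feeling a tingle in the hand at 60%.
    def fake_participant(intensity: int) -> Report:
        return ("hand", "tingle") if intensity >= 60 else None

    for intensity, report in run_intensity_staircase(60, fake_participant):
        print(intensity, report)
```

In the real study a human experimenter ran each step and checked the participant's comfort and consent before increasing the intensity; the callback here only stands in for the participant's report.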

"After each study session, we organized the participants' responses regarding touch sensations based on where the strongest point of the sensation was. We also annotated each trial to indicate which of the following body parts moved: 'none', 'jaw', 'upper arm', 'forearm', 'hand' (i.e., the palm), 'fingers', 'upper leg' (i.e., the thigh), 'lower leg', and 'foot'."

"Results suggest that we were able to induce, by means of TMS, touch sensations (i.e., only tactile in isolation of any noticeable movements) in two unique locations: hand and foot, which were both experienced by 75% of the participants."

"Our results suggest that we were able to induce, by means of TMS, force-feedback sensations (i.e., noticeable involuntary movements) in six unique locations: jaw (75%), forearm (100%), hand (92%), fingers (83%), lower-leg (92%), and foot (92%), which were all experienced by >75% of the participants. In fact, most of the actuated limbs were observed in almost all participants (>90%) except for the jaw (75%) and fingers (83%). The next most promising candidate would be the upper leg (58%)."

Surely they stopped there and didn't try to do a full-blown VR experience, right? Oh yes they did.

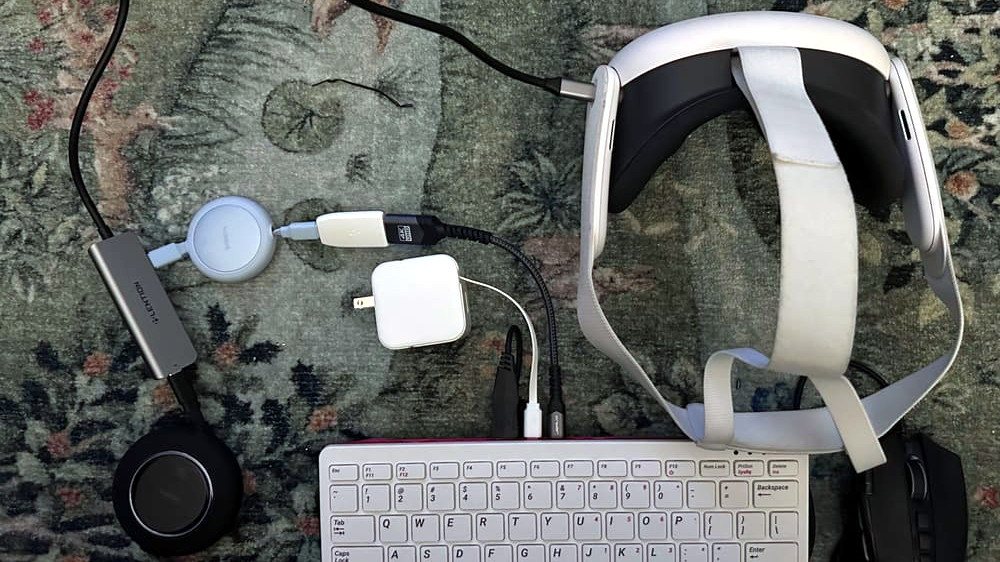

"Participants wore our complete device and a VR headset (Meta Quest 2), as described in Implementation. Their hands and feet were tracked via four HTC VIVE 3.0 Trackers attached with Velcro-straps. Participants wore headphones (Apple Airpods Pro) to hear the VR experience."

"Participants embodied the avatar of a cyborg trying to escape a robotics factory that has malfunctioned. However, when they find the escape route blocked by malfunctioning robots that fire at them, the VR experience commands our haptic device to render tactile sensation on the affected area (e.g., the left hand in this case, but both hands and feet are possible). To advance, participants can counteract by charging up their plasma-hand and firing plasma-projectiles to deactivate the robots. When they open the palm of their hands in a firing gesture, the VR experience detects this gesture and prompts our haptic device to render force-feedback and tactile sensations on the firing hand (e.g., the right hand in this case, but both hands can fire plasma shots). After this, the user continues to counteract any robots that appear, which can fire shots against any of the user's VR limbs (i.e., the hands or feet). The user is shot in their right foot -- just before this happens, the VR prompts our haptic device to render tactile sensation on the right foot. After a while, the user's plasma-hand stops working, and they need to recharge the energy. They locate a crate on the floor and stomp it with their feet to release its charging energy. Just before the stomping releases the energy, the VR commands our haptic device to render force-feedback and tactile sensation on the left leg. The user keeps fighting until they eventually find a button that opens the exit door; as they are about to press it, the VR requests that our haptic device render tactile sensation on the right hand. The user has escaped the factory."
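The walkthrough boils down to a lookup from VR events to haptic commands. Here is a small sketch of that mapping using the body parts and sensation types named in the quote. The event names, the `HapticCommand` class, and the `command_for` function are hypothetical; the paper excerpt does not describe the actual interface between the VR app and the device.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HapticCommand:
    """What the haptic device is asked to render (hypothetical structure)."""
    body_part: str
    tactile: bool
    force_feedback: bool


# Event names are made up; body parts and modalities follow the quoted text.
SCENE_EVENTS = {
    "robot_shot_hits_hand": HapticCommand("left hand", tactile=True, force_feedback=False),
    "fire_plasma": HapticCommand("right hand", tactile=True, force_feedback=True),
    "robot_shot_hits_foot": HapticCommand("right foot", tactile=True, force_feedback=False),
    "stomp_crate": HapticCommand("left leg", tactile=True, force_feedback=True),
    "press_exit_button": HapticCommand("right hand", tactile=True, force_feedback=False),
}


def command_for(event: str) -> HapticCommand:
    """Look up the haptic command for a VR event, failing loudly if unknown."""
    try:
        return SCENE_EVENTS[event]
    except KeyError:
        raise ValueError(f"no haptic command defined for event {event!r}") from None


if __name__ == "__main__":
    for name in SCENE_EVENTS:
        print(name, "->", command_for(name))
```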

Full-body haptics via non-invasive brain stimulation

#solidstatelife #vr #haptics