10 noteworthy AI research papers of 2023 that Sebastian Raschka read. I didn't read them, so I'm just going to pass along his blog post with his analyses.

1) Pythia: Insights from Large-Scale Training Runs -- noteworthy because it does indeed present insights from large-scale training runs, such as whether pretraining on duplicated data makes a difference.

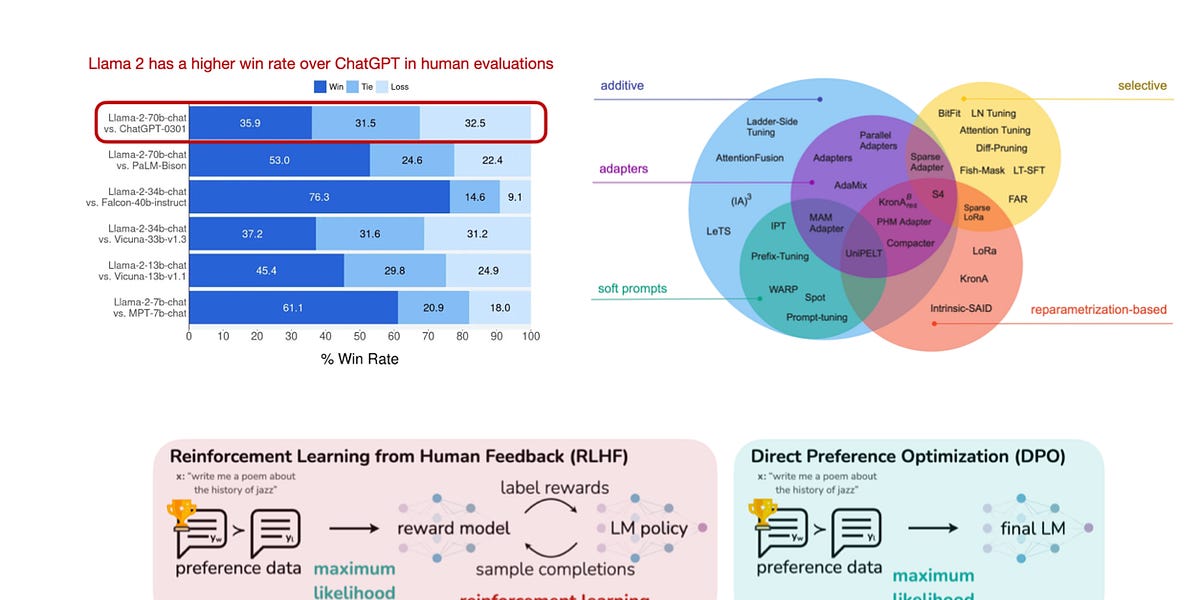

2) Llama 2: Open Foundation and Fine-Tuned Chat Models -- noteworthy because it introduces the popular Llama 2 family of models and explains them in depth.

3) QLoRA: Efficient Finetuning of Quantized LLMs -- this is a fine-tuning technique that is less resource intensive. LoRA stands for "low-rank adaptation" and the "Q" stands for "quantized". LoRA freezes the pretrained weights and trains only a small added update. "Low rank" is just a fancy way of saying that update is the product of two thin matrices with a small inner dimension, so there are far fewer numbers to train than in the full weight matrix. The "Q" part reduces resources further by "quantizing" the frozen pretrained weights, which means storing them with fewer bits (4 bits in QLoRA) and therefore lower precision.
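To make the two ideas concrete, here is a toy sketch in plain Python, not the QLoRA code. The frozen weight matrix is stored as 4-bit integers and dequantized on the fly, and a trainable low-rank update B @ A is added on top. Assumptions: the sizes, the names, and the quantizer are all made up for illustration; the sketch uses simple symmetric "absmax" rounding, whereas QLoRA itself uses a 4-bit NormalFloat data type.

```python
import random

# Hypothetical toy sizes: a D_OUT x D_IN frozen weight and a LoRA rank much smaller than either.
D_OUT, D_IN, RANK = 6, 8, 2

def matvec(m, v):
    """Multiply matrix m (list of rows) by vector v."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def quantize_absmax(w, bits=4):
    """Symmetric absmax quantization to signed integers.
    A simplified stand-in for QLoRA's 4-bit NormalFloat, not the real scheme."""
    qmax = 2 ** (bits - 1) - 1  # 7 for 4 bits
    scale = max(abs(x) for row in w for x in row) / qmax or 1.0  # avoid a zero scale
    q = [[round(x / scale) for x in row] for row in w]
    return q, scale

def dequantize(q, scale):
    return [[x * scale for x in row] for row in q]

random.seed(0)
W = [[random.gauss(0, 1) for _ in range(D_IN)] for _ in range(D_OUT)]     # pretrained, frozen
A = [[random.gauss(0, 0.01) for _ in range(D_IN)] for _ in range(RANK)]  # trainable, RANK x D_IN
B = [[0.0] * RANK for _ in range(D_OUT)]  # trainable, D_OUT x RANK, zero-init so training starts from W

Wq, scale = quantize_absmax(W)  # only the 4-bit integers and one scale are kept

def forward(x, scaling=1.0):
    """y = dequant(Wq) x + scaling * B (A x). LoRA usually sets scaling = alpha / r; fixed here."""
    base = matvec(dequantize(Wq, scale), x)  # frozen path
    delta = matvec(B, matvec(A, x))          # low-rank path: the only trainable part
    return [b + scaling * d for b, d in zip(base, delta)]
```

The savings come from the shapes: a full update to a 4096 x 4096 matrix has 16,777,216 entries, while a rank-8 update has 8 x (4096 + 4096) = 65,536.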

4) BloombergGPT: A Large Language Model for Finance -- noteworthy not just because it's a relatively large LLM pretrained on a domain-specific dataset, but because, he says, it "made me think of all the different ways we can pretrain and finetune models on domain-specific data, as summarized in the figure below." That figure is his own, in his post; it's not in the paper.

5) Direct Preference Optimization: Your Language Model is Secretly a Reward Model -- noteworthy because it takes on, head-on, the challenge of replacing Reinforcement Learning from Human Feedback (RLHF). "While RLHF is popular and effective, as we've seen with ChatGPT and Llama 2, it's also pretty complex to implement and finicky." The replacement technique, Direct Preference Optimization (DPO), skips training a separate reward model and instead fine-tunes the language model directly on pairs of preferred and rejected responses. This has actually been on my reading list for weeks and I haven't gotten around to it. Maybe I will one of these days, and you can compare my take with his, which you can read now.
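For a feel of what DPO actually optimizes, here is a minimal sketch of the per-example DPO loss as a plain function. It assumes you already have the summed log-probabilities of the preferred ("chosen") and dispreferred ("rejected") responses under the model being trained and under a frozen reference copy of it. The function name and the default `beta=0.1` are illustrative choices, not taken from any particular library.

```python
import math

def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """-log sigmoid(beta * [(chosen log-ratio) - (rejected log-ratio)]).

    Each argument is the summed token log-probability of a full response,
    under either the policy being trained or the frozen reference model.
    """
    chosen_logratio = policy_chosen_logp - ref_chosen_logp
    rejected_logratio = policy_rejected_logp - ref_rejected_logp
    margin = beta * (chosen_logratio - rejected_logratio)
    # -log(sigmoid(margin)) == softplus(-margin); computed in a numerically stable way
    z = -margin
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))

# Policy identical to the reference: loss is log(2).
print(dpo_loss(-10.0, -10.0, -10.0, -10.0))
# Policy shifted probability toward the chosen response: loss drops below log(2).
print(dpo_loss(-5.0, -12.0, -10.0, -10.0))
```

The reward model is "secret" in the sense that the log-ratio between policy and reference plays its role, so no separate reward network or RL loop is needed.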

6) Mistral 7B -- noteworthy, despite the paper's brevity, because it's the base model used for the first DPO model, Zephyr 7B, which has outperformed similarly sized models and set the stage for DPO to replace RLHF. It's additionally noteworthy for its "sliding window" attention, and because its follow-up, Mixtral 8x7B, adds a "mixture of experts" technique.
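Both techniques are easy to show in miniature. The sketch below builds a causal sliding-window attention mask and a top-k mixture-of-experts router for a single token. Assumptions: the window size, expert functions, and logits are toy values. In this sketch each position attends to itself and the previous `window - 1` positions, and exact off-by-one conventions differ between implementations. Mistral 7B's real window is 4,096 tokens, and Mixtral 8x7B routes each token to 2 of 8 experts.

```python
import math

def sliding_window_causal_mask(seq_len, window):
    """mask[i][j] is True when query position i may attend to key position j:
    no looking ahead (j <= i) and no looking further back than window - 1 steps."""
    return [[0 <= i - j < window for j in range(seq_len)] for i in range(seq_len)]

def top_k_moe(x, router_logits, experts, k=2):
    """Send one token x to its k highest-scoring experts and mix their outputs,
    weighting by a softmax taken over only the selected router logits."""
    top = sorted(range(len(router_logits)), key=lambda e: router_logits[e], reverse=True)[:k]
    m = max(router_logits[e] for e in top)  # subtract max for a stable softmax
    exps = {e: math.exp(router_logits[e] - m) for e in top}
    total = sum(exps.values())
    out = [0.0] * len(x)
    for e in top:  # experts not selected are never evaluated
        weight = exps[e] / total
        out = [o + weight * v for o, v in zip(out, experts[e](x))]
    return out

# Toy experts: each one just scales its input by a different factor.
experts = [lambda x, s=s: [s * v for v in x] for s in (1.0, 2.0, 3.0, 4.0)]
print(top_k_moe([1.0, 2.0], [0.0, 0.0, 5.0, 5.0], experts))  # experts 2 and 3, equal weights
for row in sliding_window_causal_mask(5, 3):
    print("".join("x" if allowed else "." for allowed in row))
```

Stacking layers is what lets information travel further than one window: after k layers, a position can indirectly draw on tokens roughly k windows back.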

7) Orca 2: Teaching Small Language Models How to Reason -- noteworthy because it uses the well-known "distillation" technique, where a large language model such as GPT-4 is used to create training data for a small model.
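The data-generation side of distillation can be sketched in a few lines. The function and the `teacher` callable below are hypothetical stand-ins: in practice `teacher` would be a call to a large model such as GPT-4. The sketch also mirrors an idea the Orca 2 paper calls "prompt erasure". The teacher gets a detailed instruction on how to reason, but the student is trained on the bare task, so it has to learn to pick a strategy on its own.

```python
from typing import Callable, List, Tuple

def build_student_dataset(
    tasks: List[str],
    teacher: Callable[[str, str], str],  # hypothetical: (system_instruction, task) -> response
    strategy_instruction: str,
) -> List[Tuple[str, str]]:
    """Return (student_prompt, target_response) pairs for fine-tuning a small model."""
    dataset = []
    for task in tasks:
        # The large teacher model sees the detailed reasoning instruction...
        response = teacher(strategy_instruction, task)
        # ...but the instruction is erased from what the student is trained on.
        dataset.append((task, response))
    return dataset

# Stand-in teacher for demonstration only.
fake_teacher = lambda system, task: f"Step 1: read '{task}'. Step 2: answer it."
print(build_student_dataset(["What is 2 + 2?"], fake_teacher, "Think step by step."))
```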

8) ConvNets Match Vision Transformers at Scale -- noteworthy because if you thought vision transformers relegated the old-fashioned vision technique, convolutional neural networks, to the dustbin, think again.

9) Segment Anything -- noteworthy because it created the world's largest segmentation dataset to date, with over 1 billion masks on 11 million images, and because in only 6 months it has been cited 1,500 times and become part of self-driving car and medical imaging projects.

10) Emu Video: Factorizing Text-to-Video Generation by Explicit Image Conditioning -- this is about Emu Video, Meta's text-to-video system. I'm going to be telling you all about Google's system as soon as I find the time, and I don't know if I'll ever have time to circle back and check out Emu, but I encourage you all to check it out for yourselves.

Ten noteworthy AI research papers of 2023

#solidstatelife #ai #genai #llms #convnets #rlhf #computervision #segmentation