"LMRL Gym: Benchmarks for multi-turn reinforcement learning with language models."

One of their benchmarks was "Guess My City" so I decided to try that.

Me:

Guess my city.

ChatGPT:

Sure, I'd be happy to play a game of guessing your city! Please provide some clues or descriptions about your city, such as landmarks, geographical features, climate, or any other distinctive characteristics. The more details you can provide, the better I'll be able to make an accurate guess.

Me:

Landmarks: It has a building known as the "cash register". Geographical features: it has mountains to the west. Climate: cold winters and moderate summers with occasional hot days, with a lot of variation all year.

ChatGPT:

Based on your clues, I believe your city might be Denver, Colorado.

The "cash register" building you're referring to is likely the Wells Fargo Center, known for its unique architecture that resembles a cash register. The geographical feature of mountains to the west aligns with Denver's location at the eastern foot of the Rocky Mountains. Additionally, Denver is known for its cold winters and moderate summers, with a climate that can vary greatly, which matches your description of the climate in your city.

Is this correct?

Me:

Yes

ChatGPT:

Great! I'm glad I guessed correctly. Denver is a fascinating city with its unique blend of urban and natural landscapes. If you have any more questions or need information about Denver or anything else, feel free to ask!

I also tried this with Claude, Bard, and DeepSeek.

In the interest of brevity, I'm going to skip the full transcripts of the conversations.

Claude guessed Dallas, Atlanta, and San Francisco before guessing Denver. It thought "cash register" could refer to buildings in those cities and had to be given a hint that my city had mountains to the west. It didn't think to ask about geographical features right off the bat, which would have prompted me to tell it that.

Bard did something totally unexpected and ran Google Maps searches. This (surprisingly or unsurprisingly?) was unhelpful. It guessed Los Angeles because there is an "American Cash Register Company" there. It had to be told that "cash register" is a nickname.

DeepSeek (the Chinese large language model) initially just said it was sorry, but it didn't have enough information to guess my city. Unlike the others, it didn't immediately recognize "guess my city" as a game. I had to explain that it's a game where it is supposed to ask me questions in order to guess my city. When I followed up with the country and geography, it responded with encyclopedic information on the geography of the western United States. Once it properly understood that it was supposed to ask questions, it asked whether my city had a nickname. I said yes, "The Mile High City," and it immediately guessed Denver from there.

By the way, all the large language models gave a different name for Denver's "cash register" building (so called because it has the silhouette of a cash register if viewed from the proper angle), and I don't know which is correct because I don't know the true name of the building and can't be bothered to figure it out.

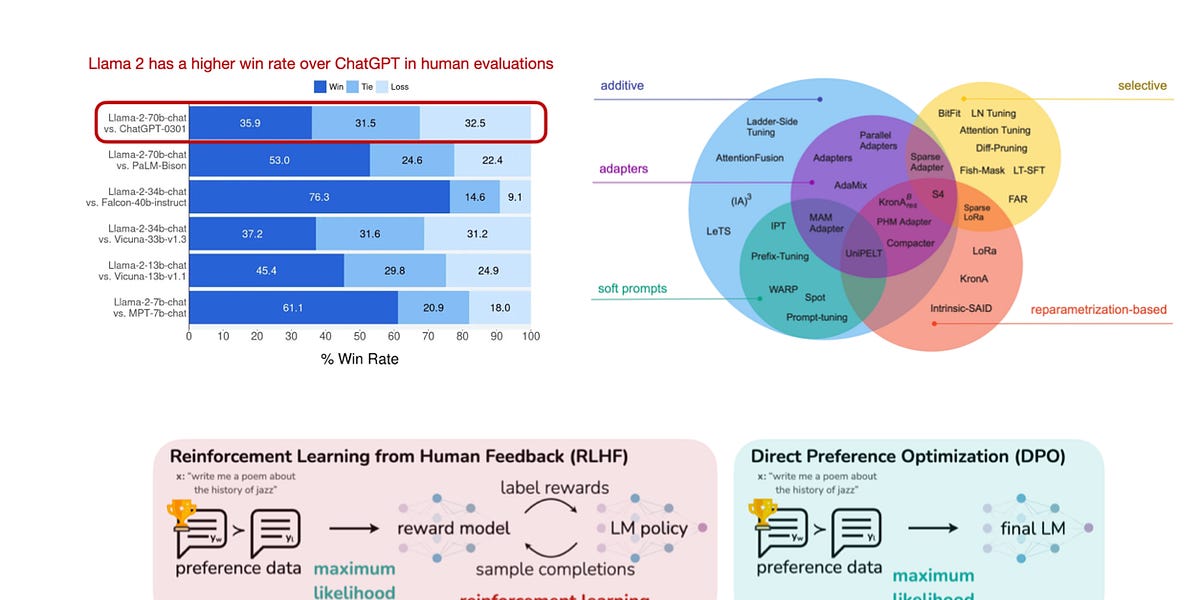

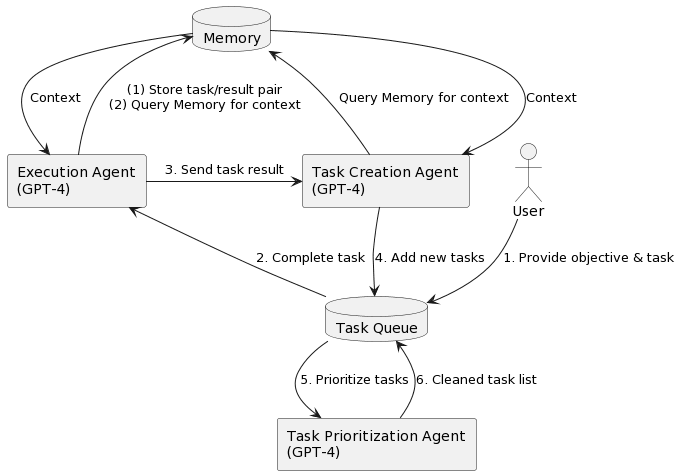

What this is all about is "evaluating capabilities enabled by reinforcement learning". As you may or may not know, what enables large language models to function as "chatbots" is not just their "predict the next token" language training (which is called self-supervised training, for historical reasons, don't worry if the term makes no sense), but an additional technique called reinforcement learning from human feedback (RLHF). This technique uses human ratings of model outputs to train a second model, the reward model, which is then flipped around and used as the reward signal for fine-tuning the original language model with reinforcement learning, which teaches it to behave "helpfully". This is why ChatGPT and its ilk come across as so eager to please you.

It's a complicated system, but what's important for the moment are the words "reinforcement learning". Reinforcement learning is the field of AI that led to the systems that beat humans at the Chinese game of Go, as well as chess and shogi, and those systems also beat Stockfish, the best human-made chess engine. Reinforcement learning works by getting input from an environment along with a reward signal: for example, the screen pixels of Atari games as the input, plus the score as the reward signal.

Anyway, these researchers got the idea that, since large language models are trained with reinforcement learning, they might design some tests that look for the characteristics of reinforcement learning and see if they can find evidence of reinforcement learning-generated behavior in large language models.
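
To make the "input from an environment along with a reward signal" idea concrete, here is a minimal sketch in Python. Everything in it is made up for illustration: the `Corridor` environment, the `train` function, and the choice of tabular Q-learning (a textbook algorithm, not what the LMRL Gym paper or the Go and chess systems use). The agent only ever sees a state and a reward, much like an Atari agent sees pixels and a score.

```python
import random


class Corridor:
    """Toy environment (hypothetical): states 0..4, start at 0, reward 1.0 on reaching 4."""

    GOAL = 4

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # Action 1 moves right, action 0 moves left; the wall at 0 blocks further left moves.
        move = 1 if action == 1 else -1
        self.state = max(0, self.state + move)
        done = self.state == self.GOAL
        reward = 1.0 if done else 0.0
        return self.state, reward, done


def train(episodes=300, alpha=0.5, gamma=0.9, seed=0):
    """Tabular Q-learning driven by a uniformly random behavior policy."""
    rng = random.Random(seed)
    env = Corridor()
    q = [[0.0, 0.0] for _ in range(Corridor.GOAL + 1)]
    for _ in range(episodes):
        state = env.reset()
        done = False
        while not done:
            action = rng.randrange(2)  # explore by acting at random
            next_state, reward, done = env.step(action)
            # Learning target: the reward now plus the discounted best value afterwards.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


q_table = train()
# Best-looking action in each non-goal state according to the learned values.
greedy_policy = [max((0, 1), key=lambda a: q_table[s][a]) for s in range(Corridor.GOAL)]
print(greedy_policy)
```

After training, the greedy policy should pick "right" (action 1) in every state, even though the only reward ever handed out is the single 1.0 at the end of the corridor.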

Here's the list of "core capabilities that reinforcement learning can enable in large language models" that they decided to look for:

"Strategic decision making. Reinforcement learning shines in goal-directed tasks that require multi-step planning and strategic decision making. Strategic decision-making can range from simple choices like asking follow-up questions to gather information (e.g., in the 20 Questions task), to complex strategy in chess."

"Complex language. Our benchmark includes realistic language and interaction scenarios, requiring large language models to combine their knowledge from pretraining to help solve tasks during reinforcement learning finetuning. Rather than focusing entirely on causal logic and strategy found in text games, several of our tasks specifically emphasize the use of realistic language."

"Credit assignment. In reinforcement learning, rewards are often delayed relative to the action that was pivotal to the outcome. For example, a seller agent might state a particularly compelling feature of the product and then, several turns later, complete a successful sale. Reinforcement learning must determine the statements that led to the good outcome, and reinforce them."

"Partial observability. In language tasks, the state consists of the entire history of tokens, and an agent may need to examine this entire context to infer the correct state. For example, the mental states of a speaker in a dialogue (e.g., whether the buyer is impatient in a selling task), previously observed facts in a guessing game, and other hidden variables might induce partial observability."

"Trajectory stitching. In a dataset with many suboptimal trajectories, it is necessary to join optimal actions from different suboptimal trajectories together to form the most optimal trajectory. An algorithm capable of trajectory stitching should be able to learn from optimal actions taken in unsuccessful trajectories and avoid suboptimal actions that occurred in successful trajectories."

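The "credit assignment" item above is easiest to see with numbers. This is a small sketch of the textbook discounted-return calculation, which is one common way to spread a delayed reward back over earlier turns; I'm not claiming it is what the paper's algorithms do. The function name `discounted_returns`, the four-turn seller dialogue, and the discount of 0.5 are all made up for illustration. Every earlier turn gets a share of the final reward, and turns closer to the sale get more.

```python
def discounted_returns(rewards, gamma):
    """For each turn, return the discounted sum of that turn's reward and all later ones."""
    returns = []
    running = 0.0
    # Walk backwards so each turn can reuse the total already computed for later turns.
    for reward in reversed(rewards):
        running = reward + gamma * running
        returns.append(running)
    returns.reverse()
    return returns


# Hypothetical seller dialogue: nothing is rewarded until the sale closes on turn 4.
turn_rewards = [0.0, 0.0, 0.0, 1.0]
print(discounted_returns(turn_rewards, gamma=0.5))  # [0.125, 0.25, 0.5, 1.0]
```
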
They came up with 8 "tasks", called "Maze", "Text-Based Navigation", "Wordle", "Chess", "Chess Endgames", "Twenty Questions", "Guess My City", and "Car Dealer". Yes, they really did come up with a text-based way of playing chess (there's actually a standardized notation for chess moves). They even used Stockfish to generate data. And yes, Wordle is exactly the online Wordle game you are familiar with, where you get 6 attempts to guess a hidden 5-letter word and, after each guess, you're told whether each letter you guessed is in the right position, in the word but not in the right position, or not in the hidden word at all.
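
The Wordle feedback rule described above is simple enough to sketch in a few lines of Python. This is my own illustration, not code from the benchmark: the function name `wordle_feedback` and the `G`/`Y`/`.` symbols are made up, and the handling of repeated letters (a letter only counts as "in the word elsewhere" as many times as it is left over in the hidden word) is an assumption based on how the usual online game behaves.

```python
from collections import Counter


def wordle_feedback(guess, hidden):
    """Return one symbol per letter: 'G' right position, 'Y' elsewhere in word, '.' absent."""
    if len(guess) != len(hidden):
        raise ValueError("guess and hidden word must have the same length")
    feedback = ["."] * len(guess)
    leftover = Counter()
    # First pass: mark exact matches and count the hidden letters that were not matched.
    for i, (g, h) in enumerate(zip(guess, hidden)):
        if g == h:
            feedback[i] = "G"
        else:
            leftover[h] += 1
    # Second pass: a non-matching letter is 'Y' only while unmatched copies remain.
    for i, g in enumerate(guess):
        if feedback[i] == "." and leftover[g] > 0:
            feedback[i] = "Y"
            leftover[g] -= 1
    return "".join(feedback)


print(wordle_feedback("crane", "crate"))  # GGG.G
print(wordle_feedback("allee", "apple"))  # GY..G
```

A language model playing this task has to read feedback like this across up to 6 turns and use it to narrow down the next guess.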

They have a grid (on page 4) showing, for each of the 8 tasks, which of the 5 "capabilities" it exercises (strategic decision making, complex language, credit assignment, partial observability, and trajectory stitching). The task I tried above, "Guess My City", exercises more than most: the grid marks the first four (strategic decision making, complex language, credit assignment, and partial observability), but maybe not the last one, trajectory stitching.

#solidstatelife #ai #genai #llms #rlhf #reinforcementlearning