The 2024 AI Index Report from Stanford's Human-Centered Artificial Intelligence lab.

It says between 2010 and 2022, the number of AI research papers per year nearly tripled, from 88,000 to 240,000. So if you're wondering why I'm always behind in my reading of AI research papers, well, there's your answer.

Besides that, I'm just going to quote from the highlights in the report itself, because it seems I can't improve on them, at least not in short order and I've decided I'd like to get this report out to you all quickly. I'll continue browsing through the charts & graphs in all the chapters, but for now I'll just give you their highlights and you can decide if you want to download the report and read it or part of it more thoroughly.

"Chapter 1: Research and Development"

"1. Industry continues to dominate frontier AI research. In 2023, industry produced 51 notable machine learning models, while academia contributed only 15. There were also 21 notable models resulting from industry-academia collaborations in 2023, a new high."

"2. More foundation models and more open foundation models. In 2023, a total of 149 foundation models were released, more than double the amount released in 2022. Of these newly released models, 65.7% were open-source, compared to only 44.4% in 2022 and 33.3% in 2021."

"3. Frontier models get way more expensive. According to AI Index estimates, the training costs of state-of-the-art AI models have reached unprecedented levels. For example, OpenAI's GPT-4 used an estimated $78 million worth of compute to train, while Google's Gemini Ultra cost $191 million for compute."

"4. The United States leads China, the EU, and the UK as the leading source of top AI models. In 2023, 61 notable AI models originated from US-based institutions, far outpacing the European Union's 21 and China's 15."

"5. The number of AI patents skyrockets. From 2021 to 2022, AI patent grants worldwide increased sharply by 62.7%. Since 2010, the number of granted AI patents has increased more than 31 times."

"6. China dominates AI patents. In 2022, China led global AI patent origins with 61.1%, significantly outpacing the United States, which accounted for 20.9% of AI patent origins. Since 2010, the US share of AI patents has decreased from 54.1%."

"7. Open-source AI research explodes. Since 2011, the number of AI-related projects on GitHub has seen a consistent increase, growing from 845 in 2011 to approximately 1.8 million in 2023. Notably, there was a sharp 59.3% rise in the total number of GitHub AI projects in 2023 alone. The total number of stars for AI-related projects on GitHub also significantly increased in 2023, more than tripling from 4.0 million in 2022 to 12.2 million."

"8. The number of AI publications continues to rise. Between 2010 and 2022, the total number of AI publications nearly tripled, rising from approximately 88,000 in 2010 to more than 240,000 in 2022. The increase over the last year was a modest 1.1%."

"Chapter 2: Technical Performance"

"1. AI beats humans on some tasks, but not on all. AI has surpassed human performance on several benchmarks, including some in image classification, visual reasoning, and English understanding. Yet it trails behind on more complex tasks like competition-level mathematics, visual commonsense reasoning and planning."

"2. Here comes multimodal AI. Traditionally AI systems have been limited in scope, with language models excelling in text comprehension but faltering in image processing, and vice versa. However, recent advancements have led to the development of strong multimodal models, such as Google's Gemini and OpenAI's GPT-4. These models demonstrate flexibility and are capable of handling images and text and, in some instances, can even process audio."

"3. Harder benchmarks emerge. AI models have reached performance saturation on established benchmarks such as ImageNet, SQuAD, and SuperGLUE, prompting researchers to develop more challenging ones. In 2023, several challenging new benchmarks emerged, including SWE-bench for coding, HEIM for image generation, MMMU for general reasoning, MoCa for moral reasoning, AgentBench for agent-based behavior, and HaluEval for hallucinations."

"4. Better AI means better data which means ... even better AI. New AI models such as SegmentAnything and Skoltech are being used to generate specialized data for tasks like image segmentation and 3D reconstruction. Data is vital for AI technical improvements. The use of AI to create more data enhances current capabilities and paves the way for future algorithmic improvements, especially on harder tasks."

"5. Human evaluation is in. With generative models producing high-quality text, images, and more, benchmarking has slowly started shifting toward incorporating human evaluations like the Chatbot Arena Leaderboard rather than computerized rankings like ImageNet or SQuAD. Public sentiment about AI is becoming an increasingly important consideration in tracking AI progress."

"6. Thanks to LLMs, robots have become more flexible. The fusion of language modeling with robotics has given rise to more flexible robotic systems like PaLM-E and RT-2. Beyond their improved robotic capabilities, these models can ask questions, which marks a significant step toward robots that can interact more effectively with the real world."

"7. More technical research in agentic AI. Creating AI agents, systems capable of autonomous operation in specific environments, has long challenged computer scientists. However, emerging research suggests that the performance of autonomous AI agents is improving. Current agents can now master complex games like Minecraft and effectively tackle real-world tasks, such as online shopping and research assistance."

"8. Closed LLMs significantly outperform open ones. On 10 select AI benchmarks, closed models outperformed open ones, with a median performance advantage of 24.2%. Differences in the performance of closed and open models carry important implications for AI policy debates."

"Chapter 3: Responsible AI"

"1. Robust and standardized evaluations for LLM responsibility are seriously lacking. New research from the AI Index reveals a significant lack of standardization in responsible AI reporting. Leading developers, including OpenAI, Google, and Anthropic, primarily test their models against different responsible AI benchmarks. This practice complicates efforts to systematically compare the risks and limitations of top AI models."

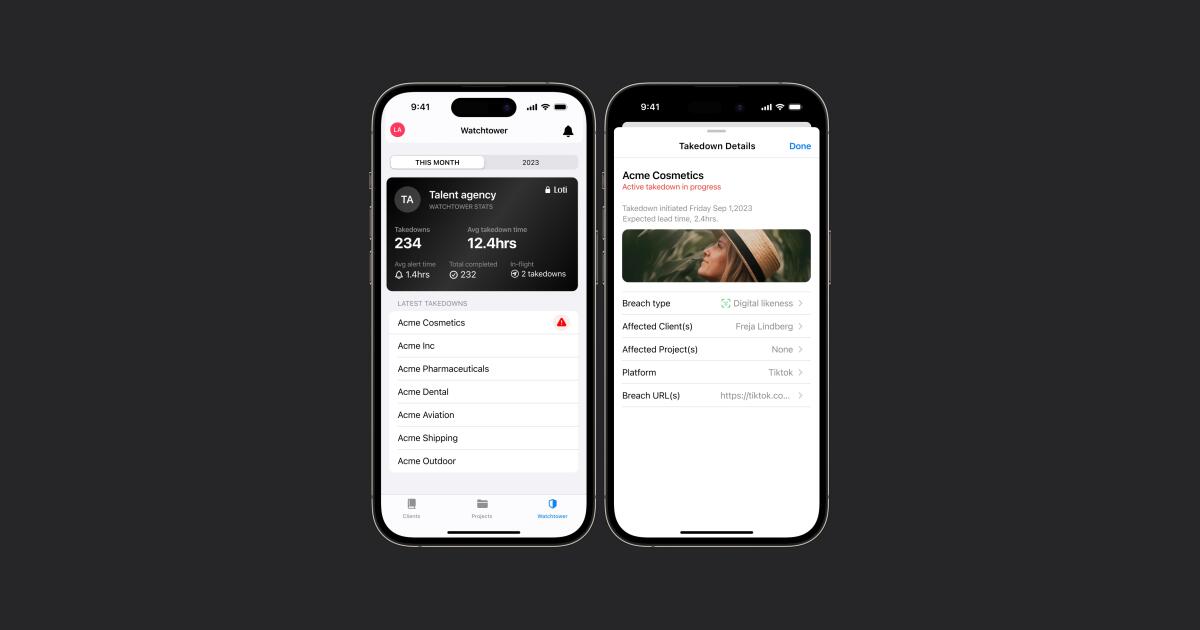

"2. Political deepfakes are easy to generate and difficult to detect. Political deepfakes are already affecting elections across the world, with recent research suggesting that existing AI deepfake methods perform with varying levels of accuracy. In addition, new projects like CounterCloud demonstrate how easily AI can create and disseminate fake content."

"3. Researchers discover more complex vulnerabilities in LLMs. Previously, most efforts to red team AI models focused on testing adversarial prompts that intuitively made sense to humans. This year, researchers found less obvious strategies to get LLMs to exhibit harmful behavior, like asking the models to infinitely repeat random words."

"4. Risks from AI are becoming a concern for businesses across the globe. A global survey on responsible AI highlights that companies' top AI-related concerns include privacy, data security, and reliability. The survey shows that organizations are beginning to take steps to mitigate these risks. Globally, however, most companies have so far only mitigated a small portion of these risks."

"5. LLMs can output copyrighted material. Multiple researchers have shown that the generative outputs of popular LLMs may contain copyrighted material, such as excerpts from The New York Times or scenes from movies. Whether such output constitutes copyright violations is becoming a central legal question."

"6. AI developers score low on transparency, with consequences for research. The newly introduced Foundation Model Transparency Index shows that AI developers lack transparency, especially regarding the disclosure of training data and methodologies. This lack of openness hinders efforts to further understand the robustness and safety of AI systems."

"7. Extreme AI risks are difficult to analyze. Over the past year, a substantial debate has emerged among AI scholars and practitioners regarding the focus on immediate model risks, like algorithmic discrimination, versus potential long-term existential threats. It has become challenging to distinguish which claims are scientifically founded and should inform policymaking. This difficulty is compounded by the tangible nature of already present short-term risks in contrast with the theoretical nature of existential threats."

"8. The number of AI incidents continues to rise. According to the AI Incident Database, which tracks incidents related to the misuse of AI, 123 incidents were reported in 2023, a 32.3 percentage point increase from 2022. Since 2013, AI incidents have grown by over twentyfold. A notable example includes AI-generated, sexually explicit deepfakes of Taylor Swift that were widely shared online."

"9. ChatGPT is politically biased. Researchers find a significant bias in ChatGPT toward Democrats in the United States and the Labour Party in the UK. This finding raises concerns about the tool's potential to influence users' political views, particularly in a year marked by major global elections."

"Chapter 4: Economy"

"1. Generative AI investment skyrockets. Despite a decline in overall AI private investment last year, funding for generative AI surged, nearly octupling from 2022 to reach $25.2 billion. Major players in the generative AI space, including OpenAI, Anthropic, Hugging Face, and Inflection, reported substantial fundraising rounds."

"2. Already a leader, the United States pulls even further ahead in AI private investment. In 2023, the United States saw AI investments reach $67.2 billion, nearly 8.7 times more than China, the next highest investor. While private AI investment in China and the European Union, including the United Kingdom, declined by 44.2% and 14.1%, respectively, since 2022, the United States experienced a notable increase of 22.1% in the same time frame."

"3. Fewer AI jobs in the United States and across the globe. In 2022, AI-related positions made up 2.0% of all job postings in America, a figure that decreased to 1.6% in 2023. This decline in AI job listings is attributed to fewer postings from leading AI firms and a reduced proportion of tech roles within these companies."

"4. AI decreases costs and increases revenues. A new McKinsey survey reveals that 42% of surveyed organizations report cost reductions from implementing AI (including generative AI), and 59% report revenue increases. Compared to the previous year, there was a 10 percentage point increase in respondents reporting decreased costs, suggesting AI is driving significant business efficiency gains."

"5. Total AI private investment declines again, while the number of newly funded AI companies increases. Global private AI investment has fallen for the second year in a row, though less than the sharp decrease from 2021 to 2022. The count of newly funded AI companies spiked to 1,812, up 40.6% from the previous year."

"6. AI organizational adoption ticks up. A 2023 McKinsey report reveals that 55% of organizations now use AI (including generative AI) in at least one business unit or function, up from 50% in 2022 and 20% in 2017."

"7. China dominates industrial robotics. Since surpassing Japan in 2013 as the leading installer of industrial robots, China has significantly widened the gap with the nearest competitor nation. In 2013, China's installations accounted for 20.8% of the global total, a share that rose to 52.4% by 2022."

"8. Greater diversity in robot installations. In 2017, collaborative robots represented a mere 2.8% of all new industrial robot installations, a figure that climbed to 9.9% by 2022. Similarly, 2022 saw a rise in service robot installations across all application categories, except for medical robotics. This trend indicates not just an overall increase in robot installations but also a growing emphasis on deploying robots for human-facing roles."

"9. The data is in: AI makes workers more productive and leads to higher quality work. In 2023, several studies assessed AI's impact on labor, suggesting that AI enables workers to complete tasks more quickly and to improve the quality of their output. These studies also demonstrated AI's potential to bridge the skill gap between low- and high-skilled workers. Still, other studies caution that using AI without proper oversight can lead to diminished performance."

"10. Fortune 500 companies start talking a lot about AI, especially generative AI. In 2023, AI was mentioned in 394 earnings calls (nearly 80% of all Fortune 500 companies), a notable increase from 266 mentions in 2022. Since 2018, mentions of AI in Fortune 500 earnings calls have nearly doubled. The most frequently cited theme, appearing in 19.7% of all earnings calls, was generative AI."

"Chapter 5: Science and Medicine"

"1. Scientific progress accelerates even further, thanks to AI. In 2022, AI began to advance scientific discovery. 2023, however, saw the launch of even more significant science-related AI applications-- from AlphaDev, which makes algorithmic sorting more efficient, to GNoME, which facilitates the process of materials discovery."

"2. AI helps medicine take significant strides forward. In 2023, several significant medical systems were launched, including EVEscape, which enhances pandemic prediction, and AlphaMissence, which assists in AI-driven mutation classification. AI is increasingly being utilized to propel medical advancements."

"3. Highly knowledgeable medical AI has arrived. Over the past few years, AI systems have shown remarkable improvement on the MedQA benchmark, a key test for assessing AI's clinical knowledge. The standout model of 2023, GPT-4 Medprompt, reached an accuracy rate of 90.2%, marking a 22.6 percentage point increase from the highest score in 2022. Since the benchmark's introduction in 2019, AI performance on MedQA has nearly tripled."

"4. The FDA approves more and more AI-related medical devices. In 2022, the FDA approved 139 AI-related medical devices, a 12.1% increase from 2021. Since 2012, the number of FDA-approved AI-related medical devices has increased by more than 45-fold. AI is increasingly being used for real-world medical purposes."

"Chapter 6: Education"

"1. The number of American and Canadian CS bachelor's graduates continues to rise, new CS master's graduates stay relatively flat, and PhD graduates modestly grow. While the number of new American and Canadian bachelor's graduates has consistently risen for more than a decade, the number of students opting for graduate education in CS has flattened. Since 2018, the number of CS master's and PhD graduates has slightly declined."

"2. The migration of AI PhDs to industry continues at an accelerating pace. In 2011, roughly equal percentages of new AI PhDs took jobs in industry (40.9%) and academia (41.6%). However, by 2022, a significantly larger proportion (70.7%) joined industry after graduation compared to those entering academia (20.0%). Over the past year alone, the share of industry-bound AI PhDs has risen by 5.3 percentage points, indicating an intensifying brain drain from universities into industry."

"3. Less transition of academic talent from industry to academia. In 2019, 13% of new AI faculty in the United States and Canada were from industry. By 2021, this figure had declined to 11%, and in 2022, it further dropped to 7%. This trend indicates a progressively lower migration of high-level AI talent from industry into academia."

"4. CS education in the United States and Canada becomes less international. Proportionally fewer international CS bachelor's, master's, and PhDs graduated in 2022 than in 2021. The drop in international students in the master's category was especially pronounced."

"5. More American high school students take CS courses, but access problems remain. In 2022, 201,000 AP CS exams were administered. Since 2007, the number of students taking these exams has increased more than tenfold. However, recent evidence indicates that students in larger high schools and those in suburban areas are more likely to have access to CS courses."

"6. AI-related degree programs are on the rise internationally. The number of English-language, AI-related postsecondary degree programs has tripled since 2017, showing a steady annual increase over the past five years. Universities worldwide are offering more AI-focused degree programs."

"7. The United Kingdom and Germany lead in European informatics, CS, CE, and IT graduate production. The United Kingdom and Germany lead Europe in producing the highest number of new informatics, CS, CE, and information bachelor's, master's, and PhD graduates. On a per capita basis, Finland leads in the production of both bachelor's and PhD graduates, while Ireland leads in the production of master's graduates."

"Chapter 7: Policy and Governance"

"1. The number of AI regulations in the United States sharply increases. The number of AI-related regulations has risen significantly in the past year and over the last five years. In 2023, there were 25 AI-related regulations, up from just one in 2016. Last year alone, the total number of AI-related regulations grew by 56.3%."

"2. The United States and the European Union advance landmark AI policy action. In 2023, policymakers on both sides of the Atlantic put forth substantial proposals for advancing AI regulation The European Union reached a deal on the terms of the AI Act, a landmark piece of legislation enacted in 2024. Meanwhile, President Biden signed an Executive Order on AI, the most notable AI policy initiative in the United States that year."

"3. AI captures US policymaker attention. The year 2023 witnessed a remarkable increase in AI-related legislation at the federal level, with 181 bills proposed, more than double the 88 proposed in 2022."

"4. Policymakers across the globe cannot stop talking about AI. Mentions of AI in legislative proceedings across the globe have nearly doubled, rising from 1,247 in 2022 to 2,175 in 2023. AI was mentioned in the legislative proceedings of 49 countries in 2023. Moreover, at least one country from every continent discussed AI in 2023, underscoring the truly global reach of AI policy discourse."

"5. More regulatory agencies turn their attention toward AI. The number of US regulatory agencies issuing AI regulations increased to 21 in 2023 from 17 in 2022, indicating a growing concern over AI regulation among a broader array of American regulatory bodies. Some of the new regulatory agencies that enacted AIrelated regulations for the first time in 2023 include the Department of Transportation, the Department of Energy, and the Occupational Safety and Health Administration."

"Chapter 8: Diversity"

"1. US and Canadian bachelor's, master's, and PhD CS students continue to grow more ethnically diverse. While white students continue to be the most represented ethnicity among new resident graduates at all three levels, the representation from other ethnic groups, such as Asian, Hispanic, and Black or African American students, continues to grow. For instance, since 2011, the proportion of Asian CS bachelor's degree graduates has increased by 19.8 percentage points, and the proportion of Hispanic CS bachelor's degree graduates has grown by 5.2 percentage points."

"2. Substantial gender gaps persist in European informatics, CS, CE, and IT graduates at all educational levels. Every surveyed European country reported more male than female graduates in bachelor's, master's, and PhD programs for informatics, CS, CE, and IT. While the gender gaps have narrowed in most countries over the last decade, the rate of this narrowing has been slow."

"3. US K12 CS education is growing more diverse, reflecting changes in both gender and ethnic representation. The proportion of AP CS exams taken by female students rose from 16.8% in 2007 to 30.5% in 2022. Similarly, the participation of Asian, Hispanic/Latino/Latina, and Black/African American students in AP CS has consistently increased year over year."

"Chapter 9: Public Opinion"

"1. People across the globe are more cognizant of AI's potential impact--and more nervous. A survey from Ipsos shows that, over the last year, the proportion of those who think AI will dramatically affect their lives in the next three to five years has increased from 60% to 66%. Moreover, 52% express nervousness toward AI products and services, marking a 13 percentage point rise from 2022. In America, Pew data suggests that 52% of Americans report feeling more concerned than excited about AI, rising from 38% in 2022."

"2. AI sentiment in Western nations continues to be low, but is slowly improving. In 2022, several developed Western nations, including Germany, the Netherlands, Australia, Belgium, Canada, and the United States, were among the least positive about AI products and services. Since then, each of these countries has seen a rise in the proportion of respondents acknowledging the benefits of AI, with the Netherlands experiencing the most significant shift."

"3. The public is pessimistic about AI's economic impact. In an Ipsos survey, only 37% of respondents feel AI will improve their job. Only 34% anticipate AI will boost the economy, and 32% believe it will enhance the job market."

"4. Demographic differences emerge regarding AI optimism. Significant demographic differences exist in perceptions of AI's potential to enhance livelihoods, with younger generations generally more optimistic. For instance, 59% of Gen Z respondents believe AI will improve entertainment options, versus only 40% of baby boomers. Additionally, individuals with higher incomes and education levels are more optimistic about AI's positive impacts on entertainment, health, and the economy than their lower-income and less-educated counterparts."

"5. ChatGPT is widely known and widely used. An international survey from the University of Toronto suggests that 63% of respondents are aware of ChatGPT. Of those aware, around half report using ChatGPT at least once weekly."

AI Index Report 2024 -- Artificial Intelligence Index

#solidstatelife #ai #genai

/static.texastribune.org/media/files/845135797d4460298c4c03f52b0fc261/0913%20Odessa%20School%20Tours%20EH%2021.jpg)