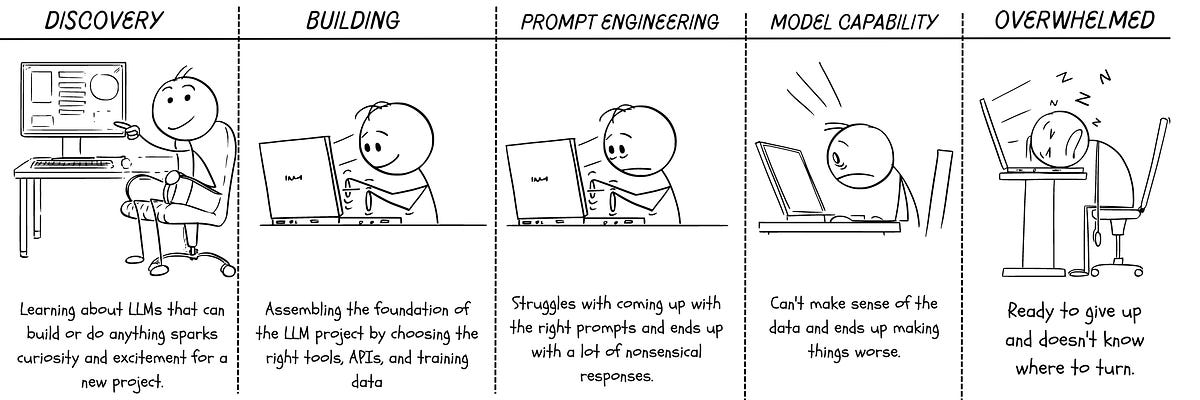

"'It worked when I prompted it' or the challenges of building a large language model (LLM) product".

"In no particular order, here are the major challenges we have faced when building this product."

"One of the significant challenges with using LLM APIs is the lack of SLAs or commitments on endpoint uptime and latency from the API provider."

"Prompt engineering, which involves crafting prompts for the model, is another challenge, as results using the same prompt can be unpredictable."

"Complex products with chains of prompts can further increase inconsistencies, leading to incorrect and irrelevant outputs, often called hallucinations."

"Another significant challenge is the lack of adequate evaluation metrics for the output of the Language Model."

"An incorrect result in the middle of the chain can cause the remaining chain to go wildly off track."

"Our biggest problem that led to the most delays? API endpoint deprecation."

"Trust and security issues also pose a challenge for deploying Language Models."

"The next trust issue is knowing what data was used to train these models."

"Finally, attacks on Language Models pose another challenge, as malicious actors can trick them into outputting harmful or inaccurate results."

They go on to provide a list of "Best practices for building LLM products", categorized as "finetuning and training", "prompt engineering", "vector databases", and "chains, agents, watchers".
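
Two of the quoted challenges, unreliable endpoints and a bad mid-chain result derailing everything after it, can be made concrete with a small sketch. This is my own illustration rather than anything from the article: `call_with_retry`, `run_chain`, `StepFailed` and the stand-in "model" functions are hypothetical names, and a real product would call an actual LLM API where the fake steps are. The idea is to check each step's output before passing it on, and to retry with backoff when a call fails or returns something invalid.

```python
import time
from typing import Callable, Optional, Sequence, Tuple

Step = Tuple[Callable[[str], str], Callable[[str], bool]]


class StepFailed(Exception):
    """Raised when a chain step never produces a valid output."""


def call_with_retry(
    fn: Callable[[str], str],
    prompt: str,
    validate: Callable[[str], bool],
    attempts: int = 3,
    base_delay: float = 0.0,
) -> str:
    """Call fn(prompt), retrying on exceptions or invalid output."""
    last_error: Optional[Exception] = None
    for attempt in range(attempts):
        try:
            output = fn(prompt)
        except Exception as exc:  # e.g. a timeout or server error from the provider
            last_error = exc
        else:
            if validate(output):
                return output
            last_error = ValueError(f"invalid output: {output!r}")
        if attempt < attempts - 1:
            # Exponential backoff between attempts (zero by default for the demo).
            time.sleep(base_delay * (2 ** attempt))
    raise StepFailed(str(last_error))


def run_chain(steps: Sequence[Step], first_input: str) -> str:
    """Feed each validated output into the next step; stop at the first failure."""
    value = first_input
    for fn, validate in steps:
        value = call_with_retry(fn, value, validate)
    return value


# Hypothetical stand-ins for LLM calls: the first one fails once, then succeeds.
calls = {"n": 0}


def flaky_upper(text: str) -> str:
    calls["n"] += 1
    if calls["n"] == 1:
        raise TimeoutError("simulated endpoint timeout")
    return text.upper()


def add_exclaim(text: str) -> str:
    return text + "!"


result = run_chain(
    [(flaky_upper, str.isupper), (add_exclaim, lambda s: s.endswith("!"))],
    "hello",
)
print(result)
```

Validation here is deliberately crude (a predicate per step). It only catches outputs that are malformed in a checkable way, and does nothing for the harder problem the article raises of evaluating whether a well-formed answer is actually correct.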
