Sparks of artificial general intelligence (AGI): Early experiments with GPT-4. So, I still haven't finished reading the "Sparks of AGI" paper, but I found this video of a talk by Sébastien Bubeck, who led the team that did the research. So you can get a summary of the research from one of the people who actually did it instead of from me.

He describes how they invented tests of basic knowledge about how the world works that would be exceedingly unlikely to appear anywhere in the training data, so GPT-4 couldn't just regurgitate something it had read somewhere. One example they came up with: asking it how to stack a book, 9 eggs, a laptop, a bottle, and a nail onto each other in a stable manner.

They also invented "theory of mind" tests. For example: John and Mark both see John put the cat in a basket. Then John leaves the room and goes to school, and Mark takes the cat out of the basket and puts it in a box. Where do John and Mark think the cat is? GPT-4 not only answers that, but, because the question was worded to ask only what "they" think, it also says where the cat thinks it is.
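
To make the logic of the test concrete, here is a toy model in Python of the reasoning a correct answer has to follow. It isn't from the paper or the talk, and it rests on one simplifying assumption: each character believes the cat is wherever they last saw it put. The cat is present for both moves, so under this rule it ends up "knowing" it's in the box. The function name `track_beliefs` is made up for illustration.

```python
# Toy model of the John/Mark/cat false-belief scenario (an illustrative
# assumption, not the paper's method): each agent believes the cat is wherever
# they last *saw* it placed. Agents absent for an event keep their old belief.


def track_beliefs(agents, events):
    """Return each agent's belief about the cat's location after all events.

    events: list of (new_location, observers) tuples, in chronological order.
    """
    beliefs = {agent: None for agent in agents}
    for location, observers in events:
        for agent in observers:
            beliefs[agent] = location
    return beliefs


agents = ["John", "Mark", "cat"]
events = [
    # John puts the cat in the basket while everyone is in the room.
    ("basket", {"John", "Mark", "cat"}),
    # John has left for school; Mark moves the cat to the box.
    ("box", {"Mark", "cat"}),
]

print(track_beliefs(agents, events))
# {'John': 'basket', 'Mark': 'box', 'cat': 'box'}
```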

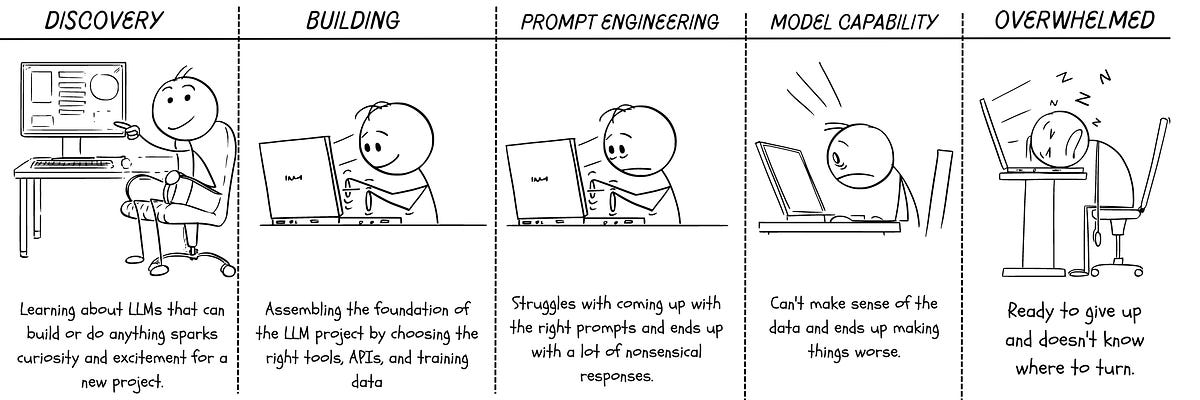

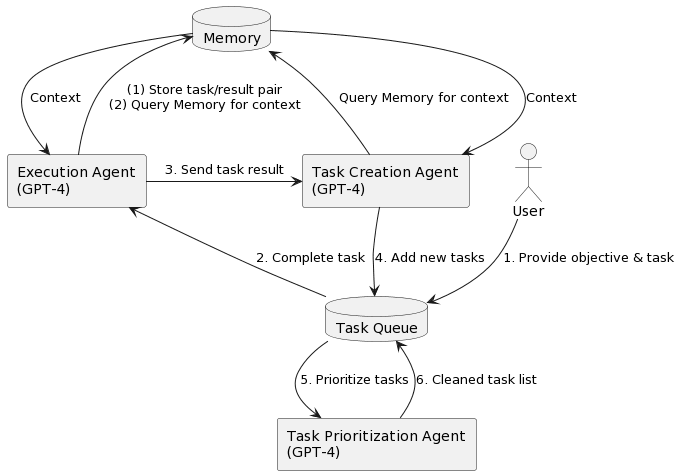

Next he gets into definitions of intelligence that date back to the 1990s and looks at how well GPT-4 measures up against them. This is the main focus of the paper. These definitions include the ability to reason, plan, solve problems, think abstractly, comprehend complex ideas, learn quickly, and learn from experience. GPT-4 succeeds at some of these but not others. For example, GPT-4 doesn't do planning. (This was before AutoGPT, for what it's worth.) And GPT-4 doesn't learn from experience: when you interact with it, it relies on its training data, and your interactions with it don't become part of that. (It does have a buffer that acts as short-term memory, which keeps the back-and-forth chat interaction coherent.)
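
Here's a rough sketch, in Python, of what "no learning from experience, but a short-term buffer" means in practice. It's a hypothetical illustration, not how OpenAI actually implements it: the model itself never changes, and on each turn only the most recent messages that fit in a fixed budget get sent along with the new one. Tokens are approximated by counting words, which is an assumption (real systems count subword tokens), and `build_context` is a made-up name.

```python
# Toy model of a chat "short-term memory": the model's weights never change
# during a conversation; only a rolling transcript is re-sent with each turn.
# Tokens are approximated here by whitespace-separated words (an assumption).


def build_context(history, new_message, max_tokens):
    """Return the most recent messages (including new_message) that fit in max_tokens.

    Older messages are dropped first, so anything that falls out of the
    window is simply forgotten.
    """
    messages = history + [new_message]
    context = []
    used = 0
    for message in reversed(messages):
        cost = len(message.split())
        if used + cost > max_tokens:
            break
        context.append(message)
        used += cost
    context.reverse()  # restore chronological order
    return context


history = [
    "user: my name is Ada",
    "assistant: nice to meet you Ada",
    "user: what is 2 + 2",
    "assistant: 4",
]
print(build_context(history, "user: what is my name", max_tokens=12))
# ['assistant: 4', 'user: what is my name']
```

With this small budget, the message where the user gave their name has already fallen out of the window, so a model that only sees this context has no way to answer.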

"Can you write a proof that there are infinitely many primes, with every line that rhymes?" Just a "warm-up" question.

"Draw a unicorn in TikZ." This is supposed to be hard because it's difficult to tell what visual image a given piece of TikZ code will produce without being able to "see" it. TikZ is, apparently (I'd never heard of it before), an annoyingly cryptic programming language for drawing vector graphics, intended to be used inside LaTeX, a language for typesetting mathematical notation. This was before GPT-4 had its "multimodal" vision input added. It managed to come up with a very cartoony "unicorn", suggesting it had some ability to "see" even though it was only a language model.

"Can you write a 3D game in HTML with Javascript, I want: There are three avatars, each is a sphere. The player controls its avatar using arrow keys to move. The enemy avatar is trying to catch the player. The defender avatar is trying to block the enemy. There are also random obstacles as cubes spawned randomly at the beginning and moving randomly. The avatars cannot cross those cubes. The player moves on a 2D plane surrounded by walls that he cannot cross. The wall should cover the boundary of the entire plane. Add physics to the environment using cannon. If the enemy catches the player, the game is over. Plot the trajectories of all the three avatars."

Going from ChatGPT (GPT-3.5) to GPT-4, the result goes from a 2D game to the 3D game that was asked for.
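
For a sense of what the game prompt actually asks for, here is a stripped-down sketch of the chase logic in Python rather than JavaScript. It's hypothetical and leaves out the 3D rendering, the obstacle cubes, and the cannon physics engine. The movement rules are assumptions: the enemy heads straight for the player, the defender heads for the midpoint between them, and an enemy touching the defender is blocked for that step. The plane size and speeds are made up too.

```python
import math

ARENA = 10.0  # assumed half-width of the square plane; the walls sit at +/-ARENA


def clamp(pos):
    """Keep a position inside the walled plane (avatars can't cross the walls)."""
    return (max(-ARENA, min(ARENA, pos[0])), max(-ARENA, min(ARENA, pos[1])))


def step_toward(pos, target, speed):
    """Move pos up to `speed` units in a straight line toward target."""
    dx, dy = target[0] - pos[0], target[1] - pos[1]
    dist = math.hypot(dx, dy)
    if dist <= speed:
        return clamp(target)
    return clamp((pos[0] + dx / dist * speed, pos[1] + dy / dist * speed))


def simulate(player, enemy, defender, player_moves, radius=0.5):
    """Run the chase; return (caught, paths), where paths holds each avatar's trajectory."""
    paths = {"player": [player], "enemy": [enemy], "defender": [defender]}
    for dx, dy in player_moves:  # one arrow-key move per step
        player = clamp((player[0] + dx, player[1] + dy))
        # Assumed blocking rule: an enemy touching the defender can't move this step.
        if math.dist(enemy, defender) >= radius:
            enemy = step_toward(enemy, player, speed=0.8)
        # The defender heads for the midpoint between enemy and player.
        midpoint = ((enemy[0] + player[0]) / 2, (enemy[1] + player[1]) / 2)
        defender = step_toward(defender, midpoint, speed=1.0)
        paths["player"].append(player)
        paths["enemy"].append(enemy)
        paths["defender"].append(defender)
        if math.dist(player, enemy) < radius:
            return True, paths  # the enemy caught the player: game over
    return False, paths


caught, paths = simulate((0.0, 0.0), (3.0, 0.0), (-9.0, -9.0), [(0, 0)] * 10)
print(caught, len(paths["enemy"]))  # True 5
```

The recorded `paths` are what the "plot the trajectories" part of the prompt would draw.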

He then gets into the coding interview questions. This is where GPT-4's intelligence really shines. It solved 100% of Amazon's On-Site Interview sample questions, 10 out of 10 problems, in 3 minutes 59 seconds out of the allotted 2-hour time slot. (Most of that time was Yi Zhang cutting and pasting back and forth.)

The paper goes far beyond the talk on this. It describes LeetCode's Interview Assessment platform, which provides simulated coding interviews for software engineering positions at major tech companies. GPT-4 solved all the questions from all three rounds of interviews (titled online assessment, phone interview, and on-site interview) using only 10 minutes in total, out of 4.5 hours allotted.

They challenged it to do a visualization of IMDb data. They challenged it to do a Pyplot (Matplotlib) visualization of a math formula with vague instructions about colors, and it created an impressive visualization. They challenged it to create a GUI for a Python program that draws arrows, curves, rectangles, etc.
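
The paper's exact formula isn't reproduced here, so as a stand-in, here's a hypothetical example of the kind of thing that Pyplot challenge involves: a family of curves defined by a formula, each drawn in a color taken from a gradient. The spiral formula, the function names, and the "viridis" colormap are all assumptions for illustration. Matplotlib is only imported inside the plotting function, so the sampling code works even without it installed.

```python
import math

# Hypothetical formula (not the one from the paper): a family of shrinking
# spirals x(t) = (1 - t) cos(k t), y(t) = (1 - t) sin(k t), one curve per k.


def spiral(k, n=200):
    """Sample one curve of the family at n evenly spaced t in [0, 1] (n >= 2)."""
    ts = [i / (n - 1) for i in range(n)]
    xs = [(1 - t) * math.cos(k * t) for t in ts]
    ys = [(1 - t) * math.sin(k * t) for t in ts]
    return xs, ys


def plot_family(ks, filename="spirals.png"):
    """Draw one spiral per k, colored along a gradient, and save to filename."""
    # Imported here so the sampling code above runs without matplotlib.
    import matplotlib
    matplotlib.use("Agg")  # render straight to a file, no display needed
    import matplotlib.pyplot as plt

    cmap = plt.get_cmap("viridis")
    fig, ax = plt.subplots()
    for i, k in enumerate(ks):
        xs, ys = spiral(k)
        # Color gradient: map each curve's index to a position on the colormap.
        ax.plot(xs, ys, color=cmap(i / max(len(ks) - 1, 1)))
    ax.set_aspect("equal")
    fig.savefig(filename)
    plt.close(fig)
```

With Matplotlib installed, calling `plot_family([2 * math.pi * j for j in range(1, 6)])` should write five spirals, shading from dark purple to yellow, to `spirals.png`.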

They challenged GPT-4 to give instructions for finding the password in a macOS executable, which it did by telling the user to use a debugger called LLDB and a Python script. (The password was simply hardcoded into the file, so it wasn't protected using modern cryptographic techniques.)
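
Why a hardcoded password is weak: a literal string stored in an executable can often be spotted without a debugger at all, just by scanning the file for runs of printable characters, the way the Unix `strings` tool does. That's a different and cruder technique than the LLDB approach GPT-4 suggested, sketched here in Python. The byte string is a made-up stand-in for a real binary, and `printable_strings` is a hypothetical name.

```python
import re

# Like the Unix `strings` tool: find runs of printable ASCII in binary data.
# A password stored as a plain literal in an executable shows up this way.


def printable_strings(data: bytes, min_len: int = 4):
    """Return runs of at least min_len printable ASCII bytes, decoded to str."""
    pattern = rb"[\x20-\x7e]{%d,}" % min_len
    return [m.group().decode("ascii") for m in re.finditer(pattern, data)]


# Made-up stand-in for an executable's bytes: junk around two string literals.
fake_binary = b"\x00\x01\xcf\xfa\xed\xfeEnter password:\x00hunter2-secret\x00\x90\x90"
print(printable_strings(fake_binary))
# ['Enter password:', 'hunter2-secret']
```

On a real file you'd pass in the bytes from `open(path, "rb").read()` instead.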

They tested GPT-4's ability to reason about (mentally "execute") pseudo-code in a nonexistent programming language (that looks something like R), which it is able to do.

"Can one reasonably say that a system that passes exams for software engineering candidates is not really intelligent?"

"In its current state, we believe that GPT-4 has a high proficiency in writing focused programs that only depend on existing public libraries, which favorably compares to the average software engineer's ability. More importantly, it empowers both engineers and non-skilled users, as it makes it easy to write, edit, and understand programs. We also acknowledge that GPT-4 is not perfect in coding yet, as it sometimes produces syntactically invalid or semantically incorrect code, especially for longer or more complex programs. [...] With this acknowledgment, we also point out that GPT-4 is able to improve its code by responding to both human feedback (e.g., by iteratively refining a plot) and compiler / terminal errors."
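
The "responding to compiler / terminal errors" part can be pictured as a loop: generate code, run it, and hand any error output back to the model. Here's a minimal Python sketch of such a loop, under stated assumptions. `ask_model` stands in for whatever call actually reaches GPT-4; it isn't a real library function, and `fake_model` is a hardcoded stand-in that makes one typo and then fixes it. The paper describes GPT-4 fixing code from this kind of feedback, not this particular harness.

```python
import subprocess
import sys


def run_python(code: str):
    """Run code in a fresh interpreter; return (succeeded, error_text).

    Generated code should really be sandboxed; a subprocess only isolates it
    from this program's memory, not from the machine.
    """
    result = subprocess.run(
        [sys.executable, "-c", code], capture_output=True, text=True, timeout=30
    )
    return result.returncode == 0, result.stderr


def refine_until_it_runs(ask_model, task: str, max_rounds: int = 3):
    """Ask for code, run it, and feed any error output back for another try."""
    feedback = None
    for _ in range(max_rounds):
        code = ask_model(task, feedback)
        ok, feedback = run_python(code)
        if ok:
            return code
    return None  # still failing after max_rounds attempts


def fake_model(task, feedback):
    """Hypothetical stand-in for an LLM call: the first answer has a typo."""
    if feedback is None:
        return "print(sum(range(10))"  # missing ")" -> SyntaxError
    return "print(sum(range(10)))"


print(refine_until_it_runs(fake_model, "print the sum of 0 through 9"))
# print(sum(range(10)))
```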

The reality of this capability really hit me when Google Code Jam was canceled. I've done it every year for 15 years and, poof! Gone. It's because of AI. If they held Code Jam this year, they wouldn't be testing people's programming ability; they'd be testing people's ability to cut-and-paste into AI systems and prompt them. And since Code Jam is a recruiting tool for Google, the implication is that coding challenges as a way of hiring programmers are over. The larger implication is that employers no longer need algorithm experts who can determine which algorithm applies to a problem and code it competently, or very soon won't. They need "programmer managers" who will manage the AI systems that actually write the code.

Going back from the paper, where GPT-4 succeeded at pretty much everything, to the talk: in the talk he discusses GPT-4's limitations in math. I feel this is pretty much a moot point since GPT-4 has been integrated with Wolfram|Alpha, which can perform all the arithmetic calculations desired without mistakes. But that all happened after the paper was published and this talk was recorded, even though that was only 3 weeks ago. Things are moving fast. Anyway, what he shows here is that GPT-4, as a language model, isn't terribly good at arithmetic. It does pretty well at linguistic reasoning about mathematical problems, though, up to a point.
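
Here's roughly what handing arithmetic off to a tool buys you, as a small Python stand-in. It is not the Wolfram|Alpha plugin or its protocol, just a local evaluator for integer expressions using +, -, and *, which Python computes exactly no matter how many digits are involved. The `evaluate` name is hypothetical, and a real integration would also need the model to decide when to call the tool.

```python
import ast
import operator

# Only these operations are allowed; anything else (names, calls, division,
# powers) is rejected rather than executed.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.USub: operator.neg,
}


def evaluate(expression: str) -> int:
    """Exactly evaluate an integer expression such as "7 * 4 + 8 * 8"."""

    def walk(node):
        if isinstance(node, ast.Constant) and type(node.value) is int:
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.operand))
        raise ValueError(f"unsupported expression: {expression!r}")

    return walk(ast.parse(expression, mode="eval").body)


print(evaluate("7 * 4 + 8 * 8"))  # 92
print(evaluate("123456789 * 987654321"))  # 121932631112635269
```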

Sparks of AGI: Early experiments with GPT-4 - Sebastien Bubeck

#solidstatelife #ai #generativemodels #nlp #lmms #gpt #agi