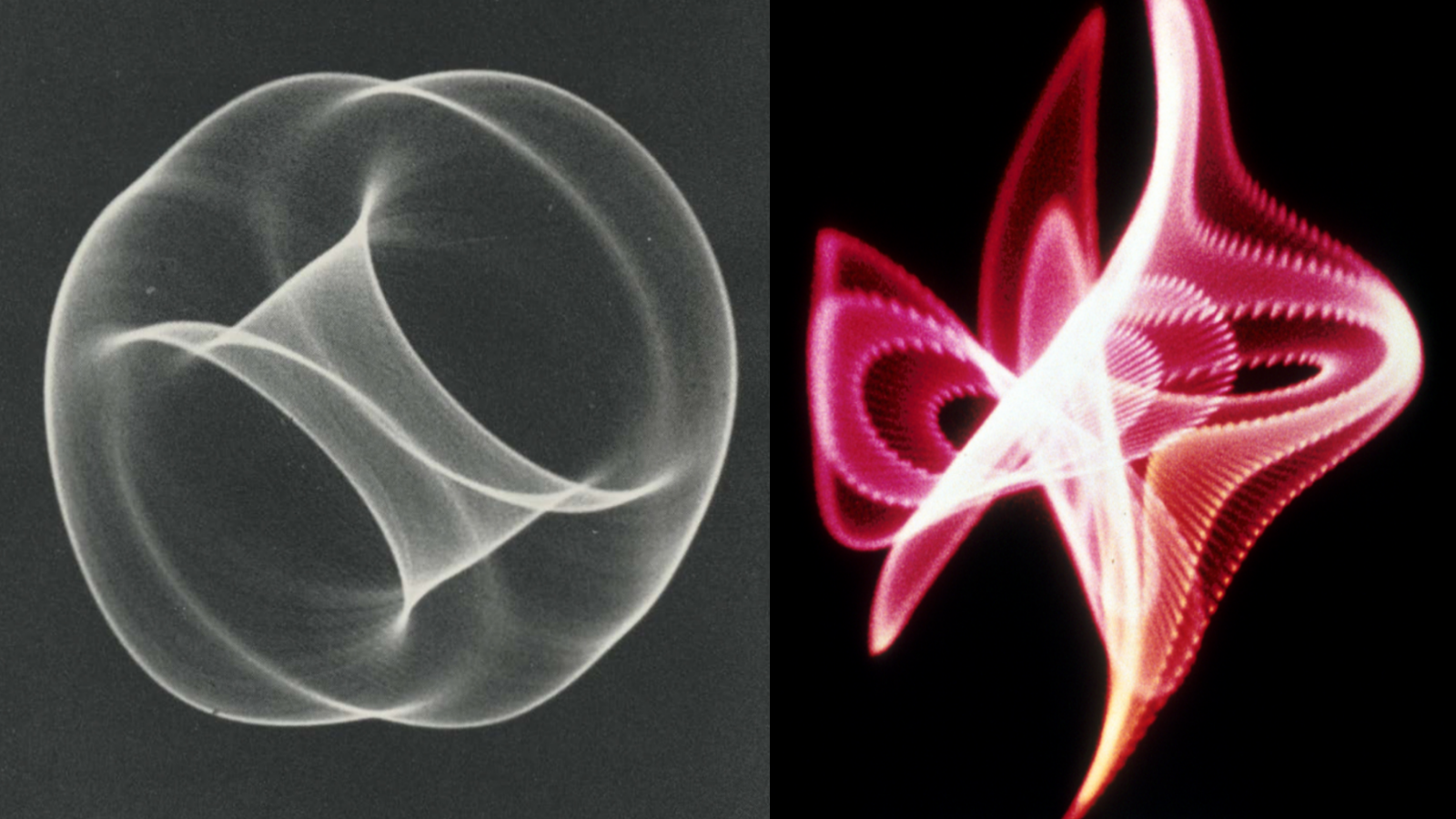

Gaussian splatting: a new technique for rendering 3D scenes, and a successor to neural radiance fields (NeRF). In traditional computer graphics, scenes are represented as meshes of polygons. The polygons have a surface that reflects light, and the GPU calculates the angle at which the light hits each polygon and how the polygon's surface affects the reflected light: color, diffusion, transparency, and so on.

In the world of neural radiance fields (NeRF), a neural network, trained on a set of photos from a scene, will be asked: from this point in space, with the light ray going in this direction, how should the pixel rendered to the screen be colored? In other words, you are challenging a neural network to learn ray tracing. The neural network is challenged to learn all the details of the scene and store those in its parameters. It's a crazy idea, but it works. But it also has limitations -- you can't view the scene from an angle very far from the original photos, it doesn't work for scenes that are too large, and so on. At no point does it ever translate the scene into traditional computer graphics meshes, so the scene can't be used in a video game or anything like that.

The new technique goes by the unglamorous name "Gaussian splatting". This time, instead of asking a neural network to tell you the result of a ray trace, you start from a "point cloud", that is to say, simply a collection of points, not even polygons. In the paper, this sparse point cloud comes from the structure-from-motion step used to work out where each photo was taken, not from a neural network. This is just the first step. Once you have the initial point cloud, you switch to Gaussians.

The concept of a 3D "Gaussian" may take a minute to wrap your brain around. We're all familiar with the "bell curve", also called the normal distribution or the Gaussian distribution. That function takes 1 input, that is to say, G = f(x). To make a 3D Gaussian, you give it all 3 spatial dimensions, so G = f(x, y, z): a soft blob that is densest at its center and fades out with distance in every direction.
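To make that concrete, here is a minimal sketch in plain Python of the bell curve in 1 dimension and its 3D counterpart with the same spread in every direction. The function names and the `sigma` parameter are my own choices for illustration, not anything from the paper, and both functions leave out the normalizing constant so the peak value is simply 1.

```python
import math

def gaussian_1d(x, mean=0.0, sigma=1.0):
    """Unnormalized bell curve: 1.0 at the mean, falling off with distance."""
    return math.exp(-0.5 * ((x - mean) / sigma) ** 2)

def gaussian_3d(point, mean=(0.0, 0.0, 0.0), sigma=1.0):
    """Same falloff, but the distance is measured in 3D (a spherical blob)."""
    dist_sq = sum((p - m) ** 2 for p, m in zip(point, mean))
    return math.exp(-0.5 * dist_sq / sigma ** 2)

print(gaussian_1d(0.0))              # value at the center of the curve
print(gaussian_3d((1.0, 0.0, 0.0)))  # value one sigma away along x
```

The only thing that changes between the two is how the distance from the center is measured.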

Not only that, but they make a big issue in the paper about the fact that their 3D Gaussians are "anisotropic". What this means is that they are not nice, spherical Gaussians, but rather, they are stretched and have a direction. Directionality matters throughout 3D graphics: many materials are directional, such as wood grain, brushed metal, fabric, and hair, and so are situations such as non-spherical light sources, textures viewed at a very oblique angle, and scenes with sharp edges. A Gaussian that can be stretched and oriented can hug a thin or flat piece of the scene in a way a spherical one cannot.
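Here is a rough sketch of what "stretched and with a direction" means numerically. As I understand the paper, each Gaussian gets its own scale along three axes plus a rotation. To keep the example short I assume a rotation about the z axis only, and the names `scales` and `yaw` are mine.

```python
import math

def anisotropic_gaussian(point, mean, scales, yaw):
    """Unnormalized 3D Gaussian stretched by `scales` and rotated by `yaw`
    radians about the z axis (a full version would allow any 3D rotation)."""
    dx, dy, dz = (p - m for p, m in zip(point, mean))
    # Rotate the offset into the Gaussian's own frame (inverse rotation).
    c, s = math.cos(yaw), math.sin(yaw)
    lx = c * dx + s * dy
    ly = -s * dx + c * dy
    lz = dz
    # Divide by the per-axis scale: a large scale means slow falloff on that axis.
    sx, sy, sz = scales
    q = (lx / sx) ** 2 + (ly / sy) ** 2 + (lz / sz) ** 2
    return math.exp(-0.5 * q)

# A long, thin Gaussian lying along the x axis.
needle = dict(mean=(0.0, 0.0, 0.0), scales=(3.0, 0.2, 0.2), yaw=0.0)
along = anisotropic_gaussian((1.0, 0.0, 0.0), **needle)
across = anisotropic_gaussian((0.0, 1.0, 0.0), **needle)
print(along > across)  # True: it fades much faster across than along
```

Setting all three scales equal gives back the spherical case, which is why the spherical Gaussian is the special case and the anisotropic one is the general one.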

At this point you might be thinking: this all sounds a lot more complicated than simple polygons. What does using 3D Gaussians get us? The answer is that, unlike polygons, 3D Gaussians are differentiable. That magic word means you can calculate a gradient, which you might remember from your calculus class. Having a gradient means you can train it using stochastic gradient descent -- in other words, you can train it with standard deep learning training techniques. Now you've brought your 3D representation into the world of neural networks.
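A toy version of that idea, in plain Python: slide a 1D Gaussian toward a target by following the gradient of an error measure. This is ordinary full-batch gradient descent rather than the stochastic kind, the derivative is written out by hand rather than computed by a deep learning framework, and the learning rate of 0.1 and the 200 steps are arbitrary values I picked.

```python
import math

def bump(x, center):
    """Unnormalized 1D Gaussian with unit width."""
    return math.exp(-0.5 * (x - center) ** 2)

def loss_and_grad(center, xs, targets):
    """Squared error against the targets, and its derivative w.r.t. center."""
    loss, grad = 0.0, 0.0
    for x, t in zip(xs, targets):
        g = bump(x, center)
        loss += (g - t) ** 2
        # Chain rule: d(bump)/d(center) = bump * (x - center)
        grad += 2.0 * (g - t) * g * (x - center)
    return loss, grad

xs = [i * 0.5 for i in range(-6, 11)]      # sample positions from -3 to 5
targets = [bump(x, 2.0) for x in xs]       # "ground truth" centered at 2.0

center = 0.0                               # deliberately wrong initial guess
for step in range(200):
    loss, grad = loss_and_grad(center, xs, targets)
    center -= 0.1 * grad                   # step downhill

print(round(center, 3))
```

The point is that nothing here needed to know it was dealing with "geometry". Because the Gaussian is a smooth function of its center, the error tells you which way to move it.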

Even so, more cleverness is required to make the system work. The researchers made a special training system that enables geometry to be created, deleted, or moved within a scene, because, inevitably, geometry gets incorrectly placed due to the ambiguities of the initial 3D to 2D projection. After every 100 iterations, Gaussians that are "essentially transparent" are removed, while new ones are added to "densify" and fill gaps in the scene. To do this, they made an algorithm to detect regions that are not yet well reconstructed.
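The remove-and-densify step might look something like this sketch. Everything specific here is an assumption of mine: the `Splat` class, the threshold values, and using a single `grad_norm` number as the signal that a region is not yet well reconstructed. The paper's actual procedure is more involved.

```python
import random
from dataclasses import dataclass

@dataclass
class Splat:
    position: tuple      # (x, y, z) center
    opacity: float       # 0.0 = invisible, 1.0 = fully opaque
    grad_norm: float     # hypothetical: how hard training is pushing on it

def prune_and_densify(splats, min_opacity=0.005, grad_threshold=0.5,
                      jitter=0.01, rng=random):
    """Drop nearly transparent splats, then clone the ones under pressure."""
    kept = [s for s in splats if s.opacity >= min_opacity]
    clones = []
    for s in kept:
        if s.grad_norm > grad_threshold:
            # Place a copy nearby so two splats can share the work.
            nudged = tuple(c + rng.uniform(-jitter, jitter) for c in s.position)
            clones.append(Splat(nudged, s.opacity, 0.0))
    return kept + clones

scene = [
    Splat((0.0, 0.0, 0.0), 0.9, 0.1),    # fine as it is
    Splat((1.0, 0.0, 0.0), 0.001, 0.0),  # essentially transparent: removed
    Splat((2.0, 0.0, 0.0), 0.8, 0.9),    # struggling: gets a neighbor
]
scene = prune_and_densify(scene)
print(len(scene))  # 3: one removed, one added
```

In a training loop this would run every 100 iterations, between ordinary gradient steps.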

With these in place, the training system generates 2D images and compares them to the training views provided, and iterates until it can render them well.

To do the rendering, they developed their own fast renderer for Gaussians. When the Gaussians are rendered to the screen, they are called "splats", which is where the "splatting" in the name comes from. The reason they took the trouble to create their own rendering system was, you guessed it, to make the entire rendering pipeline differentiable.
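For a feel of what happens at a single pixel: my understanding is that the splats covering the pixel are sorted by depth and blended front to back, each one contributing its color in proportion to its opacity and to how much light the splats in front of it let through. The sketch below is plain Python, not the paper's GPU renderer, and the `composite` function is my own name.

```python
def composite(splats_front_to_back):
    """Blend (color, alpha) pairs for one pixel, nearest splat first."""
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0          # fraction of light still passing through
    for rgb, alpha in splats_front_to_back:
        weight = transmittance * alpha
        for i in range(3):
            color[i] += weight * rgb[i]
        transmittance *= 1.0 - alpha
    return color, transmittance

pixel, remaining = composite([
    ((1.0, 0.0, 0.0), 0.5),      # half-transparent red splat in front
    ((0.0, 0.0, 1.0), 1.0),      # opaque blue splat behind it
])
print(pixel, remaining)          # [0.5, 0.0, 0.5] 0.0
```

Notice that the whole thing is just multiplications and additions, which is exactly what you want if gradients need to flow from the pixel color back to each splat's color and opacity.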

#solidstatelife #ai #genai #computervision #computergraphics

DreamWorks has announced its intention to open source MoonRay, their Monte Carlo raytracer. A Monte Carlo raytracer (MCRT) is a renderer that produces photorealistic images once it has fully converged, given high enough quality assets and enough time and computing power to get there. It is called Monte Carlo because it incorporates random numbers. It uses a global illumination model, which enables it to render soft shadows, color bleeding, caustics (the patterns of light projected onto a surface by rays reflected or refracted by a curved surface or object), and other optical phenomena.
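To show what "incorporates random numbers" and "converge" mean here, this is a tiny Monte Carlo estimate in plain Python. It has nothing to do with MoonRay's actual code. It estimates how much light a flat surface receives from a uniformly bright sky, which works out to the integral of cos(theta) over the hemisphere, a quantity known to equal pi. The function name is mine.

```python
import math
import random

def estimate_sky_light(num_samples, rng):
    """Monte Carlo estimate of the integral of cos(theta) over the hemisphere.

    For directions chosen uniformly on a hemisphere, cos(theta) is itself
    uniform on [0, 1], and each direction has probability density 1 / (2*pi).
    """
    total = 0.0
    for _ in range(num_samples):
        cos_theta = rng.random()
        total += cos_theta * (2.0 * math.pi)   # sample value / density
    return total / num_samples

rng = random.Random(0)
for n in (10, 1000, 100000):
    print(n, estimate_sky_light(n, rng))   # approaches pi as n grows
```

With a handful of samples the answer is noisy, which in a rendered image shows up as grain. More samples means less noise, which is why these renderers need "enough time and computing power to converge".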

"MoonRay is DreamWorks' open-source, award-winning, state-of-the-art production MCRT renderer, which has been used on feature films such as How to Train Your Dragon: The Hidden World, Trolls World Tour, The Bad Guys, the upcoming Puss In Boots: The Last Wish, as well as future titles. MoonRay was developed at DreamWorks and is in continuous active development and includes an extensive library of production-tested, physically based materials, a USD Hydra render delegate, multi-machine and cloud rendering via the Arras distributed computation framework."

"MoonRay is developed in-house and is maintained by DreamWorks Animation for all of their feature film production. Many thanks go to all of the engineers for building and delivering this state of the art MCRT renderer since the very beginning, and to DreamWorks Animation for continuing a long tradition of contributing back to the wider computer graphics community."

MoonRay will be released under the Apache License, Version 2.0.

Definitely check out the Sizzle Reel.
