#nerf

During physical exercise, muscles promote neuron growth - Sciences et Avenir ⬅️ Main URL used for the Diaspora* preview, with a better guarantee of availability.

Archive the page yourself if no archive exists yet, which also lets you avoid trackers, then use uBlock Origin to remove any banners that remain on the saved page.

💾 archive.org

#santé #sport #exercicephysique #activitéphysique #neurogénèse #neurones #motoneurones #croissancenerveuse #activitémusculaire #nerf #biologie

The myelin surrounding nerve fibers may generate quantum entanglement ⬅️ Main URL used for the Diaspora* preview, with a better guarantee of availability.

Archive the page yourself if no archive exists yet, which also lets you avoid trackers, then use uBlock Origin to remove any banners that remain on the saved page.

💾 archive.org

#physiquequantique #intricationquantique #fibrenerveuse #nerf #systèmenerveux #myéline #étude #conscience #photon #biophotons #biophotonique


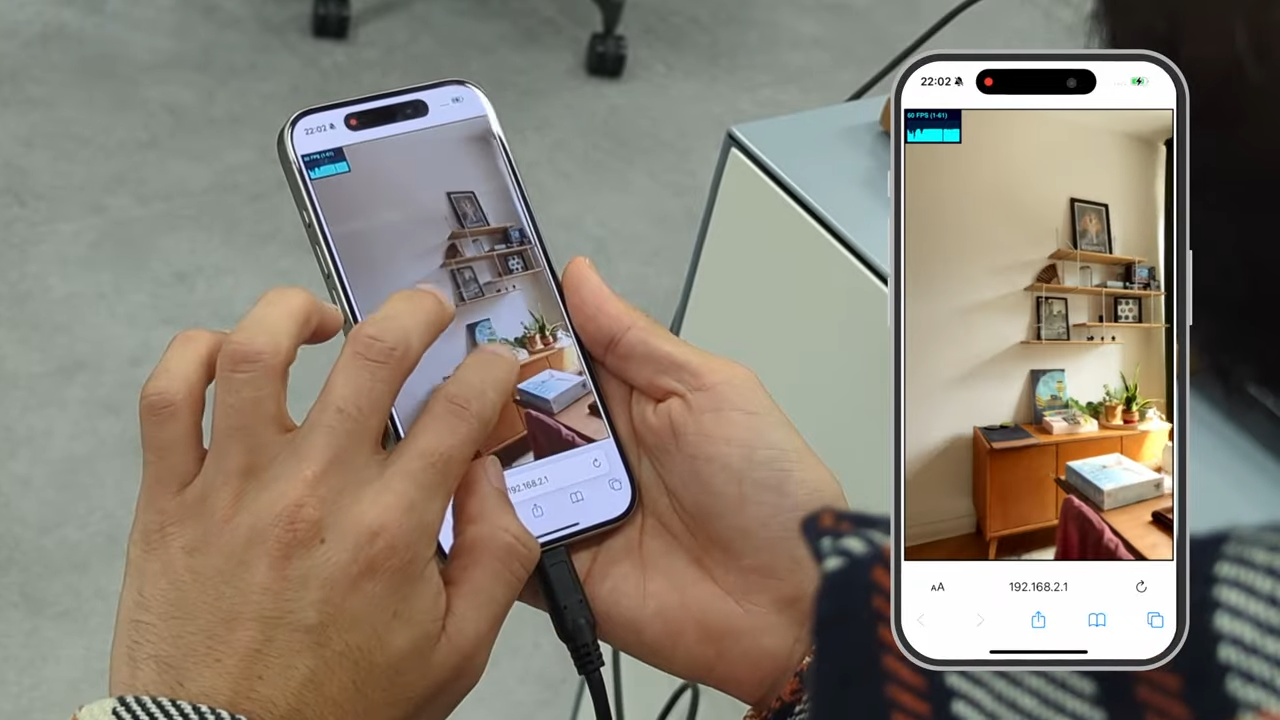

CityNeRF: NeRF at city scale. As the name suggests, this is about NeRF, which stands for Neural Radiance Fields, an alternative way of using neural networks to render a scene: the network is trained with light rays as input and produces colors as output (actually colors and densities, which allows for things like degrees of transparency). Previous NeRF systems were limited to a bounded scene and to a limited range of viewpoints from which that scene could be viewed.
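To make the "colors and densities" part concrete, here's a minimal sketch of how per-sample outputs along one ray are usually blended into a pixel in NeRF-style rendering. The function name `composite_ray` and the toy inputs are my own for illustration; in a real system the colors and densities come from the trained network, and I'm not claiming this is CityNeRF's exact code.

```python
import math

def composite_ray(colors, densities, deltas):
    """Blend samples along one ray, front to back.

    colors:    list of (r, g, b) tuples, one per sample
    densities: list of non-negative densities, one per sample
    deltas:    list of distances covered by each sample
    """
    rgb = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet blocked
    weights = []
    for color, sigma, delta in zip(colors, densities, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha          # contribution to the pixel
        weights.append(weight)
        rgb = [acc + weight * c for acc, c in zip(rgb, color)]
        transmittance *= 1.0 - alpha
    return rgb, weights

# A half-transparent red sample in front of a half-transparent green one.
half = math.log(2.0)  # density that gives alpha = 0.5 over unit distance
pixel, weights = composite_ray([(1, 0, 0), (0, 1, 0)], [half, half], [1.0, 1.0])
```

A low density lets most of the light through to the samples behind it, which is where the degrees of transparency come from.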

It took me a while to figure out what these researchers did to get around these limitations. There are basically two tricks that they employed. The first is to train the neural network successively from distant viewpoints to close-up viewpoints -- and to train the neural network on transitions in between these "levels". This was inspired by "level of detail" systems currently in use by traditional 3D computer rendering systems. "Joint training on all scales results in blurry texture in close views and incomplete geometry in remote views. Separate training on each scale yields inconsistent geometries and textures between successive scales." So the system starts at the most distant level and incorporates more and more information from the next closer level as it progresses from level to level.
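Here's a rough sketch of what such a distant-to-close schedule could look like. Everything in it is hypothetical: `progressive_schedule`, `train_progressively` and the `train_step` callback are names I made up, and I'm assuming the earlier (more distant) levels stay in the training set as closer ones are added, which I haven't verified against the authors' code.

```python
def progressive_schedule(num_levels):
    """Yield (stage, active_levels); level 0 is the most distant view."""
    for stage in range(num_levels):
        # Assumption: each stage keeps all earlier levels and adds one closer level.
        yield stage, list(range(stage + 1))

def train_progressively(views_by_level, train_step):
    """Call train_step(stage, views) once per stage with the growing view set."""
    for stage, active in progressive_schedule(len(views_by_level)):
        views = [v for level in active for v in views_by_level[level]]
        train_step(stage, views)

# Toy usage: three levels of made-up view names, and a train_step that just logs.
log = []
train_progressively(
    [["far_a", "far_b"], ["mid_a"], ["near_a"]],
    lambda stage, views: log.append((stage, views)),
)
```

This sits between the two failure modes in the quote: it is neither joint training on all scales from the start nor separate training per scale.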

The next trick was to modify the neural network itself at each level. The way this is done is by adding what they call a "block". If you're wondering why they didn't just call it a "layer" or "set of layers", it's because a "block" is more complicated than that, with two separate information flows: one for the more distant level and one for the closer level being trained at that moment. It's designed in such a way that a set of information called "base" information is determined for the more distant level, and then "residual" information (in the form of colors and densities) that modifies the "base" and adds detail is calculated from there.
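The base-plus-residual idea can be sketched with plain numbers. This is only an illustration under my own assumptions: in the real system each block is a piece of neural network, whereas here the base and residual outputs are fixed `(r, g, b, density)` tuples, and `output_at_level` is a made-up name.

```python
def output_at_level(base, residuals, level):
    """Return base output plus the residuals of the first `level` blocks.

    base:      (r, g, b, density) predicted for the most distant level
    residuals: list of (r, g, b, density) corrections, one per added block
    level:     how many blocks to apply (0 means base only)
    """
    out = list(base)
    for residual in residuals[:level]:
        out = [o + r for o, r in zip(out, residual)]
    return out

base = (0.5, 0.5, 0.5, 1.0)
residuals = [(0.25, 0.0, -0.25, 0.5), (0.0, 0.125, 0.0, 0.25)]
coarse = output_at_level(base, residuals, 0)  # base only
fine = output_at_level(base, residuals, 2)    # base plus both residuals
```

The point of the design is that the coarse answer is never thrown away; each closer level only has to learn the correction on top of it.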

Watch the example result clips full screen to get an idea of what the system produces. The cityscapes these examples were trained on come from Google Earth Studio. Fourier analysis was used to demonstrate that high-frequency detail is added in the expected amounts as the system is trained from one level of detail to the next.
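For a feel of what "high-frequency detail" means in a Fourier sense, here's a toy 1D version. It is not the authors' analysis: `high_freq_fraction`, the cutoff, and the two synthetic signals are all my own assumptions, standing in for a coarse render and a more detailed one.

```python
import cmath
import math

def high_freq_fraction(signal, cutoff):
    """Fraction of non-DC spectral energy at frequency index >= cutoff (cutoff >= 1)."""
    n = len(signal)
    energy = []
    for k in range(n // 2 + 1):
        # Plain discrete Fourier transform coefficient for frequency index k.
        coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n)
                    for t, x in enumerate(signal))
        energy.append(abs(coeff) ** 2)
    total = sum(energy[1:])  # skip the DC term
    high = sum(energy[cutoff:])
    return high / total if total else 0.0

n = 32
coarse_signal = [math.sin(2 * math.pi * t / n) for t in range(n)]
detailed_signal = [c + 0.5 * math.sin(2 * math.pi * 8 * t / n)
                   for t, c in enumerate(coarse_signal)]
coarse_share = high_freq_fraction(coarse_signal, 4)
detailed_share = high_freq_fraction(detailed_signal, 4)
```

The detailed signal puts a noticeably larger share of its energy above the cutoff, which is the kind of shift you'd expect to see as finer levels are trained.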
