A lens's focal length doesn't cause perspective distortion, the effect often attributed to "lens compression." Period. Now, before you call me crazy and dismiss whatever I have to say, I’d like to invite you to take a dive into how lenses work and what actually causes perspective distortion.

Definition

There are two types of distortion in photography: optical and perspective. It is quite easy to confuse the two, as we commonly associate wide-angle lenses with distortion. In simple terms, distortion is any effect that makes a photograph look different from how we perceive the same scene with our own eyes.

Optical Distortion

Optical distortion is caused by the lens alone: the lens takes straight lines and renders them bent. It is usually radially symmetric, with the least distortion at the center of the frame and the most at the edges. You can see this with a simple test against a radiator, whose vertical lines are perfectly straight:

[Radiator test: 50mm vs. 24mm]

As we can see, at 50mm the lens renders the lines straight, whereas at 24mm, shot from the same distance, it already starts to bend them.

There are three types of optical distortion: barrel, pincushion, and mustache. Each has its own characteristic shape; however, discussing them in detail is beyond the scope of this article.
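Without going into each type, the "least at the center, most at the edges" behavior is commonly modeled as a polynomial in the distance r from the image center. The sketch below assumes the first two radial terms of the Brown-Conrady model (a standard calibration model, not something this article relies on) with made-up coefficients k1 and k2, chosen only to show the usual sign conventions: negative k1 pulls points inward (barrel), positive k1 pushes them outward (pincushion), and opposite-sign k1 and k2 give the mixed mustache shape.

```python
def distort_radius(r, k1, k2=0.0):
    """Map an undistorted, normalized radius r (0 = image center, 1 = edge)
    to its distorted radius using two radial Brown-Conrady terms."""
    return r * (1 + k1 * r**2 + k2 * r**4)

# Hypothetical coefficients, for illustration only (not measured from any lens).
BARREL = dict(k1=-0.2)
PINCUSHION = dict(k1=0.2)
MUSTACHE = dict(k1=-0.2, k2=0.3)  # inward near the center, outward near the edge

for name, coeffs in [("barrel", BARREL), ("pincushion", PINCUSHION), ("mustache", MUSTACHE)]:
    # Radial shift (distorted minus undistorted) at the center, halfway out, and at the edge.
    shifts = [round(distort_radius(r, **coeffs) - r, 4) for r in (0.0, 0.5, 1.0)]
    print(name, shifts)
```

The shift is always zero at the center and grows toward the edge, which is why the radiator's outer lines bend first.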

A Word on Lens Types

Before I show that focal length doesn’t affect perspective distortion, I need to explain the two lens types that exist in photography: curvilinear and rectilinear. The difference is in how each type renders an image.

A curvilinear lens renders straight lines as curves, producing the characteristic fisheye look. Canon’s 8-15mm f/4 fisheye lens is a perfect example of that.

A rectilinear lens, on the other hand, keeps straight lines straight, which is closer to human vision. This distinction matters most for wide-angle lenses, which are the most prone to optical distortion. Canon’s 16-35mm f/2.8 lens is a great rectilinear lens. Here is how they look:
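One way to see the difference is through the projection each lens type follows. A rectilinear lens places a ray arriving at angle θ off the optical axis at a distance r = f·tan θ from the image center, which is what keeps straight lines straight. Fisheye lenses use other mappings; the sketch below assumes the idealized equidistant model r = f·θ as one representative example (real fisheyes may follow a different mapping), with a hypothetical 8mm focal length.

```python
import math

def rectilinear_radius(f_mm, theta_deg):
    """Sensor distance (mm) from the image center for a ray theta degrees
    off-axis, for an ideal rectilinear lens: r = f * tan(theta)."""
    return f_mm * math.tan(math.radians(theta_deg))

def equidistant_fisheye_radius(f_mm, theta_deg):
    """Same, for an idealized equidistant fisheye: r = f * theta (radians)."""
    return f_mm * math.radians(theta_deg)

f = 8.0  # hypothetical focal length in mm
for theta in (10, 30, 60, 80):
    print(f"{theta:>2} deg: rectilinear {rectilinear_radius(f, theta):6.2f} mm, "
          f"fisheye {equidistant_fisheye_radius(f, theta):6.2f} mm")
```

Near the center the two mappings nearly agree. Off-axis, the rectilinear radius grows much faster and diverges as θ approaches 90°, so a rectilinear lens can never cover a 180° field; a fisheye can, at the cost of bending straight lines.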

Perspective Distortion

This is the juicy part of the article. Often optical and perspective distortions are confused. Let me clear up what perspective distortion is.

A camera simply projects a 3D scene onto a 2D sensor. This, of course, has its drawbacks: an object placed closer to the camera appears much larger relative to the background.

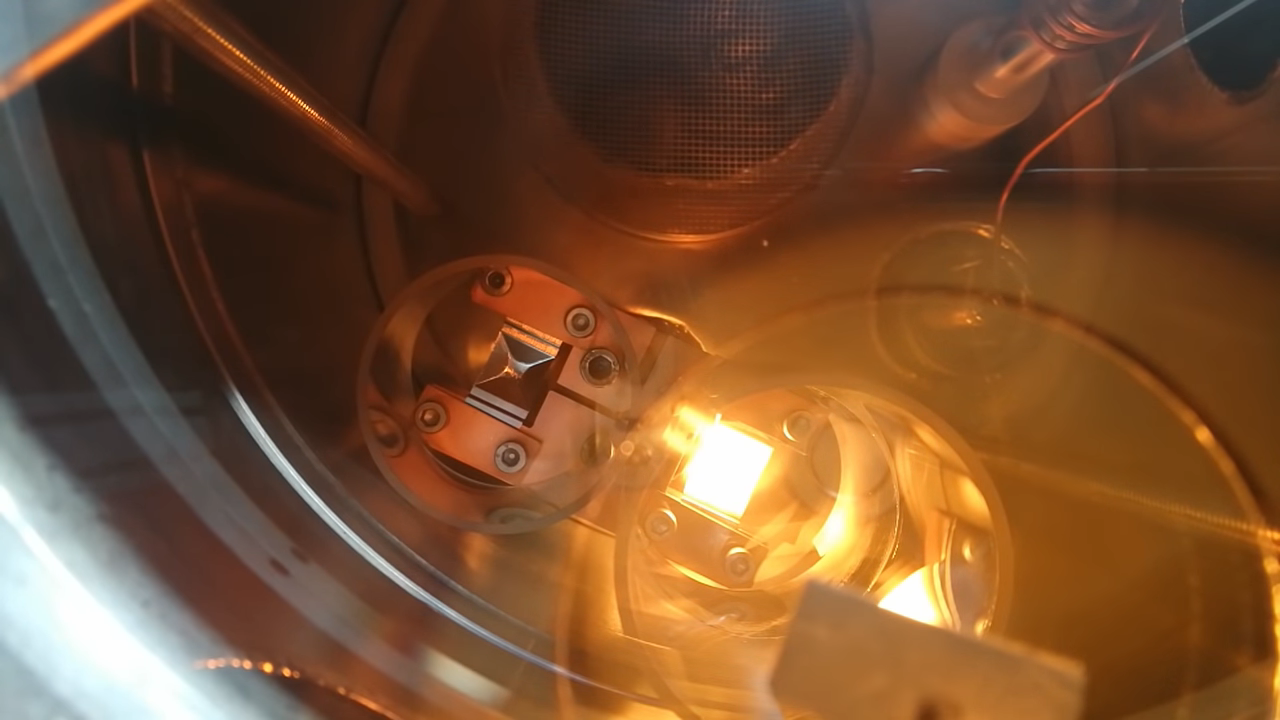

Here is what I mean:

Can you see how, as I move the 24-70mm closer to the camera, it appears larger until it is the same size as the 70-200mm?
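A simple pinhole-camera model makes this concrete: an object of height H at distance Z projects to a height h = f·H/Z on the sensor. The sketch below uses made-up body lengths for the two lenses (110mm and 200mm, not measured values) and a hypothetical 1 m distance for the 70-200mm, then finds where the shorter lens must sit to look equally large. The result, Z_near = Z_far · H_near / H_far, contains no focal length at all.

```python
def image_size(f_mm, object_size_mm, distance_mm):
    """Pinhole-camera approximation: projected size on the sensor = f * H / Z."""
    return f_mm * object_size_mm / distance_mm

# Hypothetical sizes and distances, for illustration only.
short_lens = 110.0     # stand-in for the 24-70mm body length, in mm
long_lens = 200.0      # stand-in for the 70-200mm body length, in mm
far_distance = 1000.0  # the 70-200mm sits 1 m from the camera

# Distance at which the shorter lens projects to the same size as the longer one.
match_distance = far_distance * short_lens / long_lens

# Whatever focal length the camera uses, the two projected sizes match at that distance.
for f in (16.0, 35.0, 70.0):
    print(f, image_size(f, long_lens, far_distance), image_size(f, short_lens, match_distance))
```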

Our brains have learned to interpret this and recognize that one object is simply closer than the other. (It is suspected that babies lack this ability and therefore perceive whatever is closest to them as the biggest.)

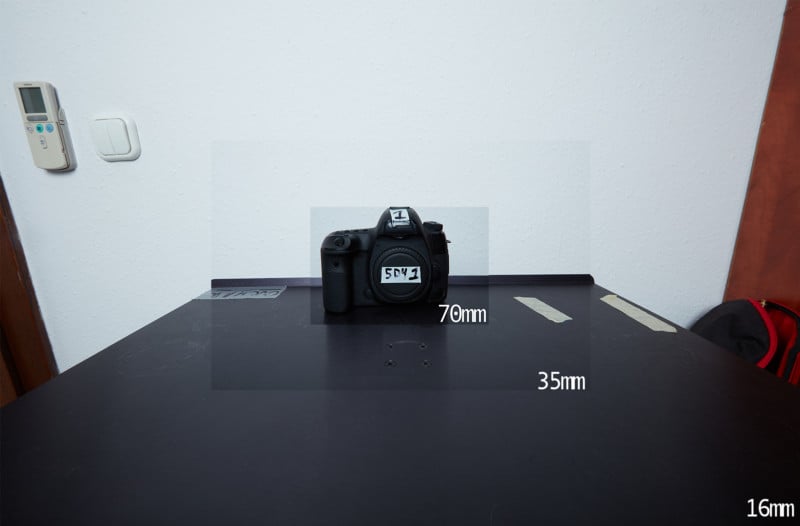

So, how would an image taken with a wide-angle lens differ from one taken with a mid-range or telephoto lens? To find out, I took three photos: one at 16mm, one at 35mm, and one at 70mm. The camera was not moved, so the image plane stayed in the same position for all three.

Here is how they look:

Afterward, I cropped each picture to show the same part of the scene and overlaid them on top of each other.
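Why do the crops line up? In the same pinhole model, changing the focal length from a fixed camera position multiplies every projected coordinate by the same factor, so the 70mm frame is just the center of the 16mm frame enlarged by 70/16. The sketch below checks this with a few made-up scene points at very different depths; it ignores lens distortion and assumes the center of projection stays put when the focal length changes.

```python
def project(point, f_mm):
    """Pinhole projection of a 3D point (X, Y, Z) in camera coordinates (mm)
    onto sensor coordinates (x, y) in mm for focal length f_mm."""
    X, Y, Z = point
    return (f_mm * X / Z, f_mm * Y / Z)

# Hypothetical scene points (mm): a near subject, a mid-ground object, a far background.
scene = [(100.0, 50.0, 1000.0), (-300.0, 200.0, 5000.0), (2000.0, -800.0, 20000.0)]

wide, tele = 16.0, 70.0
scale = tele / wide  # enlargement applied to the cropped 16mm frame

for p in scene:
    xw, yw = project(p, wide)
    xt, yt = project(p, tele)
    # Enlarged wide-angle coordinates vs. true telephoto coordinates.
    print((round(xw * scale, 6), round(yw * scale, 6)), (round(xt, 6), round(yt, 6)))
```

Because the scale factor is the same for near and far points, cropping changes the framing but not the relative sizes of anything in the scene.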

Next, I moved the camera to a position where the subject in the center of the frame would appear approximately the same size as in the 16mm image. This was rather difficult, since the lens couldn't focus that close. Still, the perspective distortion is clear.
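The pinhole model also shows what changes when the camera moves instead. To keep the subject the same size at a longer focal length you have to stand farther back, in proportion to f, and that larger distance is what brings the background's relative size closer to the subject's. A sketch with hypothetical sizes: a 200mm-tall subject filling 10mm of the sensor, and an equally tall object 1 m behind it.

```python
def subject_distance(f_mm, subject_mm, target_image_mm):
    """Camera-to-subject distance that makes the subject project to
    target_image_mm on the sensor (pinhole model: d = f * H / h)."""
    return f_mm * subject_mm / target_image_mm

def background_to_subject_ratio(subject_distance_mm, gap_mm):
    """Image-size ratio of two equally sized objects, one at the subject
    distance and one gap_mm behind it. Note: no focal length involved."""
    return subject_distance_mm / (subject_distance_mm + gap_mm)

# Hypothetical setup, for illustration only.
SUBJECT, TARGET, GAP = 200.0, 10.0, 1000.0

for f in (16.0, 35.0, 70.0, 200.0):
    d = subject_distance(f, SUBJECT, TARGET)
    print(f"{f:>5.0f}mm: camera at {d:6.0f} mm, background/subject size = "
          f"{background_to_subject_ratio(d, GAP):.2f}")
```

The ratio climbs from about 0.24 at 16mm to 0.80 at 200mm, yet `background_to_subject_ratio` only ever sees the distance: the "compression" comes from where the camera stands.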

Closing Thoughts

As you can see, there are different types of distortion. When it comes to perspective distortion, only the position of the camera (the image plane) affects it. Hence, you can take the exact same picture with a 16mm lens and a 200mm lens simply by cropping the wide-angle photo down to the telephoto framing.
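The size of that crop follows directly from the ratio of focal lengths: from the same spot, a 200mm frame covers 16/200 = 8% of the 16mm frame's width and height. The sketch below assumes a hypothetical 6000 x 4000 pixel (24-megapixel) sensor and ignores the wide lens's optical distortion.

```python
def crop_for_focal_length(width_px, height_px, f_wide_mm, f_tele_mm):
    """Pixel dimensions of the central crop of a wide-angle frame that matches
    the field of view of a longer focal length (same sensor, same camera position)."""
    factor = f_wide_mm / f_tele_mm
    return round(width_px * factor), round(height_px * factor)

# Hypothetical 24-megapixel sensor.
w, h = crop_for_focal_length(6000, 4000, 16, 200)
print(w, h, w * h)
```

The perspective matches, but only about 0.64% of the original pixels remain, so in practice the cropped frame is the same picture at a much lower resolution.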

Different focal lengths lead photographers to choose different distances to their subjects, and it is this distance, not the focal length, that causes perspective distortion. It could therefore more accurately be called "distance compression" rather than "lens compression."

#educational #distortion #explainer #illyaovchar #learn #lenscompression #lenses #misconception #myth #optics #perspectivedistortion #photography