THE most controversial filesystem in the known universe: ZFS - so ext4 is faster on single disk systems - btrfs with snapshots but without the zfs licensing problems

ZFS is probably THE most controversial filesystem in the known universe:

“FOSS means that effort is shared across organizations and lowers maintenance costs significantly” (src: comment by JohnFOSS on itsfoss.com)

“The whole purpose behind ZFS was to provide a next-gen filesystem for UNIX and UNIX-like operating systems.” (src: comment by JohnK3 on itsfoss.com)

“The performance is good, the reliability and protection of data is unparalleled, and the flexibility is great, allowing you to configure pools and their caches as you see fit. The fact that it is independent of RAID hardware is another bonus, because you can rescue pools on any system, if a server goes down. No looking around for a compatible RAID controller or storage device.”

“after what they did to all of SUN’s open source projects after acquiring them. Oracle is best considered an evil corporation, and anti-open source.”

“it is sad – however – that licensing issues often get in the way of the best solutions being used” (src: comment by mattlach on itsfoss.com)

“Zfs is greatly needed in Linux by anyone having to deal with very large amounts of data. This need is growing larger and larger every year that passes.” (src: comment by Tman7 on itsfoss.com)

“I need ZFS, because In the country were I live, we have 2-12 power-fails/week. I had many music files (ext4) corrupted during the last 10 years.” (src: comment by Bert Nijhof on itsfoss.com)

“some functionalities in ZFS does not have parallels in other filesystems. It’s not only about performance but also stability and recovery flexibility that drives most to choose ZFS.” (src: comment by Rubens on itsfoss.com)

“Some BtrFS features outperform ZFS, to the point where I would not consider wasting my time installing ZFS on anything. I love what BtrFS is doing for me, and I won’t downgrade to ext4 or any other fs. So at this point BtrFS is the only fs for me.” (src: comment by Russell W Behne on itsfoss.com)

“Btrfs Storage Technology: The copy-on-write (COW) file system, natively supported by the Linux kernel, implements features such as snapshots, built-in RAID, and self-healing via checksumming for data and metadata. It allows taking subvolume snapshots and supports offline storage migration while keeping snapshots. For users of enterprise storage systems, Btrfs provides file system integrity after unexpected power loss, helps prevent bitrot, and is designed for high-capacity and high-performance storage servers.” (src: storagereview.com)

Btrfs is part of the Linux kernel and therefore GPL 2.0 licenced btw.

bachelor projects are written about btrfs vs zfs (2015)

so…

ext4 is good for notebooks & desktops & workstations (that do regular backups on a separate, external, then disconnected medium)

is zfs “better” on/for servers? (this user says: even on single disk systems, zfs is “better” as it prevents bit-rot-file-corruption)

with server-hardware one means:

- computers with massive computational resources (CPUs, RAM & disks)

- at least 2 disks for RAID1 (mirroring = safety)

- or better: 4 disks for RAID10 (striping + mirroring = speed + safety)

- zfs wants direct access to the disks, without a hardware raid controller or its caches in between. So it is “fine” with simple onboard SATA connections, with HBA cards that do nothing but provide SATA / SAS / NVMe ports, or with hardware raid controllers that can behave like HBA cards (JBOD mode; some need their firmware flashed, some need to be jumpered)

- fun fact: this is not the default for servers. servers (usually) come with LSI (or other vendor) hardware raid cards (that might be possible to switch to JBOD by jumper or by flashing), but that would mean: zfs is only good for servers WITHOUT hardware raid cards X-D (and those are (currently still) rare X-D)

- but zfs would be a “perfect” fit for a consumer-hardware PC (having only SATA ports) used as a server. Many companies, not only Google but also Proxmox and even Hetzner, test out that way of operation, but it might not be the perfect fit for every admin who would rather spend some bucks extra and provide companies with the most reliable hardware possible (redundant power supplies etc.)

- maybe that is also a cluster vs mainframe “thinking”

- so in a cluster, if some nodes fail, it does not matter, as other nodes take over and are replaced fast (but some server has to store the central database, that is not allowed to fail X-D)

- in a non-cluster environment, things might be very different

- “to ECC or not to ECC the RAM”, that is the question:

- zfs also runs on machines without ECC RAM, but:

- for semi-professional purposes non-ECC RAM might be okay

- for companies with critical data, ECC RAM (maximum error correction) is a must: magnetic fields / solar flares could potentially flip some bits in RAM, the faulty data is then written to disk, and ZFS can not correct that

- “authors of a 2010 study that examined the ability of file systems to detect and prevent data corruption, with particular focus on ZFS, observed that ZFS itself is effective in detecting and correcting data errors on storage devices, but that it assumes data in RAM is “safe”, and not prone to error”

- “One of the main architects of ZFS, Matt Ahrens, explains there is an option to enable checksumming of data in memory by using the ZFS_DEBUG_MODIFY flag (zfs_flags=0x10) which addresses these concerns.” (wiki)
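Why ECC matters even with checksums can be shown with a tiny sketch. This is not how ZFS is implemented: SHA-256 merely stands in for whichever checksum the pool uses, and `write_block`, `verify_block` and `flip_bit` are made-up helper names. The point is only the order of events: a bit that flips on disk after the checksum was computed gets caught, a bit that flips in RAM before the checksum was computed does not.

```python
import hashlib


def write_block(data: bytes) -> tuple[bytes, str]:
    """Store a block together with the checksum computed at write time."""
    return data, hashlib.sha256(data).hexdigest()


def verify_block(data: bytes, checksum: str) -> bool:
    """Re-compute the checksum on read and compare it with the stored one."""
    return hashlib.sha256(data).hexdigest() == checksum


def flip_bit(data: bytes, bit_index: int) -> bytes:
    """Return a copy of data with exactly one bit inverted."""
    corrupted = bytearray(data)
    corrupted[bit_index // 8] ^= 1 << (bit_index % 8)
    return bytes(corrupted)


original = b"important company records"

# Case 1: the bit flips on disk, AFTER the checksum was computed.
stored, checksum = write_block(original)
disk_rot_detected = not verify_block(flip_bit(stored, 3), checksum)

# Case 2: the bit flips in RAM, BEFORE the checksum is computed.
stored, checksum = write_block(flip_bit(original, 3))
ram_flip_detected = not verify_block(stored, checksum)

print(disk_rot_detected)  # True: checksum mismatch reveals the bit rot
print(ram_flip_detected)  # False: the checksum matches the faulty data
```

In the second case the filesystem faithfully protects data that was already wrong, which is exactly the gap ECC RAM is meant to close.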

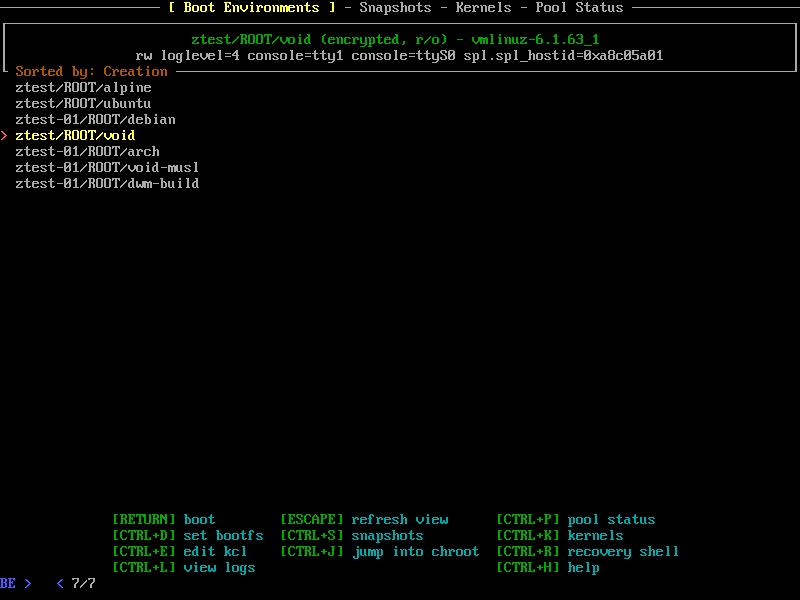

zfs: snapshots!

zfs has awesome features such as:

- snapshots:

- ext4 can do snapshots as well with lvm2, but those lvm2 snapshots are more like temporary “buffers”: they can run out of space for written changes and are then discarded. So they are not “real”, “permanent” snapshots that can be restored or copied to a different system without a time limit.

- zfs comes with data compression to save disk space (won’t gain much for already compressed data such as jpg or mp4, but works well for anything text)
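The compression point is easy to try out. A rough sketch, assuming Python's `zlib` (deflate, the algorithm behind gzip) as a stand-in for the compressors zfs offers; seeded pseudo-random bytes play the role of jpg/mp4 content, and the log line is invented sample text:

```python
import random
import zlib


def compression_ratio(data: bytes) -> float:
    """Compressed size divided by original size (smaller is better)."""
    return len(zlib.compress(data)) / len(data)


# Repetitive, text-like data, e.g. a log file (made-up sample line).
text_like = b"2022-01-20 12:00:00 INFO backup finished without errors\n" * 2000

# Seeded pseudo-random bytes stand in for already compressed media files.
rng = random.Random(42)
media_like = bytes(rng.getrandbits(8) for _ in range(len(text_like)))

print(f"text-like:  {compression_ratio(text_like):.3f}")
print(f"media-like: {compression_ratio(media_like):.3f}")
```

The text-like input shrinks to a small fraction of its size, while the random input stays at roughly its original size.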

many more features:

- Protection against data corruption. Integrity checking for both data and metadata.

- Continuous integrity verification and automatic “self-healing” repair

- Data redundancy with mirroring, RAID-Z1/2/3 [and DRAID]

- Support for high storage capacities — up to 256 trillion yobibytes (2^128 bytes)

- Space-saving with transparent compression using LZ4, GZIP or ZSTD

- Hardware-accelerated native encryption

- Efficient storage with snapshots and copy-on-write clones

- Efficient local or remote replication — send only changed blocks with ZFS send and receive

(src)
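A quick sanity check of the capacity figure in that list: 2^128 bytes divided by one yobibyte (2^80 bytes) is 2^48, so the “256 trillion” is a binary trillion (256 × 2^40), roughly 281 trillion in decimal.

```python
YOBIBYTE = 2**80        # bytes in one YiB
max_bytes = 2**128      # capacity figure from the feature list

yobibytes = max_bytes // YOBIBYTE
print(yobibytes)                 # 281474976710656
print(yobibytes == 256 * 2**40)  # True
```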

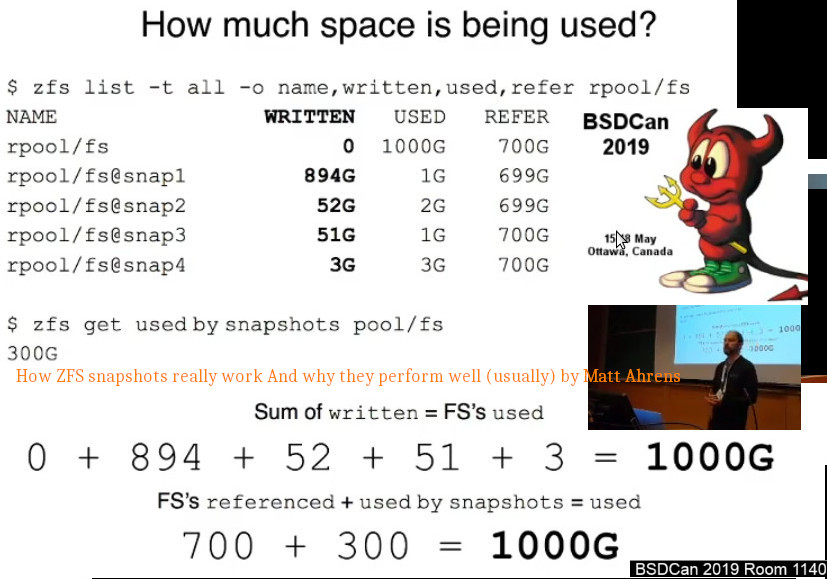

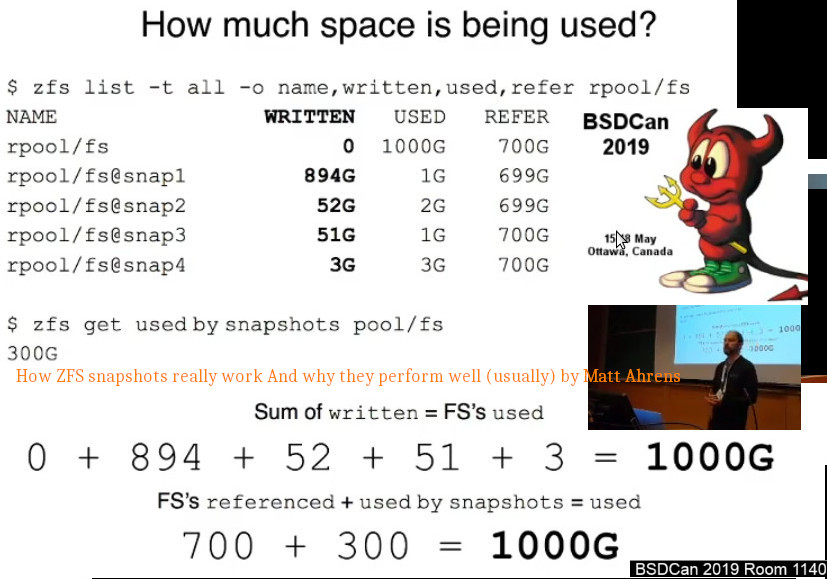

how much space do snapshots use?

look at WRITTEN, not at USED.
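The reason: for a snapshot, USED only counts space that is unique to that one snapshot, while WRITTEN counts the data written since the previous snapshot. A small sketch for reading both side by side, assuming the tab-separated byte output of `zfs list -H -p -t snapshot -o name,used,written`; the sample rows and the `tank/data` names are hypothetical, not taken from a real pool:

```python
def parse_snapshot_sizes(zfs_list_output: str) -> list[dict]:
    """Parse tab-separated 'name, used, written' rows (sizes in bytes)."""
    rows = []
    for line in zfs_list_output.strip().splitlines():
        name, used, written = line.split("\t")
        rows.append({"name": name, "used": int(used), "written": int(written)})
    return rows


# Hypothetical sample, shaped like the output of:
#   zfs list -H -p -t snapshot -o name,used,written
SAMPLE = (
    "tank/data@monday\t0\t1073741824\n"
    "tank/data@tuesday\t0\t536870912\n"
)

for row in parse_snapshot_sizes(SAMPLE):
    print(f"{row['name']}: used={row['used']} written={row['written']}")
```

In this made-up case both snapshots show USED = 0, because every block is still shared with the live dataset, yet WRITTEN shows that 1 GiB and 512 MiB went into them.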

https://ytpak.net/watch?v=NXg86uBDSqI

https://papers.freebsd.org/2019/bsdcan/ahrens-how_zfs_snapshots_really_work/

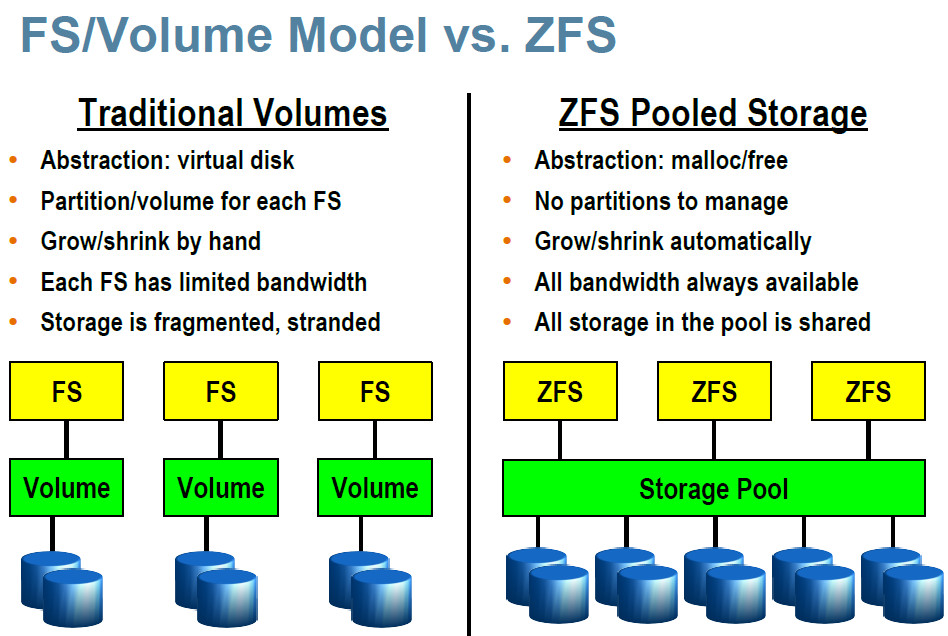

performance?

so on a single-drive system, performance wise ext4 is what the user wants.

on multi-drive systems, the opposite might be true, zfs outperforming ext4.

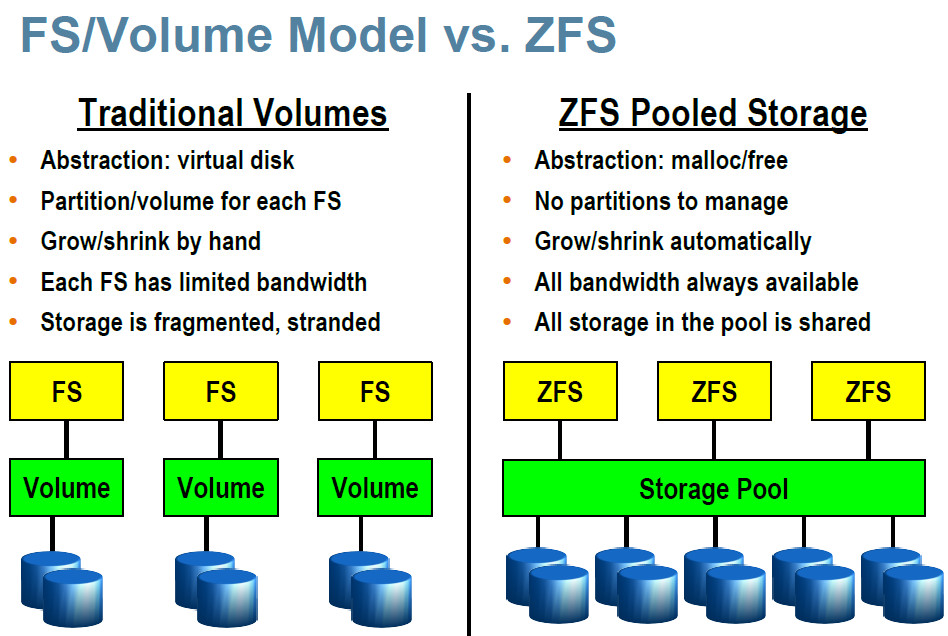

it is a filesystem + a volume manager! 🙂

“is not necessary nor recommended to partition the drives before creating the zfs filesystem” (src, src of src)

http://perftuner.blogspot.com/2017/02/zfs-zettabyte-file-system.html

RAID10?

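zfs has no layout that is literally called “raid10”, but a pool that stripes across several mirror vdevs (e.g. two 2-disk mirrors) is the equivalent. the parity layouts are raidz1/2/3; raidz1 is comparable to raid5, which means: one disk’s worth of space is used for parity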

- “raid5 or raidz distributes parity along with the data

- can lose 1x physical drive before a raid failure.

- Because parity needs to be calculated raid 5 is slower then raid0, but raid 5 is much safer.

- RAID 5 requires at least 3x hard disks in which one(1) full disk of space is used for parity.

- raid6 or raidz2 distributes parity along with the data

- can lose 2x physical drives instead of just one like raid 5.

- Because more parity needs to be calculated raid 6 is slower then raid5, but raid6 is safer.

- raidz2 requires at least 4x disks and will use two(2) disks of space for parity.

- raid7 or raidz3 distributes parity just like raid 5 and 6

- but raid7 can lose 3x physical drives.

- Since triple parity needs to be calculated raid 7 is slower then raid5 and raid 6, but raid 7 is the safest of the three.

- raidz3 requires at least 4x, but should be used with no less then 5x disks, of which 3x disks of space are used for parity.

- raid10 or raid1+0 is mirroring and striping of data.

- The simplest raid10 array has 4x disks and consists of two pairs of mirrors.

- Disk 1 and 2 are mirrors and separately disk 3 and 4 are another mirror.

- Data is then striped (think raid0) across both mirrors.

- One can lose one drive in each mirror and the data is still safe.

- One can not lose both drives which make up one mirror, for example drives 1 and 2 can not be lost at the same time.

- Raid 10 ‘s advantage is reading data is fast.

- The disadvantages are the writes are slow (multiple mirrors) and capacity is low.”

(src, src)
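The capacity side of that quote can be put into numbers. A back-of-the-envelope sketch that only subtracts the parity or mirror share and ignores padding, metadata and other filesystem overhead; the function names are made up for illustration:

```python
def raidz_usable(disks: int, disk_tb: float, parity: int) -> float:
    """Approximate usable TB of one raidz vdev: 'parity' disks' worth is lost."""
    if parity not in (1, 2, 3):
        raise ValueError("parity must be 1, 2 or 3")
    if disks <= parity:
        raise ValueError("need more disks than the parity level")
    return (disks - parity) * disk_tb


def striped_mirrors_usable(disks: int, disk_tb: float) -> float:
    """Approximate usable TB of striped 2-way mirrors (raid10-like)."""
    if disks < 4 or disks % 2:
        raise ValueError("need an even number of disks, at least 4")
    return disks / 2 * disk_tb


# Example: four disks of 4 TB each.
print(raidz_usable(4, 4.0, parity=1))    # 12.0
print(raidz_usable(4, 4.0, parity=2))    # 8.0
print(striped_mirrors_usable(4, 4.0))    # 8.0
```

With four disks, raidz2 and striped mirrors end up with the same usable space; they differ in which combinations of two failed disks they survive and in how they perform.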

ZFS supports SSD/NVMe caching + RAM caching:

more RAM is better than a dedicated SSD/NVMe cache, BUT zfs can do both! which is remarkable.

(the optimum probably being RAM + SSD/NVMe caching)

ubuntu offers zfs as an (experimental) root filesystem option

ZFS & Ubuntu 20.04 LTS

“our ZFS support with ZSys is still experimental.”

https://ubuntu.com/blog/zfs-focus-on-ubuntu-20-04-lts-whats-new

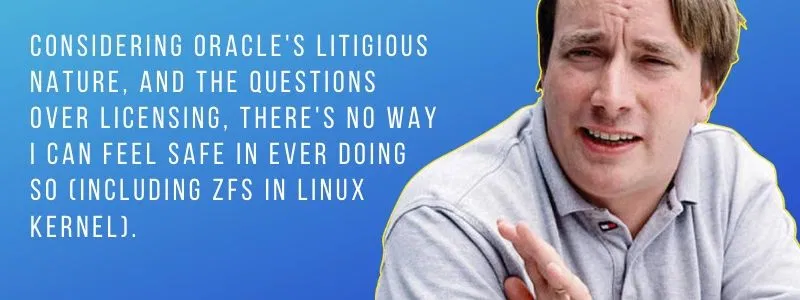

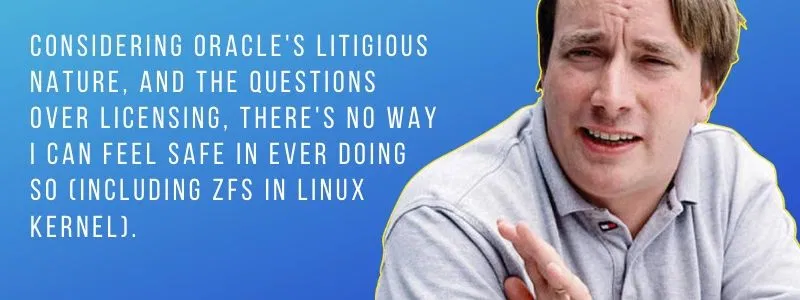

ZFS licence problems/incompatibility with GPL 2.0 #wtf Oracle! again?

Linus: “And honestly, there is no way I can merge any of the ZFS efforts until I get an official letter from Oracle that is signed by their main legal counsel or preferably by Larry Ellison himself that says that yes, it’s ok to do so and treat the end result as GPL’d.” (itsfoss.com)

comment by vagrantprodigy: “Another sad example of Linus letting very limited exposure to something (and very out of date, and frankly, incorrect information about it’s licensing) impact the Linux world as a whole. There are no licensing issues, OPENZFS is maintained, and the performance and reliability is better than the alternatives.” (itsfoss.com)

https://itsfoss.com/linus-torvalds-zfs/

it is Open Source, but not GPL licenced: for Linus, that’s a no go and quite frankly, yes it is a problem.

“this article missed the fact that CDDL was DESIGNED to be incompatible with the GPL” (comment by S O on itsfoss.com)

it can also be called “bait”

“There is always a thing called “in roads”, where it can also be called “bait”.

“The article says a lot in this respect.

“That Microsoft founder Bill Gate comment a long time ago was that “nothing should be for free.”

That too rings out loud, especially in today’s American/European/World of “corporate business practices” where they want what they consider to be their share of things created by others.

Just to be able to take, with not doing any of the real work.

That the basis of the GNU Gnu Pub. License (GPL) 2.0 basically says here it is, free, and the Com. Dev. & Dist.

License (CDDL) 1.0 says use it for free, find our bugs, but we still have options on its use, later on downstream.

..

And nothing really is for free, when it is offered by some businesses, but initial free use is one way to find all the bugs, and then begin charging costs.

And it it has been incorporated into a linux distribution, then the linux distribution could later come to a legal halt, a legal gotcha in a court of law.

In this respect, the article is a good caution to bear in mind, that the differences in licensing can have consequences, later in time.Good article to encourage linux users to also bear in mind, that using any programs that are not GNU Gen. Pub. License (GPL) 2.0 can later on have consequences for use having affect on a lot of people, big time.

That businesses (corportions have long life spans) want to dominate markets with their products, and competition is not wanted.

So, how do you eliminate or hinder the competition?

… Keep Linux free as well as free from legal downstream entanglements.”

(comment by Bruce Lockert on itsfoss.com)

Imagine this: just as with Java, Oracle might decide to change the licence on any day Oracle sees fit, to “cash in” on the ZFS users and demand that they purchase a licence… #wtf Oracle

Guess one is not alone with that thinking: “Linus has nailed the coffin of ZFS! It adds no value to open source and freedom. It rather restricts it. It is a waste of effort. Another attack at open source. Very clever disguised under an obscure license to trap the ordinary user in a payed environment in the future.” (comment by Tuxedo on itsfoss.com)

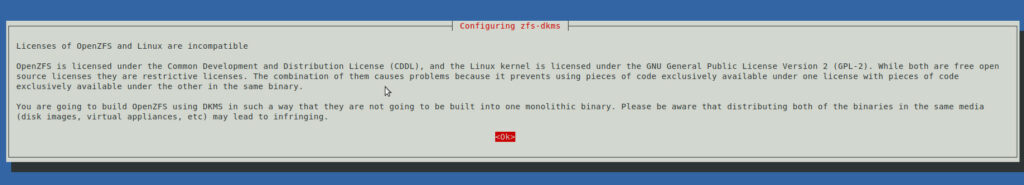

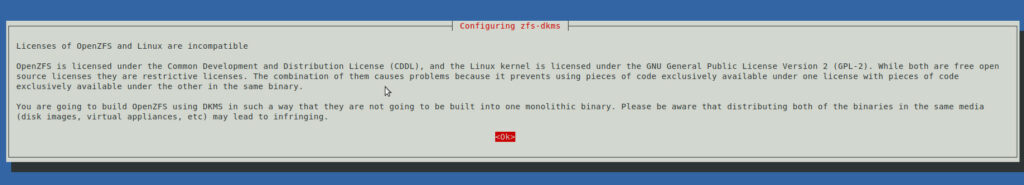

GNU Linux Debian warns during installation:

“Licenses of OpenZFS and Linux are incompatible”

- OpenZFS is licensed under the Common Development and Distribution License (CDDL), and the Linux kernel is licensed under the GNU General Public License Version 2 (GPL-2).

- While both are free open source licenses they are restrictive licenses.

- The combination of them causes problems because it prevents using pieces of code exclusively available under one license with pieces of code exclusively available under the other in the same binary.

- You are going to build OpenZFS using DKMS in such a way that they are not going to be built into one monolithic binary.

- Please be aware that distributing both of the binaries in the same media (disk images, virtual appliances, etc) may lead to infringing.

“You cannot change the license when forking (only the copyright owners can), and with the same license the legal concerns remain the same. So forking is not a solution.” (comment by MestreLion on itsfoss.com)

OpenZFS 2.0

“This effort is fast-forwarding delivery of advances like dataset encryption, major performance improvements, and compatibility with Linux ZFS pools.” (src: truenas.com)

https://arstechnica.com/gadgets/2020/12/openzfs-2-0-release-unifies-linux-bsd-and-adds-tons-of-new-features/

tricky.

of course users can say “haha”  “accidentally deleted millions of files” “no backups” “now snapshots would be great”

or come up with a smart file system that can do snapshots.

how to on GNU Linux Debian 11:

https://openzfs.github.io/openzfs-docs/Getting%20Started/Debian/index.html

https://wiki.debian.org/ZFS

note:

with ext4 it was recommended to put the GNU Linux root filesystem (/) and swap on a dedicated SSD/NVMe (that then regularly backs up to the larger raid10)

but then the user would miss out on the awesome zfs snapshot restore features, which would mean:

- no more fear of updates

- take snapshot before update

- do system update (moving between major versions of Debian 9 -> 10 can be problematic, sometimes it works, sometimes it will not)

- test the system according to list of use cases (“this used to work, this too”)

- if update breaks stuff -> boot from a usb stick -> roll back snapshot (YET TO BE TESTED!)
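The snapshot and rollback steps of that list boil down to two commands, `zfs snapshot` and `zfs rollback`. A minimal sketch that only builds the command lines and does not run anything; the dataset name `rpool/ROOT/debian` and the label `pre-upgrade` are hypothetical, and rolling back a root dataset from a rescue stick is just as untested here as stated above:

```python
import shlex


def upgrade_plan(dataset: str, label: str) -> dict[str, list[str]]:
    """Build (but do not run) the zfs commands around a risky upgrade."""
    snapshot = f"{dataset}@{label}"
    return {
        "before_upgrade": ["zfs", "snapshot", snapshot],
        "if_upgrade_breaks": ["zfs", "rollback", snapshot],
    }


# "rpool/ROOT/debian" and "pre-upgrade" are hypothetical names.
plan = upgrade_plan("rpool/ROOT/debian", "pre-upgrade")
for step, command in plan.items():
    print(f"{step}: {shlex.join(command)}")
```

Note that a plain `zfs rollback` goes back to the most recent snapshot, which fits this workflow as long as no further snapshot is taken between the upgrade and the rollback.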

Links:

https://openzfs.org/wiki/Main_Page

Originally posted at: https://dwaves.de/2022/01/20/the-most-controversial-filesytem-in-the-known-universe-zfs-so-ext4-is-faster-on-single-disk-systems-btrfs-with-snapshots-but-without-the-zfs-licensing-problems/