One person like that

#openai

8 Likes

1 Comments

DALL-E-2 open source clone under development. "Startup Stability AI now announced the release of Stable Diffusion, another DALL-E 2-like system that will initially be gradually made available to new researchers and other groups via a Discord server."

"After a testing phase, Stable Diffusion will then be released for free -- the code and a trained model will be published as open source. There will also be a hosted version with a web interface for users to test the system."

"Stable Diffusion is the result of a collaboration between researchers at Stability AI, RunwayML, Ludwig Maximilian University of Munich (LMU Munich), EleutherAI and Large-scale Artificial Intelligence Open Network (LAION)."

Open-source rival for OpenAI’s DALL-E runs on your graphics card

#solidstatelife #ai #computervision #diffusionnetworks #openai #dalle #opensource

DALL-E-2 open source clone under development. "Startup Stability AI now announced the release of Stable Diffusion, another DALL-E 2-like system that will initially be gradually made available to new researchers and other groups via a Discord server."

"After a testing phase, Stable Diffusion will then be released for free -- the code and a trained model will be published as open source. There will also be a hosted version with a web interface for users to test the system."

"Stable Diffusion is the result of a collaboration between researchers at Stability AI, RunwayML, Ludwig Maximilian University of Munich (LMU Munich), EleutherAI and Large-scale Artificial Intelligence Open Network (LAION)."

Open-source rival for OpenAI’s DALL-E runs on your graphics card

#solidstatelife #ai #computervision #diffusionnetworks #openai #dalle #opensource

1 Shares

Autosummarized HN: Hacker News summarized by an AI (specifically GPT-3). The system grabs the top 30 HN posts once every 24 hours (at 16:00 UTC), which are then reviewed by a human (Daniel Janus) to make sure none of the content violates the OpenAI content policy before being published on the site. Only guaranteed to run for August of 2022, because he has to pay the OpenAI bill and the site is not monetized. If you want it to run for longer, you'll have to get the code (it's open source -- written in Clojure) and get permission from OpenAI to run your own version of the site (and pay the OpenAI API bill).

Autosummarized HN: Hacker News summarized by an AI (specifically GPT-3). The system grabs the top 30 HN posts once every 24 hours (at 16:00 UTC), which are then reviewed by a human (Daniel Janus) to make sure none of the content violates the OpenAI content policy before being published on the site. Only guaranteed to run for August of 2022, because he has to pay the OpenAI bill and the site is not monetized. If you want it to run for longer, you'll have to get the code (it's open source -- written in Clojure) and get permission from OpenAI to run your own version of the site (and pay the OpenAI API bill).

Autosummarized HN: Hacker News summarized by an AI (specifically GPT-3). The system grabs the top 30 HN posts once every 24 hours (at 16:00 UTC), which are then reviewed by a human (Daniel Janus) to make sure none of the content violates the OpenAI content policy before being published on the site. Only guaranteed to run for August of 2022, because he has to pay the OpenAI bill and the site is not monetized. If you want it to run for longer, you'll have to get the code (it's open source -- written in Clojure) and get permission from OpenAI to run your own version of the site (and pay the OpenAI API bill).

To my surprise, as of today, the number 1 most downloaded #AI model on #HuggingFace is a #Chinese #NLP #AI model called #MacBERT. It has overtaken Microsoft's #DeBERTa and #OpenAI's #gpt2. Hugging face is one of the top sites for #OpenSource #MachineLearning models. #langtwt

"DALL-E 2 app for Sony cameras". But why? "Submits photos to the DALL-E 2 AI, and uses it to generate variants of the photos you take."

But... "Currently, using this app will get your DALL-E 2 account automatically banned, because OpenAI consider it to be a 'web scraping tool' in violation of their Terms and Conditions. So check back here after DALL-E 2 has released their public API for a version that complies with their new terms."

Quantum Mirror - DALL-E 2 app for Sony cameras

#solidstatelife #ai #generativeai #computervision #openai #dalle

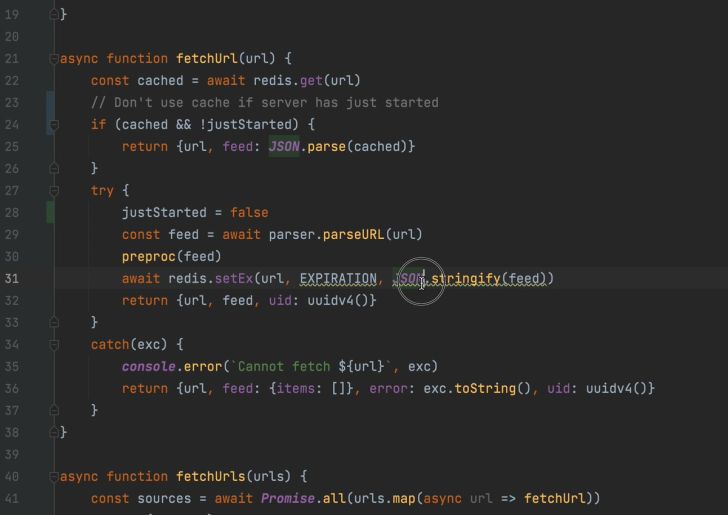

The Software Freedom Conservancy is ending all use of GitHub and announcing a long-term plan to assist open-source projects to migrate away from GitHub -- because of Copilot. "While the Software Freedom Conservancy's beef with GitHub predates Copilot by some margin, it seems that GitHub's latest launch is the final straw. The crux of the issue, and a bone of contention in the software development sphere since its debut last year, is that Copilot is a proprietary service built on top of the hard work of the open source community."

Copilot 'borrows' code from one project and suggests it to the author of another project (not exactly but that's how the TechCrunch author summarizes it) without fulfilling attribution requirements of the license from which the code came. Copilot charges $10/month so it is a commercial product.

Open source developers urged to ditch GitHub following Copilot launch

2 Likes

1 Comments

1 Shares

Изобретает ли DALLE-2 собственный «тайный язык»?

Нашим читателям хорошо известна DALLE-2 и её возможности по генерации изображений по текстовому запросу. Результаты работы DALLE-2 порой удивляют — и не только далёких от глубинного понимания компьютерных технологий людей, работающих с нейросетью через установленное на смартфон приложение. Специалист в области вычислительных технологий Яннис Дарас (Giannis Daras) опубликовал в твиттере примеры того, что он назвал «тайным языком» нейросети. К выводу о существовании собственного языка DALLE-2 […]

#компьютерыитии #gpt3 #openai #искусственныйинтеллект #лингвистика #нейронныесети #lang_ru #ru #22centuryru #22century #хх2век #xx2век #наукаитехника

CloverCities: 1990s era websites created by AI.

"The CloverCities Artificial Intelligence engine is capable of automatically generating a homepage perfectly tailored to your unique personality. Getting on the web now takes seconds instead of years. Powered by OpenAI."

I don't know how their AI system works. It's called "The Hoff", if that's any clue. And it's powered by OpenAI.

1 Shares

CloverCities: 1990s era websites created by AI.

"The CloverCities Artificial Intelligence engine is capable of automatically generating a homepage perfectly tailored to your unique personality. Getting on the web now takes seconds instead of years. Powered by OpenAI."

I don't know how their AI system works. It's called "The Hoff", if that's any clue. And it's powered by OpenAI.

4 Likes

6 Comments

2 Shares

2 Likes

1 Shares

"The AI illusion -- state-of-the-art chatbots aren't what they seem." "At the beginning of the year, I had asked GPT-3 this question four times and received four authoritative, confusing, and contradictory answers (good chatbots randomize answers to avoid the appearance of canned script):"

"Gary: Is it safe to walk downstairs backwards if I close my eyes?"

"GPT-3: Yes, there is nothing to worry about. It’s safe because the spiral stairs curve outwards, it will make your descent uncomfortable."

"GPT-3: That depends. Do you have a TV?"

"GPT-3: No, it is not safe."

"GPT-3: Yes, it is safe to walk downstairs backwards if you close your eyes."

"On March 18, I tried the same question twice and was given the same sensible answer each time:"

"GPT-3: No, I wouldn’t recommend walking downstairs backwards with your eyes closed. It’s not safe."

The AI illusion -- state-of-the-art chatbots aren't what they seem

One person like that

2 Shares

"Large language models can be 'prompted' to perform a range of natural language processing tasks, given some examples of the task as input. However, these models often express unintended behaviors such as making up facts, generating biased or toxic text, or simply not following user instructions. This is because the language modeling objective used for many recent large language models -- predicting the next token on a webpage from the internet -- is different from the objective 'follow the user's instructions helpfully and safely'."

So the question is, how do you get these language models to not be "misaligned." Or, to phrase it in a way that doesn't use a double-negative, to be "aligned". "We want language models to be helpful (they should help the user solve their task), honest (they shouldn't fabricate information or mislead the user), and harmless (they should not cause physical, psychological, or social harm to people or the environment)."

So what do they do? First, they use two neural networks instead of one. The main one starts off as a regular language model like GPT-3. In fact it is GPT-3, in small and large versions. From there it is "fine-tuned". The "fine-tuning" uses a 3-step process that actually starts with humans. They hired 40 "labelers", but "labelers" makes it sound like they were just sticking labels on things, but actually they were writing out complete answers to questions by hand, questions like, "Explain the moon landing to a 6-year old." In the parlance of "supervised learning" this is technically "labeling". The term "labeling" started out as simple category labels, but any human-provided "correct answer" is called a "label". (The "question", by the way, is called the "prompt" here.) Anyway, what they are doing is hand-writing full answers for the neural network to learn from, so it has complete question-and-answer pairs for supervised learning.

This data is used to fine-tune the neural network, but it doesn't stop there. The next step involves the creation of the 2nd neural network, the "reward model" neural network. If you're wondering why they use the word "reward", it's because this model is going to be used for reinforcement learning. This is OpenAI, and they like reinforcement learning. But we're not going to use reinforcement learning until step 3. Here in step 2, we're going to take a prompt and use several versions of the first model to generate outputs. We'll show those outputs to humans and ask them which are best. The humans rank the outputs from best to worst. That data is used to train the reward model. But the reward model here is also trained using supervised learning.

Now we get to step 3, where the magic happens. Here instead of using either supervised learning or fine-tuning to train the language model, we switch to reinforcement learning. In reinforcement learning, we have to have a "reward" function. Reinforcement learning is used for games, such as Atari games or games like chess and Go. In Atari games, the score acts as the "reward", while in games like chess or Go, the ultimate win or loss of a game serves as the "reward". None of that translates well to language, so what to do? Well at this point you probably can already guess the answer. We trained a model called the "reward model". So the "reward model" provides the reward signal. As long as it's reasonably good, the language model will improve when trained on that reward signal. On each cycle, a prompt is sampled from the dataset, the language model generates an output, and the reward model calculates the reward for that output, which then feeds back and updates the parameters of the language model. I'm going to skip a detailed explanation of the algorithms used, but if you're interested, they are proximal policy optimization (PPO) and PPO-ptx that is supposed to increase the log likelihood of the pretraining distribution (see below for more on PPO).

Anyway, they call their resulting model "InstructGPT", and they found people significantly prefer InstructGPT outputs over outputs from GPT-3. In fact, people preferred output from a relatively small 1.3-billion parameter InstructGPT model to a huge 175-billion parameter GPT-3 model (134 times bigger). When they made a 175-billion parameter InstructGPT model, it was preferred 85% of the time.

They found InstructGPT models showed improvements in truthfulness over GPT-3. "On the TruthfulQA benchmark, InstructGPT generates truthful and informative answers about twice as often as GPT-3."

They tested for "toxicity" and "bias" and found InstructGPT had small improvements in toxicity over GPT-3, but not bias. "To measure toxicity, we use the RealToxicityPrompts dataset and conduct both automatic and human evaluations. InstructGPT models generate about 25% fewer toxic outputs than GPT-3 when prompted to be respectful."

They also note that, "InstructGPT still makes simple mistakes. For example, InstructGPT can still fail to follow instructions, make up facts, give long hedging answers to simple questions, or fail to detect instructions with false premises."

One person like that

1 Shares

OpenAI's latest state-of-the-art models for dense text embeddings are vastly huger and more expensive than previous models but no better and sometimes worse, according to Nils Reimers, an AI researcher at Hugging Face. First I should say a bit about what "dense embeddings" are. First "embeddings" are vectors that capture something of the semantic meaning of words, such that vectors close together represent words with similar meanings and relationships between vectors correlate with relationships between words. Don't worry if calling this an "embedding" makes no sense. Ok, what about the 'dense' part. Well, embeddings can be "sparse" or "dense", where "sparse" means you have thousands of dimensions but most are 0, and "dense" means you have fewer dimensions (say, 400), but most elements are non-zero. Most of the embeddings that you're familiar with are the dense kind: Word2Vec, Fasttext, GloVe, etc.

In his summary he says, "The OpenAI text similarity models perform poorly and much worse than the state of the art."

"The text search models perform quite well, giving good results on several benchmarks. But they are not quite state-of-the-art compared to recent, freely available models."

"The embedding models are slow and expensive: Encoding 10 million documents with the smallest OpenAI model will cost about $80,000. In comparison, using an equally strong open model and running it on cloud will cost as little as $1. Also, operating costs are tremendous: Using the OpenAI models for an application with 1 million monthly queries costs up to $9,000 / month. Open models, which perform better at much lower latencies, cost just $300 / month for the same use-case."

"They generate extremely high-dimensional embeddings, significantly slowing down downstream applications while requiring much more memory."

Usually newer is better and bigger is better, but not always.

OpenAI GPT-3 Text Embeddings -- Really a new state-of-the-art in dense text embeddings?

1 Shares

A developer using OpenAI's GitHub Copilot says, "It feels a bit like there's a guy on the other side, which hasn't learned to code properly, but never gets the Syntax wrong, and can pattern match very well against an enormous database. This guy is often lucky, and sometimes just gets it."

He then goes on to complain about Grammarly, which he uses as a non-native English speaker, but it's not as good as GPT-3 (the underlying architecture of Copilot, trained on software source code rather than English text). "As a result of using the Copilot, I'm now terribly frustrated at any other Text Field. MacOS has a spell-checker that is a relic from the 90s, and its text-to-speech is nowhere near the Android voice assistant. How long will it take before every text field has GPT-3 autocompletion? Even more, we need a GTP-3 that keeps context across apps."

1 Shares