Goldman Sachs' take on AI: "Gen AI: Too much spend, too little benefit?"

"Tech giants and beyond are set to spend over $1tn on AI capex in coming years, with so far little to show for it. So, will this large spend ever pay off? MIT's Daron Acemoglu and Goldman Sachs' Jim Covello are skeptical, with Acemoglu seeing only limited US economic upside from AI over the next decade and Covello arguing that the technology isn't designed to solve the complex problems that would justify the costs, which may not decline as many expect. But Goldman Sachs' Joseph Briggs, Kash Rangan, and Eric Sheridan remain more optimistic about AI's economic potential and its ability to ultimately generate returns beyond the current 'picks and shovels' phase, even if AI's 'killer application' has yet to emerge. And even if it does, we explore whether the current chips shortage (with Goldman Sachs' Toshiya Hari) and looming power shortage (with Cloverleaf Infrastructure's Brian Janous) will constrain AI growth. But despite these concerns and constraints, we still see room for the AI theme to run, either because AI starts to deliver on its promise, or because bubbles take a long time to burst. Generative AI has the potential to fundamentally change the process of scientific discovery, research and development, innovation, new product and material testing, etc. as well as create new products and platforms. But given the focus and architecture of generative AI technology today, these truly transformative changes won't happen quickly and few -- if any -- will likely occur within the next 10 years. Over this horizon, AI technology will instead primarily increase the efficiency of existing production processes by automating certain tasks or by making workers who perform these tasks more productive."

Some choice quotes (these may seem like a lot but are a small fraction of the 31-page document):

Daron Acemoglu:

"I began with Eloundou et al.'s comprehensive study that found that the combination of generative AI, other AI technology, and computer vision could transform slightly over 20% of value-added tasks in the production process. But that's a timeless prediction. So, I then looked at another study by Thompson et al. on a subset of these technologies -- computer vision -- which estimates that around a quarter of tasks that this technology can perform could be cost-effectively automated within 10 years. If only 23% of exposed tasks are cost effective to automate within the next ten years, this suggests that only 4.6% of all tasks will be impacted by AI. Combining this figure with the 27% average labor cost savings estimates from Noy and Zhang's and Brynjolfsson et al.'s studies implies that total factor productivity effects within the next decade should be no more than 0.66% -- and an even lower 0.53% when adjusting for the complexity of hard-to-learn tasks. And that figure roughly translates into a 0.9% GDP impact over the decade."
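The chain of multiplications in that quote is easy to check by hand. Here is a minimal sketch in Python. The first three inputs come straight from the quote; the `labor_share` factor does not appear in the quote and is my assumption, chosen because it is the kind of factor needed to get from a labor cost saving to a total factor productivity number, and because a value around 0.53 reproduces the quoted 0.66%.

```python
# Inputs taken from the Acemoglu quote above.
exposed_share = 0.20         # share of tasks AI could transform ("slightly over 20%")
cost_effective_share = 0.23  # share of exposed tasks cost-effective to automate in 10 years
labor_cost_savings = 0.27    # average labor cost savings on automated tasks

# ASSUMPTION (not stated in the quote): labor's share of costs in affected tasks.
labor_share = 0.53

# Share of all tasks affected within the decade.
impacted_share = exposed_share * cost_effective_share

# Rough upper bound on the total factor productivity effect.
tfp_effect = impacted_share * labor_cost_savings * labor_share

print(f"tasks impacted: {impacted_share:.1%}")
print(f"TFP effect:     {tfp_effect:.2%}")
```

The point is how quickly the headline number shrinks: each step multiplies by a fraction well below one, so a 20% exposure figure ends up as a sub-1% productivity effect.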

Joseph Briggs:

"We are very sympathetic to Acemoglu's argument that automation of many AI-exposed tasks is not cost effective today, and may not become so even within the next ten years. AI adoption remains very modest outside of the few industries -- including computing and data infrastructure, information services, and motion picture and sound production -- that we estimate will benefit the most, and adoption rates are likely to remain below levels necessary to achieve large aggregate productivity gains for the next few years. This explains why we only raised our US GDP forecast by 0.4pp by the end of our forecast horizon in 2034 (with smaller increases in other countries) when we incorporated an AI boost into our global potential growth forecasts last fall. When stripping out offsetting growth impacts from the partial redirection of capex from other technologies to AI and slower productivity growth in a non-AI counterfactual, this 0.4pp annual figure translates into a 6.1% GDP uplift from AI by 2034 vs. Acemoglu's 0.9% estimate."

"We also disagree with Acemoglu's decision not to incorporate productivity improvements from new tasks and products into his estimates, partly given his questioning of whether AI adoption will lead to labor reallocation and the creation of new tasks."

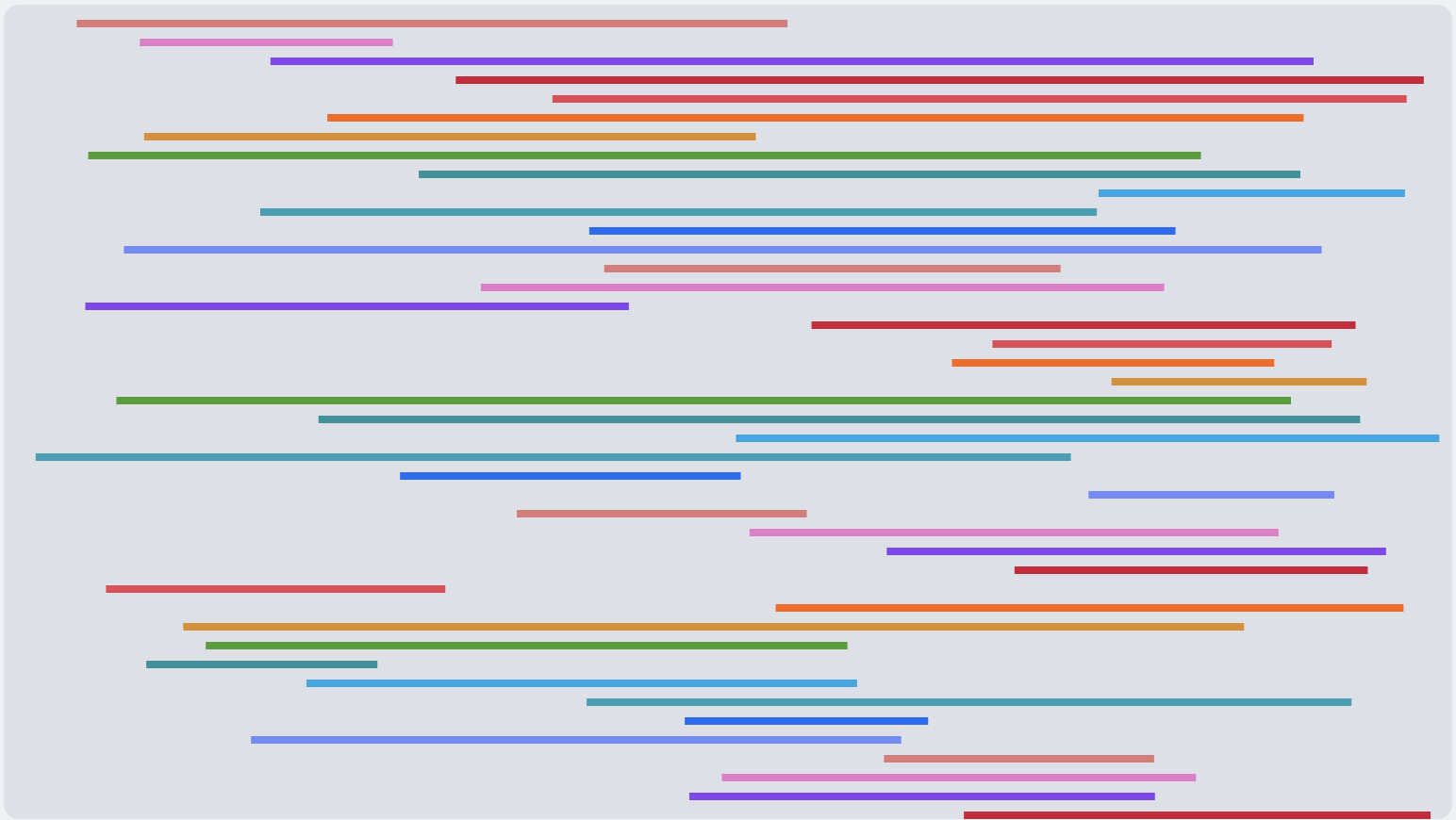

This section has interesting charts and graphs on which industries have been adopting AI and which haven't.

Jim Covello:

"What $1tn problem will AI solve? Replacing low-wage jobs with tremendously costly technology is basically the polar opposite of the prior technology transitions I've witnessed in my thirty years of closely following the tech industry."

Kash Rangan and Eric Sheridan:

"We have yet to identify AI's 'killer application', akin to the Enterprise Resource Planning (ERP) software that was the killer application of the late 1990s compute cycle, the search and e-commerce applications of the 2000-10 tech cycle that achieved massive scale owing to the rise of x86 Linux open-source databases, or cloud applications, which enabled the building of low-cost compute infrastructure at massive scale during the most recent 2010-20 tech cycle."

"Those who argue that this is a phase of irrational exuberance focus on the large amounts of dollars being spent today relative to two previous large capex cycles -- the late 1990s/early 2000s long-haul capacity infrastructure buildout that enabled the development of Web 1.0, or desktop computing, as well as the 2006-2012 Web 2.0 cycle involving elements of spectrum, 5G networking equipment, and smartphone adoption. But such an apples-to-apples comparison is misleading; the more relevant metric is dollars spent vs. company revenues. Cloud computing companies are currently spending over 30% of their cloud revenues on capex, with the vast majority of incremental dollar growth aimed at AI initiatives. For the overall technology industry, these levels are not materially different than those of prior investment cycles that spurred shifts in enterprise and consumer computing habits. And, unlike during the Web 1.0 cycle, investors now have their antenna up for return on capital. They're demanding visibility on how a dollar of capex spending ties back to increased revenues, and punishing companies who can't draw a dotted line between the two."

Brian Janous:

"Utilities are fielding hundreds of requests for huge amounts of power as everyone chases the AI wave, but only a fraction of that demand will ultimately be realized. AEP, one of the largest US electric utility companies, has reportedly received 80-90 gigawatts (GW) of load requests. Only 15 GW of that is likely real because many of the AI projects that companies are currently envisioning will never actually see the light of day. But 15 GW is still massive given that AEP currently owns/operates around 23 GW of generating capacity in the US. And even if overall grid capacity grows by only 2% annually -- which seems like a reasonable forecast -- utilities would still need to add well in excess of 100 GW of peak capacity to a system that currently handles around 800 GW at peak. The increase in power demand will also likely be hyperlocalized, with Northern Virginia, for example, potentially requiring a doubling of grid capacity over the next decade given the concentration of data centers in the area."
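To get a feel for the grid numbers, here is a small sketch of the compounding involved. The 800 GW peak, the 2% annual growth rate, and the AEP figures are from the quote. The time horizons are my assumption, since the quote does not say over how many years the "well in excess of 100 GW" would be added.

```python
def added_capacity_gw(base_gw: float, annual_growth: float, years: int) -> float:
    """Capacity added after compounding annual_growth for the given number of years."""
    return base_gw * ((1 + annual_growth) ** years - 1)

peak_gw = 800.0   # current US peak load, per the quote
growth = 0.02     # 2% annual growth, per the quote

# ASSUMPTION: horizons picked for illustration; the quote gives none.
for years in (5, 6, 10):
    print(f"{years:>2} years: +{added_capacity_gw(peak_gw, growth, years):.0f} GW")

# AEP: likely-real load requests relative to current generating capacity.
aep_ratio = 15 / 23
print(f"AEP likely-real requests vs. current capacity: {aep_ratio:.0%}")
```

Under these assumptions the added capacity passes 100 GW at about year six and keeps climbing from there, which squares with the "well in excess of 100 GW" wording if the horizon is roughly a decade.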

Carly Davenport:

"After stagnating over the last decade, we expect US electricity demand to rise at a 2.4% compound annual growth rate (CAGR) from 2022-2030, with data centers accounting for roughly 90bp of that growth. Indeed, amid AI growth, a broader rise in data demand, and a material slowdown in power efficiency gains, data centers will likely more than double their electricity use by 2030. This implies that the share of total US power demand accounted for by data centers will increase from around 3% currently to 8% by 2030, translating into a 15% CAGR in data center power demand from 2023-2030."
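Two of the figures in that quote can be sanity-checked with a couple of lines. This sketch uses only numbers from the quote; reading "2023-2030" as seven years of compounding is my assumption.

```python
# Figures from the Davenport quote above.
total_cagr_pp = 2.4       # US electricity demand growth, percent per year
data_center_pp = 0.9      # 90bp of that growth attributed to data centers
data_center_cagr = 0.15   # data center power demand CAGR, 2023-2030

# ASSUMPTION: 2023-2030 treated as seven compounding periods.
years = 7

# Share of total demand growth attributable to data centers.
share_of_growth = data_center_pp / total_cagr_pp

# Cumulative growth multiple implied by the quoted CAGR.
growth_multiple = (1 + data_center_cagr) ** years

print(f"data centers' share of demand growth: {share_of_growth:.1%}")
print(f"data center demand multiple by 2030:  {growth_multiple:.2f}x")
```

A multiple above 2 is consistent with the claim that data centers will "more than double" their electricity use by 2030.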

Toshiya Hari, Anmol Makkar, David Balaban:

"AI applications use two types of dynamic random-access memory (DRAM): HBM and DDR SDRAM. HBM is a revolutionary memory technology that stacks multiple DRAM dies -- small blocks of semiconducting material on which integrated circuits are fabricated -- on top of a base logic die, thereby enabling higher levels of performance through more bandwidth when interfacing with a GPU or AI chips more broadly. We expect the HBM market to grow at a ~100% compound annual growth rate (CAGR) over the next few years, from $2.3bn in 2023 to $30.2bn in 2026, as the three incumbent suppliers of DRAM (Samsung, SK Hynix, and Micron) allocate an increasing proportion of their total bit supply to meet the exponential demand growth."

"Despite this ramp-up, HBM demand will likely outstrip supply over this period owing to growing HBM content requirements and major suppliers' supply discipline. We therefore forecast HBM undersupply of 3%/2%/1% in 2024/2025/2026. Indeed, as Nvidia and AMD recently indicated, updated data center GPU product roadmaps suggest that the amount of HBM required per chip will grow on a sustained basis. And lower manufacturing yield rates in HBM than in traditional DRAM given the increased complexity of the stacking process constrains suppliers' ability to increase capacity."
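The HBM market figures above are worth running through the standard CAGR formula. This is a minimal sketch using only the two endpoints from the quote; treating 2023 to 2026 as three compounding periods is my assumption.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1

start_bn = 2.3   # HBM market in 2023, $bn (from the quote)
end_bn = 30.2    # HBM market in 2026, $bn (from the quote)
years = 3        # ASSUMPTION: three compounding periods, 2023 -> 2026

implied = cagr(start_bn, end_bn, years)

# For comparison: where a flat 100% CAGR (doubling every year) would land.
doubling_end_bn = start_bn * 2 ** years

print(f"implied CAGR from endpoints: {implied:.0%}")
print(f"2026 market at exactly 100% CAGR: ${doubling_end_bn:.1f}bn")
```

On these endpoints the implied rate comes out noticeably above 100%, around 136%, so the "~100%" in the quote reads as a loose round number or is computed over a different window than the one assumed here.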

"The other key supply bottleneck is a specific form of advanced packaging known as CoWoS, a 2.5-dimensional wafer-level multi-chip packaging technology that incorporates multiple dies side-by-side on a silicon interposer to achieve better interconnect density and performance for high-performance computing (HPC) applications. This advanced packaging capacity has been in short supply since the emergence of ChatGPT in late 2022."

"We outline four phases of the AI trade. 'Phase 1', which kicked off in early 2023, focuses on Nvidia, the clearest near-term AI beneficiary. 'Phase 2' focuses on AI infrastructure, including semiconductor firms more broadly, cloud providers, data center REITs, hardware and equipment companies, security software stocks, and utilities companies. 'Phase 3' focuses on companies with business models that can easily incorporate AI into their product offerings to boost revenues, primarily software and IT services. 'Phase 4' includes companies with the biggest potential earnings boost from widespread AI adoption and productivity gains."

A summary of key forecasts is on page 25.


#solidstatelife #ai #investment #goldmansachs