"Intel unveiled a new roadmap that includes a new 14A node, the industry's first to use High-NA EUV, here at its Intel Foundry Services Direct Connect 2024 event."

Intel no longer uses "nanometers" to name its "process nodes", so I don't know exactly what "14A" means, but there is a quote somewhere of Intel CEO Pat Gelsinger saying 14A produces "1.4 nanometer technology." Maybe "14A" means 14 angstroms, since 14 angstroms is 1.4 nanometers.

The other term in there is "High-NA EUV". "NA" stands for "numerical aperture". But to understand the significance of that we have to take a few steps back.

The company that makes the lithography equipment in question is ASML (Advanced Semiconductor Materials Lithography).

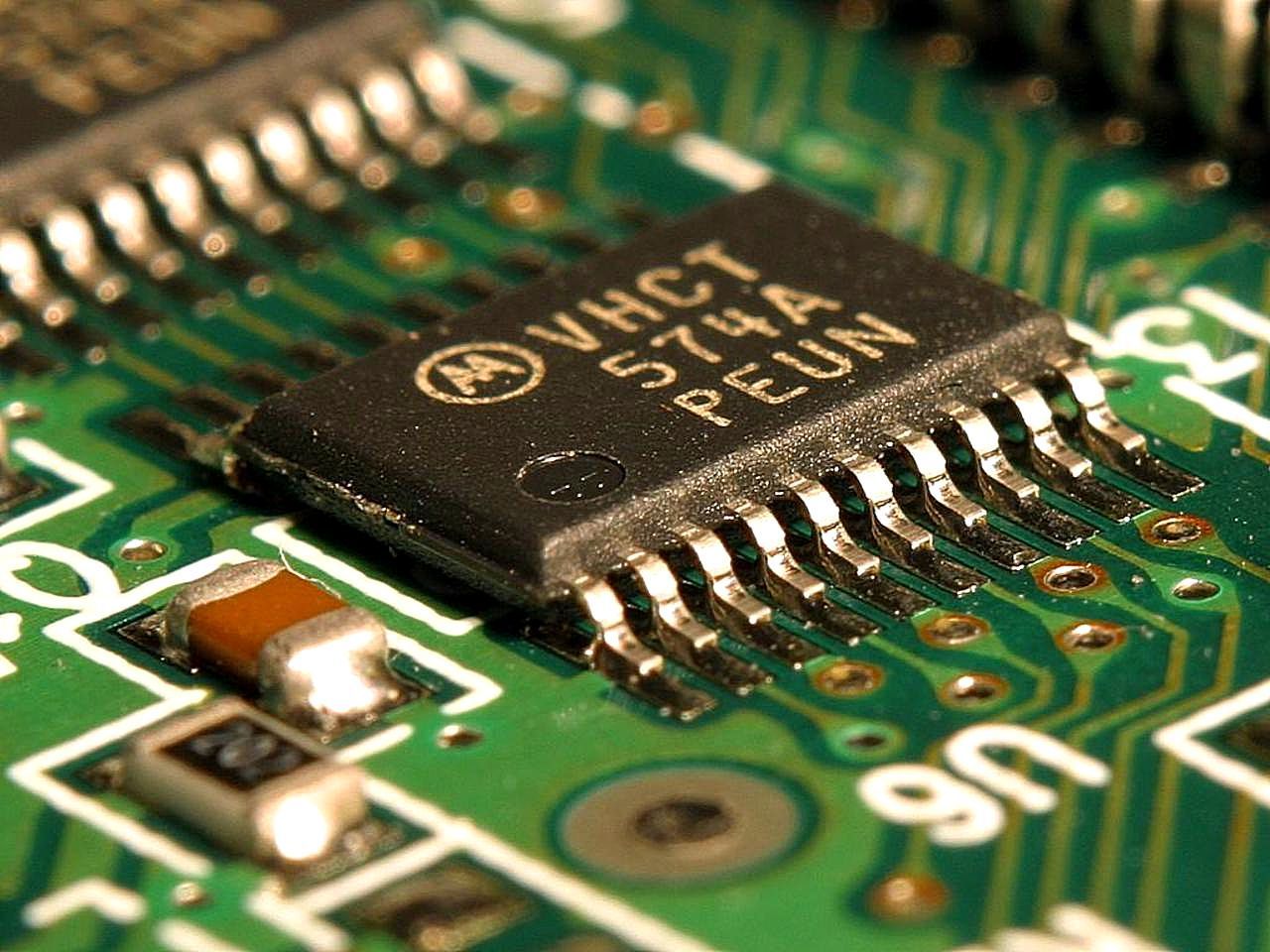

Chips are made through a process called photolithography, which involves shining light through a chip design in such a way that it is miniaturized, and through a process using a lot of complicated chemistry, that pattern can be etched into the surface of the silicon and turned into an electronic circuit. These circuits have gotten so small that visible light has wavelengths too big to make the chip. Chipmakers predictably went to ultraviolet light, which has shorter wavelengths. That worked for a time, but suddenly a problem came up, which is that air was opaque to the wavelengths they wanted to use.

We think of air as transparent, and for the visible wavelengths that our eyes use, it is pretty much perfectly transparent. But it is not transparent at all wavelengths. At certain ultraviolet wavelengths, it's opaque like black smoke.

This is why the semiconductor industry had to make the sudden jump from using lasers that emit light at 193 nanometers to light sources that emit at 13.5 nanometers. (13.5 was chosen because people just happened to know how to make light at that wavelength with a laser-driven tin plasma.) Jumping the chasm from 193 to 13.5 jumps across the wavelengths where air is opaque. 193 has been called "deep ultraviolet", or DUV. 13.5 is called "extreme ultraviolet", or EUV. So whenever you see "EUV", which we see here in the phrase "High-NA EUV", that's what it's talking about.

Making this jump required rethinking all the optics involved in making chips. Mainly this involved replacing all the lenses with mirrors. Turns out at 13.5 nanometers, it's easier to do optics with reflective mirrors than transparent lenses.

Besides decreasing the wavelength (and increasing the frequency) of the light, what else can be done?

It turns out there are two primary things that determine the limit of the size you can etch: the light wavelength and the numerical aperture. There are some additional factors that have to do with the chemistry you're using for the photoresists and so forth, but we'll not concern ourselves with those factors at the moment.

So what is numerical aperture? If you're a photographer, you probably already know, but it has to do with the angle at which a lens can collect light.

"The numerical aperture of an optical system such as an objective lens is defined by:

NA = n sin(theta)

where n is the index of refraction of the medium in which the lens is working (1.00 for air, 1.33 for pure water, and typically 1.52 for immersion oil), and theta is the half-angle of the maximum cone of light that can enter or exit the lens."

As for "the medium in which the lens is working", note that ASML used water immersion with deep ultraviolet (193 nanometer light and higher) to achieve an NA greater than 1. This hasn't been done for extreme ultraviolet (13.5 nanometer light).

The increase in numerical aperture that ASML has recently accomplished, and that Intel is announcing they are using, is an increase from 0.33 to 0.55. (Numerical aperture is a dimensionless number.)
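To get a feel for what those two numbers mean geometrically, here is a minimal sketch that inverts the quoted formula NA = n sin(theta) to recover the half-angle of the light cone. The function name `half_angle_deg` is made up for illustration, and n = 1.00 (the value the quote gives for air) is an assumption about the medium.

```python
import math


def half_angle_deg(numerical_aperture: float, refractive_index: float = 1.0) -> float:
    """Invert NA = n * sin(theta) and return theta in degrees.

    refractive_index defaults to 1.0, an assumption for this sketch.
    """
    ratio = numerical_aperture / refractive_index
    if not 0.0 <= ratio <= 1.0:
        # sin(theta) cannot exceed 1, so NA can never exceed n.
        raise ValueError("NA must be between 0 and the refractive index")
    return math.degrees(math.asin(ratio))


if __name__ == "__main__":
    for na in (0.33, 0.55):
        print(f"NA {na}: half-angle of about {half_angle_deg(na):.1f} degrees")
```

Under these assumptions the half-angle goes from roughly 19 degrees to roughly 33 degrees. The range check also shows why an NA greater than 1 needs a medium with n greater than 1, which is what water immersion provided for deep ultraviolet.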

How did ASML achieve this increase? Their page on "5 things you should know about High NA EUV lithography" (link below) gives a clue. One of the 5 things is, "larger, anamorphic optics for sharper imaging".

The page refers to "EXE" and "NXE". These refer to ASML's own equipment. NXE systems have a numerical aperture of 0.33, but with the EXE systems, ASML has increased it to 0.55.
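As a rough illustration of how wavelength and numerical aperture together set the printable feature size, here is a minimal sketch using the commonly cited Rayleigh-style scaling (feature size is about k1 times wavelength divided by NA). The function name, the k1 value of 0.3 (standing in for the photoresist and process factors set aside earlier), and the NA of 1.35 for immersion DUV are assumptions for illustration, not figures from ASML or Intel.

```python
def min_feature_nm(wavelength_nm: float, numerical_aperture: float, k1: float = 0.3) -> float:
    """Rayleigh-style estimate: k1 * wavelength / NA, in nanometers.

    k1 lumps together resist chemistry and process tricks; 0.3 is an
    assumed, illustrative value.
    """
    if numerical_aperture <= 0:
        raise ValueError("numerical aperture must be positive")
    return k1 * wavelength_nm / numerical_aperture


# (wavelength in nm, numerical aperture). The 1.35 for immersion DUV is assumed.
SYSTEMS = {
    "DUV immersion, 193 nm": (193.0, 1.35),
    "EUV (NXE), 13.5 nm, NA 0.33": (13.5, 0.33),
    "High-NA EUV (EXE), 13.5 nm, NA 0.55": (13.5, 0.55),
}

if __name__ == "__main__":
    for name, (wavelength, na) in SYSTEMS.items():
        print(f"{name}: about {min_feature_nm(wavelength, na):.1f} nm")
```

Whatever k1 you pick, it cancels out when comparing the two EUV systems: going from 0.33 to 0.55 shrinks the estimated feature size by a factor of 0.55 / 0.33, or about 1.67.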

"Implementing this increase in NA meant using bigger mirrors. But the bigger mirrors increase the angle at which light hit the reticle, which has the pattern to be printed."

You're probably not familiar with the term "reticle". Here the meaning is different from normal optics. In normal optics, it refers to a scale that you might see in a microscope. But here, it has to do with the fact that chips are no longer manufactured with the entire pattern for the whole wafer all in one shot. Instead, a pattern for only a small portion of the wafer is used at a time, and then stepper motors move the wafer and the process is repeated. This small portion of the pattern that is used at a time is called the "reticle".

"At the larger angle the reticle loses its reflectivity, so the pattern can't be transferred to the wafer. This issue could have been addressed by shrinking the pattern by 8x rather than the 4x used in NXE systems, but that would have required chipmakers to switch to larger reticles."

"Instead, the EXE uses an ingenious design: anamorphic optics. Rather than uniformly shrinking the pattern being printed, the system's mirrors demagnify it by 4x in one direction and 8x in the other. That solution reduced the angle at which the light hit the reticle and avoided the reflection issue. Importantly, it also minimized the new technology's impact on the semiconductor ecosystem by allowing chipmakers to continue using traditionally sized reticles."

Intel announces new roadmap at IFS Direct Connect 2024: New 14A node, Clearwater Forest taped-in, five nodes in four years remains on track

#solidstatelife #mooreslaw #semiconductors