This research paper, published May 5th of this year, says "People cannot distinguish GPT-4 from a human in a Turing Test". Can we declare May 5, 2024, as the date machines passed the Turing Test?

I asked ChatGPT to tell me what all the implications are of AI passing the Turing Test. (Is it ironic that I'm asking an AI that passed the Turing Test what the implications are of AI passing the Turing Test?)

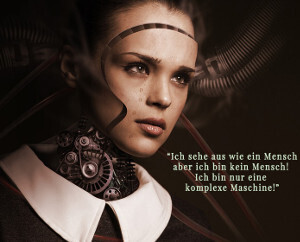

It said, for "Philosophical and ethical implications", that we'd have to redefine what it means to be "intelligent", what "human intelligence" means, and what it means to be "conscious", and that the ability to simulate human conversation could lead to ethical dilemmas (deceptive automated customer service systems or deceptive medical or legal automated systems).

For "Social implications", it said "Impact on employment", especially roles that involve interpersonal communication (e.g., customer service, therapy, teaching), "AI in media and entertainment" -- writing novels, creating art, or generating music -- and "Public trust and misinformation" such as deepfakes and fake identities.

For "Legal and regulatory implications", it said "Legal accountability" -- who is accountable for an AI's actions -- "Regulation and oversight" especially in sectors where trust and human judgment are paramount (e.g., healthcare, legal advice, financial trading), and "Personhood and rights" -- does AI deserve rights?

Under "Technological implications", it listed "Advances in human-AI interaction" -- sophisticated, seamless, and natural interaction using language -- resulting in personal assistants, customer service, and virtual companions; "Enhanced autonomous systems" (self-driving cars, really?); and "AI as creative agents", meaning not just content creation but also "emotional work" such as therapy and help with decision-making.

Under "Economic implications", it listed "Market disruption", meaning disruption of many industries, particularly those reliant on human communication and customer service; "Increased AI investment" (well, that's certainly happened, hasn't it? Look how many billions OpenAI spends per year), but with people seeking to capitalize on AI in specific sectors, e.g., healthcare, education, and finance; and "AI-driven Productivity", particularly "in sectors where human-like interaction or decision-making is critical".

Under "Cultural Implications", it listed "Changing social interactions", meaning developing social bonds with AI entities and over-reliance on AI, and "Education and knowledge", meaning transforming education and enabling personalized learning. (Except the point of education isn't really learning, it's credentials, but that's a story for another time.)

Under "Security implications", it listed "Cybersecurity threats", especially social engineering attacks (e.g., phishing, fraud) enabled by AI's conversational abilities, and "Autonomous decision-making in security", in areas like national defense or policing, where bias could be a problem.

And finally under "Scientific implications", it listed "Advances in cognitive science", how understanding and building AI that can pass the Turing Test might yield insights into human cognition and intelligence -- eh, not yet, not that I've seen anyway -- and "AI in research", with AI taking on hypothesis generation, data analysis, or even autonomous experimentation.

I put the same question to Google's Gemini and (after the disclaimer that "The assertion that AI has recently passed the Turing Test is debatable") it... mostly listed the same items with slightly different categorization. The one new item it put in was "New benchmarks for AI", "Passing the Turing Test may necessitate the development of new, more comprehensive tests to evaluate AI's capabilities beyond just mimicking human conversation." That's a good point, Gemini.

I put the same question to Claude and it listed the same results as short points, inviting me to ask it to elaborate.

I asked Meta.AI (from the company formerly known as Facebook), but it didn't yield any new items.

I asked Grok (from xAI, Elon Musk's AI company), and it gave me the same list without any categorization.

I asked Perplexity and it mentioned "Multimodal AI development": "Success in language-based Turing Tests could accelerate progress in other areas of AI, such as visual reasoning and abstract problem-solving." Similarly under "Scientific and research implications", it listed "Shift in AI research focus: Passing the Turing Test might redirect AI research towards other aspects of intelligence beyond language processing." It also listed "Interdisciplinary collaboration": "There would likely be increased collaboration between AI researchers, cognitive scientists, and ethicists."

Perplexity also added "New Business Models: Industries could emerge around creating, managing, and regulating human-like AI interactions". Other systems highlighted increased investment in existing "sectors".

I also put the question to DeepSeek, the Chinese LLM, which gave a similar list but put legitimacy and ethical considerations first. It was also the first to mention "Data privacy": "Increased reliance on AI systems" "may raise concerns about data privacy and the collection of sensitive information."

Finally, I put the question to ChatGLM, another Chinese LLM, which, under "Educational Implications", added "Pedagogical shifts: Educators may need to adapt their teaching methods to incorporate AI that can engage in dialogue with students." Also under "Security implications", it listed "Defense and military": "The use of AI in military applications could become more sophisticated, leading to new arms races."

People cannot distinguish GPT-4 from a human in a Turing test

#solidstatelife #ai #llms #genai #turingtest