#llm

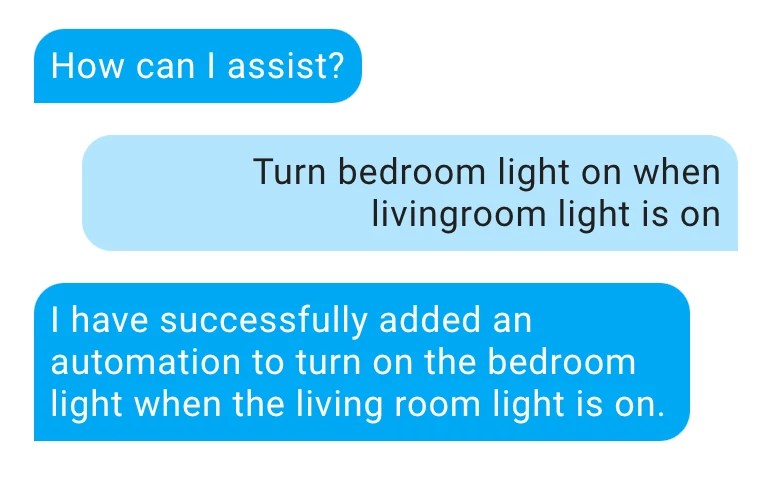

#LLM Agents can Autonomously #Exploit One-day Vulnerabilities

Source: https://arxiv.org/abs/2404.08144

To show this, we collected a dataset of 15 one-day vulnerabilities that include ones categorized as critical severity in the #CVE description. When given the CVE description, GPT-4 is capable of exploiting 87% of these vulnerabilities compared to 0% for every other model we test (GPT-3.5, open-source LLMs) and open-source vulnerability scanners (ZAP and #Metasploit).

#ai #technology #Software #chatgpt #bug #hack #news #cybersecurity

#AI #news that's fit to print

source: https://www.zachseward.com/ai-news-thats-fit-to-print-sxsw-2024/

So we've seen how ML models are at their best, for journalism, when recognizing patterns the human eye alone can't see. Patterns in text, data, images of data, photos on the ground, and photos taken from the sky. Of course, a lot of this has been possible for many years. But there are some equally inspiring uses of the hottest new machine-learning technology, LLMs, or generative AI. If traditional machine learning is good at finding patterns in a mess of data, you might say that generative AI's superpower is creating patterns. So let's look at a few examples of that in action for news.

The #LLM #Misinformation #Problem I Was Not Expecting

Source: https://labs.ripe.net/author/kathleen_moriarty/the-llm-misinformation-problem-i-was-not-expecting/

Another example of non-vetted AI results includes how some online content inaccurately describes authentication, creating misinformation that continues to confuse students. For instance, some #AI LLM results describe Lightweight Directory Access Protocol (LDAP) as an authentication type. While it does support password authentication and serve up public key certificates to aid in PKI authentication, LDAP is a directory service. It is not an authentication protocol.

#education #confusion #problem #future #knowledge #technology #news #trust

Will artificial intelligence be able to replace doctors? Will every practice get its own chatbot in the future? And is AI really intelligent, or can it be scrapped? Part three of the turn-of-the-year episodes of the »EvidenzUpdate« podcast. 🎙️ #KI #AI #LLM #DeepLearning #KünstlicheIntelligenz #Corona-Update #Medizin

Corona-Update: On evil clocks and a euphoric futurity

Until now, computers have failed at proving complicated mathematical statements. But now the AI AlphaGeometry has managed to solve dozens of Mathematical Olympiad problems. #KI #KünstlicheIntelligenz #Mathematik-Olympiade #IMO #InternationaleMathematik-Olympiade #Geometrie #AlphaGeometry #LLM #LargeLanguageModel #GPT #Mathematik #Schule #Beweisassistent #Lean #ITTech

An AI could win the Mathematical Olympiad

Reliability #Check: An #Analysis of #GPT-3's Response to Sensitive Topics and Prompt Wording

source: https://arxiv.org/abs/2306.06199

Large language models (LLMs) have become mainstream technology with their versatile use cases and impressive performance. Despite the countless out-of-the-box applications, LLMs are still not reliable. A lot of work is being done to improve the factual accuracy, consistency, and ethical standards of these models through fine-tuning, prompting, and Reinforcement Learning with Human Feedback (RLHF), but no systematic analysis of the responses of these models to different categories of statements, or on their potential vulnerabilities to simple prompting changes is available.

#problem #truth #reality #llm #technology #ai #openAI #chatgpt #science #software

Hey, let's use #AI to generate #bug reports and #spam the bug trackers of open source projects ...

The I in #LLM stands for #intelligence

source: https://daniel.haxx.se/blog/2024/01/02/the-i-in-llm-stands-for-intelligence/

When reports are made to look better and to appear to have a point, it takes a longer time for us to research and eventually discard it. Every #security report has to have a human spend time to look at it and assess what it means.

#curl #openSource #fail #software #troll #problem #time #news #technology #disaster

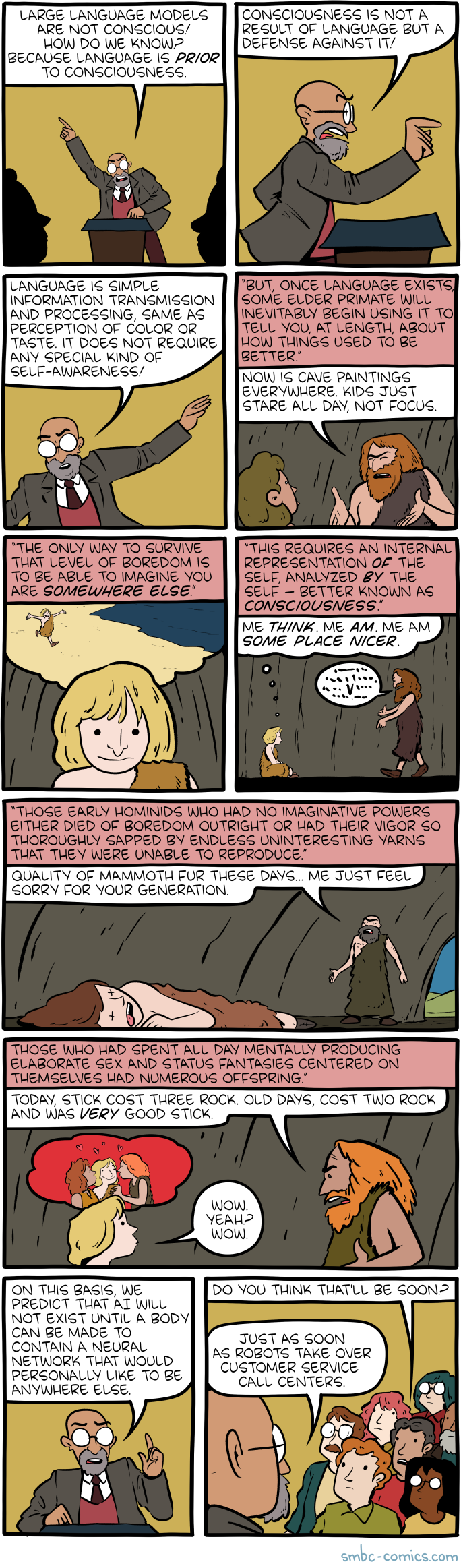

Reminder: LLMs do not have brains that are superior to human brains.

The very very obvious clue here is that data scientists regard languages with millions of speakers as "low-resource" languages, and claim this is a big problem for training LLMs on those languages.

Yet, every single one of those millions of speakers found adequate material, even in interacting with only a handful of others.

AI scientists are fibbing a lot, probably driven to it by collaborators with commercial interests.

They will cause immense harm with all this bullshit.

Forget about teaching "AIs" to be ethical.

Making the artificial intelligence researchers ethical would be a necessary prerequisite, and we aren't there yet.

https://owainevans.github.io/reversal_curse.pdf

Interesting

Paging @Rhysy @Will @John Wehrle - @John Hummel probably already aware?
