High-Level Steps to Migrate My Docker Hosting to a Different Hosting Service

The planning took a lot longer than the actual move, and the downtime for my main blog was only around 10 minutes. This has also inspired me to document the steps I took, for my own future reference. Many of the videos and guides I watched dealt with only a single aspect of the migration, such as backing up and restoring Docker volumes.

I’m not going to make this a detailed step-by-step guide, as everyone’s situation is different, but I hope this conceptual overview, with some details and links, will help others. I’m not sure yet whether I’ll do an explainer video, but maybe this post will help me make up my mind.

My hosting environment is a hosted VPS running Ubuntu Linux with Docker and Portainer, which host various web services, each in its own Docker container with related Docker volumes for persistent storage. One of those containers is an Nginx Proxy Manager reverse proxy, which routes incoming requests to the correct web service. There is also an OpenVPN container that lets me authenticate and drop into the LAN environment to do maintenance. DNS (resolving the URL for each service) is handled through Cloudflare’s free service, which points each record at the server’s main public IP address. The theory is that as soon as a service has been backed up, restored on the new server, and tested, I update the IP address in DNS to point to the new server, and visitors are routed to it almost immediately without noticing the difference.
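Before changing the DNS record, one way to do the testing part is to ask the new server for a site directly while DNS still points at the old one. This is a minimal sketch, assuming HTTPS and that Nginx Proxy Manager on the new server already holds the site’s certificate; the function names, hostname, and IP are placeholders, while `--resolve` is a standard curl option that pins a hostname to an address for that one request:

```shell
# Hypothetical helper: build the HOST:PORT:ADDRESS value curl --resolve expects.
# HTTPS (port 443) is assumed.
resolve_arg() {
  printf '%s:443:%s\n' "$1" "$2"
}

# Hypothetical helper: request https://HOST/ from NEW_IP instead of whatever
# DNS currently returns, and print only the HTTP status code.
check_on_new_server() {
  local host="$1" new_ip="$2"
  # -sS: quiet but still show errors; -o /dev/null: discard the page body
  curl -sS -o /dev/null -w '%{http_code}\n' \
    --resolve "$(resolve_arg "$host" "$new_ip")" "https://$host/"
}
# Usage (placeholder host and IP): check_on_new_server blog.example.com 203.0.113.10
```

Pinning the hostname, rather than browsing to the bare IP, means the reverse proxy sees the real hostname and routes the request the same way it will after the DNS switch.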

The high-level steps one needs to perform are:

Check some details of your existing server environment, such as:

- what Linux users are in use apart from root – I had a user Soft, and there was also a www-data user

- what firewall ports are open

- what cron jobs are running

- what Docker networks exist, e.g. I have mysql-net created to share a common database

- which containers use the shared database, as I wanted to restore them together straight after the database was migrated (they need the database to function)

- which volumes are attached to which containers, and what user file permissions they have (I did not need this because I used Docker-Backup)

- Note down the container ID for each container. This is needed for the Docker-Backup app, and also serves as a checklist as to which have been restored.
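Most of this checklist can be gathered in one go. A rough sketch, assuming Ubuntu with ufw as the firewall and that it runs as root on the existing server; `regular_users` and `inventory_server` are hypothetical helper names, and mysql-net is the shared network from this post:

```shell
# Hypothetical helper: list regular login users (UID 1000 and up, excluding nobody).
# System accounts such as www-data (UID 33 on Ubuntu) are not included.
regular_users() {
  awk -F: '$3 >= 1000 && $3 != 65534 {print $1}' "${1:-/etc/passwd}"
}

# Hypothetical helper: print the rest of the checklist for the OLD server.
inventory_server() {
  echo "== Users =="; regular_users
  echo "== Firewall (ufw) =="; ufw status verbose
  echo "== Cron jobs =="
  ls /etc/cron.d /var/spool/cron/crontabs 2>/dev/null
  echo "== Docker networks =="; docker network ls
  echo "== Containers on mysql-net =="
  docker network inspect -f '{{range .Containers}}{{.Name}} {{end}}' mysql-net
  echo "== Containers (ID, name, image) =="
  docker ps -a --format '{{.ID}}  {{.Names}}  {{.Image}}'
  echo "== Mounts per container (volume name, or host path for bind mounts) =="
  for id in $(docker ps -aq); do
    docker inspect -f '{{.Name}}:{{range .Mounts}} {{if .Name}}{{.Name}}{{else}}{{.Source}}{{end}} -> {{.Destination}};{{end}}' "$id"
  done
}
```

Redirecting the output, e.g. `inventory_server > inventory-old-server.txt`, gives a file to tick off container IDs against as each one is restored.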

Perform a Portainer backup inside Portainer – this saves all the Stacks with their configs, so the containers can be recreated on the new server. It does not back up Docker networks or volumes.

Before I started the actual backups, I first set up the new VPS with:

- its OS updated

- the firewall set up with ports 22 (SSH), 80 (HTTP), 443 (HTTPS), 1143 (OpenVPN), and 9000 (temporarily, for Portainer) open, as well as port 81 (temporarily, for NginxPM)

- Fail2Ban installed

- SSH passwordless login configured (public/private key), and then password logins disabled on the new server

- a user Soft created (I forgot to create www-data, but everything still worked)

- Docker installed

- Portainer installed and restored from the backup (the option appears at login when you first start it up), but I did nothing further with it

- the one custom Docker network I was using to share data between my containers created (you can do this in Portainer or from the CLI)
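The new-server preparation above could be scripted roughly like this. It is a sketch under assumptions: Ubuntu with ufw, root access, an sshd_config that includes /etc/ssh/sshd_config.d/*.conf (as current Ubuntu releases do), and Docker’s get.docker.com convenience script. The drop-in file name 00-no-passwords.conf is just an example, while the user soft, port 1143 and mysql-net come from this post:

```shell
# Hypothetical helper: prepare a fresh Ubuntu VPS as root. Review before running.
prepare_new_vps() {
  apt-get update && apt-get upgrade -y

  # Firewall: allow SSH before enabling ufw so the session is not cut off
  ufw allow 22/tcp
  ufw allow 80/tcp
  ufw allow 443/tcp
  ufw allow 1143        # OpenVPN, port as used in this post (tcp and udp)
  ufw allow 9000/tcp    # temporary: Portainer web UI
  ufw allow 81/tcp      # temporary: Nginx Proxy Manager admin UI
  ufw --force enable    # --force skips the interactive confirmation

  apt-get install -y fail2ban

  # Disable password logins ONLY after key-based login works in a second session.
  # sshd keeps the first value it reads, and sshd_config.d files are read in
  # name order, so a "00-" file wins over later drop-ins.
  echo 'PasswordAuthentication no' > /etc/ssh/sshd_config.d/00-no-passwords.conf
  systemctl restart ssh

  adduser --disabled-password --gecos "" soft

  # Docker Engine via Docker's convenience script
  curl -fsSL https://get.docker.com | sh

  # Portainer CE with its HTTP UI on port 9000, data kept in a named volume
  docker volume create portainer_data
  docker run -d -p 9000:9000 --name portainer --restart=always \
    -v /var/run/docker.sock:/var/run/docker.sock \
    -v portainer_data:/data portainer/portainer-ce:latest

  # The shared network for the database and the containers that use it
  docker network create mysql-net
}
```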

Install Go (required for Docker-Backup) and Docker-Backup on both the existing server and the new server.

Stop the containers on the existing server before backing up, to ensure all data is written to files and databases can be safely copied. I started with the stand-alone services that don’t use the shared database, so I could test copying and restoring them on the new server first. My OpenVPN, NginxPM, Wordle, and Glances containers all work without the shared database.

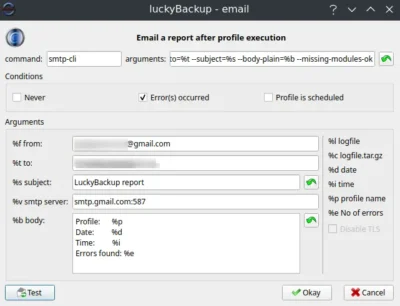
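Stopping a batch can also be done from the CLI rather than Portainer. A minimal sketch; `stop_batch` is a hypothetical helper, and the container names in the usage line are placeholders for the stand-alone services:

```shell
# Hypothetical helper: stop the given containers (names or IDs), then list
# every stopped container so they can be ticked off before backing up.
stop_batch() {
  docker stop "$@" &&
  docker ps -a --filter status=exited --format '{{.ID}}  {{.Names}}'
}
# Usage (placeholder names): stop_batch openvpn nginxpm wordle glances
```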

Run Docker-Backup on the existing server for the first one or two container IDs, from inside /root/docker-backup, with the command `./docker-backup backup --tar <containerID>`. Running as root, this saved each backup as a .tar file in /root/docker-backup. The backup includes all of the container’s volumes as well as their user permissions.
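When doing several containers, a small loop saves retyping the command. This sketch assumes, as in my case, that the docker-backup binary sits in /root/docker-backup and writes each .tar into that working directory; `backup_batch` and the `DB_DIR` override are hypothetical:

```shell
# Hypothetical helper: back up several containers on the OLD server in one go.
# The body runs in a subshell ( ), so the cd does not affect the calling shell.
backup_batch() (
  cd "${DB_DIR:-/root/docker-backup}" || exit 1
  for id in "$@"; do
    # stop at the first failure so nothing is silently skipped
    ./docker-backup backup --tar "$id" || { echo "backup failed: $id" >&2; exit 1; }
  done
  echo "backed up $# container(s) in $PWD"
)
# Usage (placeholder IDs): backup_batch 3f2a1b9c 7d8e6f5a
```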

Log into a terminal on the NEW server and go to /root/docker-backup (we want to pull the backed-up file into the same location on the new server). Copy the backup across with `scp root@191.101.59.143:/root/docker-backup/backedupfile.tar .`, which places a file with the same name in /root/docker-backup on the new server. Note that scp logs into the existing server, so unless the new server’s SSH key is authorised there, the existing server needs to allow password login; you may need to re-enable that for this to work.
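Because a half-copied tar would only show up later as a failed restore, it may be worth comparing checksums after the copy. A sketch to run on the new server; `pull_backup` is hypothetical, `OLD_HOST` is a placeholder for root@ followed by the old server’s IP, and both directories default to /root/docker-backup:

```shell
# Hypothetical helper: copy one backup from the OLD server into the same folder
# on this (NEW) server, then compare SHA-256 checksums on both sides.
pull_backup() (
  file="$1"
  host="${OLD_HOST:-root@old-server}"              # placeholder
  remote="${REMOTE_DIR:-/root/docker-backup}/$file"
  cd "${DB_DIR:-/root/docker-backup}" || exit 1
  scp "$host:$remote" . || exit 1
  remote_sum=$(ssh "$host" sha256sum "$remote" | cut -d' ' -f1)
  local_sum=$(sha256sum "$file" | cut -d' ' -f1)
  if [ "$remote_sum" = "$local_sum" ]; then
    echo "OK: $file"
  else
    echo "MISMATCH: $file" >&2
    exit 1
  fi
)
# Usage: OLD_HOST=root@191.101.59.143 pull_backup backedupfile.tar
```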

Run Docker-Backup with the restore option to recreate the container’s volumes, with all the persistent data and user file permissions, from the backed-up file. The shared database is also just a container volume being restored. You can browse /var/lib/docker/volumes to check that the persistent data has been restored. I did notice two issues, though. First, sometimes a volume such as glances was not recreated with that name, but with a long string of characters instead (I have no idea why), so I verified from its contents what it should be and renamed it back to glances (or whatever it was). Second, I often got the error `Error response from daemon: No such image: kylemanna/openvpn:latest`. I solved this by running `docker pull <image-name>` to pull the image first, then running the restore again, which then worked.
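Both workarounds can be sketched in shell. The restore helper assumes docker-backup exits with a non-zero status when a restore fails, and that restoring is `./docker-backup restore <file>.tar` run from /root/docker-backup (worth confirming with `./docker-backup restore --help` on your build). For the oddly named volumes, my guess is that they were anonymous volumes, which Docker names with a long random ID; Docker has no command to rename a volume, so the last helper copies the data into a new volume with the intended name using a throwaway alpine container. All function names are hypothetical:

```shell
# Hypothetical helper: extract "repo/name:tag" from "... No such image: repo/name:tag".
missing_image_from_error() {
  printf '%s\n' "$1" | sed -n 's/.*No such image: \([^ ]*\).*/\1/p'
}

# Hypothetical helper, run from /root/docker-backup on the NEW server: try the
# restore; if it failed because an image is missing, pull that image and retry once.
restore_with_pull() {
  out=$(./docker-backup restore "$1" 2>&1) && { printf '%s\n' "$out"; return 0; }
  image=$(missing_image_from_error "$out")
  if [ -z "$image" ]; then
    printf '%s\n' "$out" >&2   # some other error: show it and give up
    return 1
  fi
  docker pull "$image" && ./docker-backup restore "$1"
}

# Hypothetical helper: "rename" volume $1 to $2 by copying its data into a new
# volume; cp -a keeps ownership and permissions.
copy_volume() {
  docker volume create "$2" &&
  docker run --rm -v "$1":/from -v "$2":/to alpine cp -a /from/. /to/
}
```

For example, `copy_volume <long-volume-id> glances` creates glances and copies everything across; the old volume can then be removed with `docker volume rm` once the container is confirmed working.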

In theory, you could now just spin up that container and it would find its persistent data in its volumes. For some containers this did not work; what fixed it was opening the service’s Stack in Portainer and forcing a Stack Update (in /Stacks/Edit). That way I also ensured the container and volumes were all properly connected. Don’t worry, it won’t overwrite any of the volume data you’ve restored.
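To double-check that a container is attached to the restored volumes rather than to new, empty ones, the mounts can also be listed from the CLI. A sketch with hypothetical helper names:

```shell
# Hypothetical helper: for each running container, print its volume names
# (or host paths for bind mounts) and where they are mounted.
show_mounts() {
  for id in $(docker ps -q); do
    docker inspect -f '{{.Name}}:{{range .Mounts}} {{if .Name}}{{.Name}}{{else}}{{.Source}}{{end}}->{{.Destination}}{{end}}' "$id"
  done
}

# Hypothetical helper: volumes that no container (running or stopped) references.
unattached_volumes() {
  docker volume ls -q -f dangling=true
}
```

A restored volume showing up in `unattached_volumes` is a hint that the Stack points at a different volume name.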

This is also why I temporarily opened ports 9000 and 81: so that I could reach Portainer and NginxPM from my web browser using just the server’s public IP (easy, and I locked them down as soon as I had finished setting up).

That, in essence, was it. I did this one container at a time, testing each one. For my blog and the others using the shared database, I did the whole batch together, restoring the database first so the others could come online and connect to it.
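The database-first ordering can be enforced by restoring in argument order and stopping at the first failure. A sketch, assuming docker-backup lives in /root/docker-backup and restores with `./docker-backup restore <file>.tar`; `restore_batch`, the `DB_DIR` override and the file names are hypothetical:

```shell
# Hypothetical helper: restore backups strictly in the order given, stopping at
# the first failure. The body runs in a subshell so the cd stays local.
restore_batch() (
  cd "${DB_DIR:-/root/docker-backup}" || exit 1
  for f in "$@"; do   # put the database backup FIRST in the argument list
    echo "Restoring $f"
    ./docker-backup restore "$f" || { echo "Stopped at $f" >&2; exit 1; }
  done
)
# Usage (placeholder files): restore_batch mariadb.tar wordpress.tar piwigo.tar
```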

My last steps, once everything was working and tested, were to close ports 9000 and 81 on the new server, shut down the old server, and ask the provider to cancel the account.
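One caveat worth checking: Docker adds its own iptables rules for published ports, and these take effect before ufw’s, so a container published with `-p 9000:9000` can stay reachable after its ufw rule is deleted. Stopping the container or removing its port mapping is the surer lock-down. A sketch of the clean-up, plus a reachability test to run from another machine; both helper names are hypothetical:

```shell
# Hypothetical helper: remove the temporary ufw rules added during set-up.
close_temp_ports() {
  ufw delete allow 9000/tcp
  ufw delete allow 81/tcp
  ufw status numbered
}

# Hypothetical helper, run from ANOTHER machine: succeeds if HOST ($1) accepts a
# TCP connection on PORT ($2) within 3 seconds, via bash's /dev/tcp and coreutils timeout.
port_reachable() {
  timeout 3 bash -c ": < /dev/tcp/$1/$2" 2>/dev/null
}
# Usage (placeholder IP): port_reachable 203.0.113.10 9000 && echo "9000 is still open"
```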

So, as I said, the WordPress blog itself was only down for around 10 minutes while I backed up and restored that batch along with the database. I did my 11GB of photos in Piwigo last, as they took the longest to copy across and restore. I did ditch my Immich photos setup, as I realised I’d complicated things by connecting it directly to the Piwigo photos volume. As a result, the Immich backup included the 11GB of photos and, worse, restored them to an odd location that was no longer linked to Piwigo. No harm done, though: it was just a test install, and all my photos were still safely in, and attached to, Piwigo.

The Docker-Backup install guide spans more than one page, so here is a summary of my steps, based on its instructions at https://github.com/muesli/docker-backup and the Go install instructions at https://go.dev/doc/install:

```shell
wget -c https://go.dev/dl/go1.22.1.linux-amd64.tar.gz
rm -rf /usr/local/go  # In case it exists already
tar -C /usr/local -xzf go1.22.1.linux-amd64.tar.gz
export PATH=$PATH:/usr/local/go/bin  # Current session only
echo 'export PATH=$PATH:/usr/local/go/bin' >> ~/.profile  # Keep it for future logins
go version  # To check it's installed
```

Installing Docker-Backup itself:

```shell
git clone https://github.com/muesli/docker-backup.git
cd docker-backup
go build
./docker-backup  # Should show help info
```

In hindsight, I might try a straight backup of the volume data using the Docker command `docker run --rm --volumes-from dbstore -v $(pwd):/backup ubuntu tar cvf /backup/backup.tar /dbdata`, and its corresponding restore on the other side.
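That alternative, adapted from the volume backup example in Docker’s documentation, could look like this. The container names and the /dbdata path are placeholders, the helper names are hypothetical, and `--strip-components=1` assumes a single-level path like /dbdata (tar records the files under a leading dbdata/ directory, which the restore strips off):

```shell
# Hypothetical helpers; run from the directory that holds (or will hold) backup.tar.
# OLD server: archive path $2 from the volumes of container $1 into ./backup.tar
backup_volume_dir() {
  docker run --rm --volumes-from "$1" -v "$(pwd)":/backup ubuntu \
    tar cvf /backup/backup.tar "$2"
}

# NEW server: unpack ./backup.tar into path $2 of container $1, which must already
# mount a volume there, e.g. created with: docker run -v /dbdata --name dbstore2 ubuntu /bin/bash
restore_volume_dir() {
  docker run --rm --volumes-from "$1" -v "$(pwd)":/backup ubuntu \
    bash -c "cd '$2' && tar xvf /backup/backup.tar --strip-components=1"
}
# Usage: backup_volume_dir dbstore /dbdata     (old server)
#        restore_volume_dir dbstore2 /dbdata   (new server, after copying backup.tar)
```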

It all went through fine, although I also realised that my Nextcloud setup was quite out of date. I’ve been running it for many years, and some of the configuration setup has changed since. As I’m the only user and the data is synced from my desktop PC anyway, I decided to wipe the container as well as all its volume data. The new install is much faster, and as soon as I reconnected the Nextcloud desktop sync, all my documents and photos were resynced to the server.

All in all, it took about a full day of research and testing bits of this to work out how I’d do it, and an afternoon the following day to do the actual migration. It was lots of fun, and now at least I know how to switch hosting providers again if I need, or want, to.

#Blog, #docker, #hosting, #migration, #technology