#docker

Argh! Hipsters and their Dockerfiles!!!!

Who is responsible for teaching the current crop of attention-deficit teenagers that command sequences in Unix shells (like bash) are separated by '&&'? Would this person please own up? I have an axe to grind...

So recently, we had Yet Another Java Fuckup, this time because everybody was surprised that Java will load and link random libraries from the internet when told to do so... yes, that log4j hilarity. We all had a good laugh.

Now, I'm having to clean up behind some half-assed attempt at mitigating for log4j, in the form of the following Dockerfile:

apt update && apt -y install -t bullseye-backports libreoffice && rm /usr/share/java/ant-apache-log4j-1.10.9.jar && rm /usr/share/maven-repo/org/apache/ant/ant-apache-log4j/1.10.9/ant-apache-log4j-1.10.9.jar

You know, the more I look, the more idiotic this is. First of all, if you don't understand shell programming, go read the fucking manual. Second of all, don't copy-paste cargo cult code from the internet, especially if you don't understand what it does. Third of all, don't use the && operator if you just want to have a sequence of commands on a single line. Fourth of all, if you insist on using the && conditional execution operator (yes, it really means "short-circuit AND depending on the return code of the previous command", i.e. "cmd1 && cmd2" is equivalent to "if cmd1; then cmd2; fi", just like it does in C and presumably the current hip scripting language everybody thinks is awesome), then please, please also read the manpage for "rm": you could have used the '-f' option, pointlessly, to at least make this && nonsense work and not fail if the package you installed no longer contains log4j jarfiles.

I mean, it is even more work to type && than ; and the latter actually does what you think it should do.
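
For anyone who would rather see the difference than read about it, here is a minimal sketch. The file name missing.jar and the scratch directory are made up for the demo; nothing in it is taken from the Dockerfile above.

```shell
#!/bin/sh
# errexit would change the picture, so make sure it is off for this demo.
set +e

# Scratch directory so the demo never touches real files.
tmp=$(mktemp -d)

# '&&' short-circuits: rm fails on the missing file, so echo never runs.
and_result=$(rm "$tmp/missing.jar" 2>/dev/null && echo "continued")

# ';' is plain sequencing: echo runs whatever rm returned.
seq_result=$(rm "$tmp/missing.jar" 2>/dev/null; echo "continued")

# 'rm -f' does not treat a missing file as an error, so '&&' carries on.
force_result=$(rm -f "$tmp/missing.jar" && echo "continued")

printf 'with &&   : [%s]\n' "$and_result"
printf 'with ;    : [%s]\n' "$seq_result"
printf 'with rm -f: [%s]\n' "$force_result"

rmdir "$tmp"
```

The first line comes out empty, the other two say "continued". One thing worth knowing either way: a line chained with ; reports only the exit status of its last command, so a failure in the middle goes unnoticed by whatever runs the line.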

You know... argh! Headdesk! Hate!!! Bile!!11!!1! Can someone PLEASE make a YouTube tutorial or Stack Overflow topic or TicToc bloop on how to use the semicolon operator in Bash? Because, obviously, today's crop of "programmers" (and I use this word in the most loose, general sense possible) cannot read any more, least of all manuals. But I'm sure they love TicToc. Their "code" looks like they do.

Please go back to playing candy crush, or whatever it is kids these days play. Don't ship "code" you don't understand. Don't "code" at all. Go away.

#rant #docker #RTFM #hipsters #attentionDeficitTeenagers #log4j #hate

Docker disk space reports

As a teaser, this is the quick summary report:

docker system df

Check out this nice article for more detailed reports

https://codeopolis.com/posts/commands-to-view-docker-disk-space-usage/

I made a minor tweak to the #Diaspora #Docker image that I publish: it now starts an instance of lighttpd on port 8080 for serving static assets. If you use my image, you can pull the latest version or v0.7.16.0 and have your reverse proxy map /assets/(.*) to port 8080 and your pod will be a bit faster and more secure.

Kicked off a new build of my #Diaspora #Docker image and discovered that s.diaspora.software is down.

Does any #podmin know who maintains that? According to the wiki it's still needed to install Diaspora.

s6-rc: an interesting init alternative

It looks like s6 has gained traction as a lightweight init for containers:

https://github.com/just-containers/s6-overlay

It also has an OpenEmbedded layer for use in embedded devices, though that layer has not been added to the OE layers list yet:

https://github.com/chris2511/meta-s6-rc

Docker Punching Holes Through Your VPS Firewall? This Is How I Solved It

Yes, Docker automatically rewrites the host's iptables rules, and the ports it publishes do not show up under UFW status as opened ports in your VPS firewall. This can leave your management containers wide open. Most Docker container tutorials only use examples of how to do basic port mapping, and this is what exposes those ports.

I explain what is happening with this, and which of the three options I found easiest to make sure only the essential ports are open.
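
As a rough illustration of the port-mapping problem (the video is the reference for the three options; this sketch is not taken from it): a plain -p 8080:80 publishes on all host interfaces, while prefixing the mapping with 127.0.0.1 keeps it on loopback, where a reverse proxy or an SSH tunnel can still reach it. The nginx image, the port numbers, the function names, and the DOCKER variable are all assumptions of this example.

```shell
#!/bin/sh
# DOCKER is a convenience of this sketch: set DOCKER=echo to print the
# command instead of running it.
DOCKER=${DOCKER:-docker}

# Published on every host interface (0.0.0.0): reachable from outside,
# even though 'ufw status' does not list the port.
run_public() {
    "$DOCKER" run -d -p 8080:80 nginx
}

# Published on loopback only: reachable from the host itself.
run_loopback_only() {
    "$DOCKER" run -d -p 127.0.0.1:8080:80 nginx
}
```

Calling run_loopback_only starts the container; with DOCKER=echo set beforehand it only prints the command it would run.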

See https://youtu.be/Kr-3WKA1_fI

#technology #selfhosting #docker #VPS #security

3 Ways to Define Docker Volumes Explained in 6 Minutes

Docker containers are great (my opinion) due to their ease of deployment and updating, as well as better resource usage for multiple concurrent services on a server. One could also argue they offer better security due to the potential ways you can isolate them from each other, and even your network (see an upcoming video of mine about this point).

A Docker container on its own, though, does not retain any of the data written inside it (persistence) if it is destroyed or updated. To achieve persistence, one creates volumes, which essentially map locations inside the container out to storage on the host OS side. Destroy or update the container, and the new one can still read that data from the volume.

This video explains quite clearly the 3 different ways to define such volumes.
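
For orientation, the three forms one usually meets on the command line are sketched below, assuming these are the same three the video covers. The nginx image, the paths /srv/appdata and /data, the volume name appdata, and the DOCKER variable are placeholders for this example.

```shell
#!/bin/sh
# DOCKER is a convenience of this sketch: set DOCKER=echo to print the
# command instead of running it.
DOCKER=${DOCKER:-docker}

# 1. Host path (bind mount): you choose the directory on the host.
run_bind_mount() {
    "$DOCKER" run -d -v /srv/appdata:/data nginx
}

# 2. Anonymous volume: only the container path is given; Docker picks
#    the host location and generates a name.
run_anonymous() {
    "$DOCKER" run -d -v /data nginx
}

# 3. Named volume: Docker manages the location, you refer to it by name.
run_named() {
    "$DOCKER" run -d -v appdata:/data nginx
}
```

Named volumes are the easiest of the three to find again later, since the name shows up in docker volume ls.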

Watch https://youtu.be/p2PH_YPCsis

#technology #docker #container #volume #storage

How to easily manage Docker Containers using Portainer graphical user interface on Ubuntu

Portainer is an open-source management UI for Docker, including Docker Swarm environments. It makes it easier to manage your Docker containers by letting you handle containers, images, networks, and volumes from the web-based Portainer dashboard.

I started out with docker containers using Portainer, and I still use Portainer today. In the beginning, I used the quick-click install of existing App Templates to quickly get many popular types of web service up and running. It was this ease of use that helped me migrate away from cPanel hosting. Today I tend to use App Templates, where I have taken existing scripts and tweaked them for my use to store data on specific external volumes, specify ports to be used, etc.

If you want to get into using Docker Containers to host services, I’d suggest looking at a few videos about using Portainer to get the feel of it. Of course, everything in Portainer can be done from the command line too (and often quicker), but a graphical interface that does basically everything needed is a lot more friendly for most average users.

See https://www.howtoforge.com/tutorial/ubuntu-docker-portainer/

#technology #docker #containers #portainer #selfhosting

Decided to spin up a local k3s cluster running on my (ARM64) laptop. Another interesting bit about the Docker environment is how easy it is to migrate configurations across platforms.

I'll add that spinning up a cluster in k3s is just running a single command per node: one for the server (master) node and one for each of the agent (worker) nodes. It's trivial to automate and completes in seconds.
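
A sketch of what those per-node commands look like, assuming the stock install script from get.k3s.io (k3s calls the control-plane node a "server" and the workers "agents"). The function names and the example address are made up here; the functions are only defined, not run.

```shell
#!/bin/sh
# First node: the k3s "server", i.e. the control plane.
install_server() {
    curl -sfL https://get.k3s.io | sh -
}

# Every further node: a k3s "agent", i.e. a worker.
#   $1 = address of the server
#   $2 = join token, read from /var/lib/rancher/k3s/server/node-token
#        on the server
install_agent() {
    curl -sfL https://get.k3s.io | K3S_URL="https://$1:6443" K3S_TOKEN="$2" sh -
}
```

On a worker this would be called as, for example, install_agent 192.168.1.10 "$TOKEN".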

Now I'm messing around with #ceph for managing high-availability #storage (filesystem and #s3) and #stolon for high-availability #postgres.

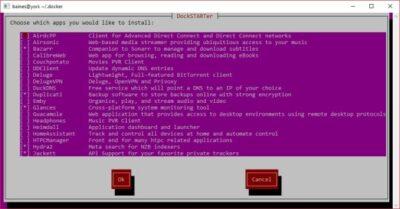

DockSTARTer is a way to make it quick and easy to get up and running with Docker

You may choose to rely on DockSTARTer for various changes to your Docker system, or use DockSTARTer as a stepping stone and learn to do more advanced configurations.

It is a bit like having a cPanel script installer for your self-hosting at home to quickly install services to host, but using Docker underneath. I’d suggest reading through the website a bit first, though, and I see there are at least two YouTube videos on using DockSTARTer as well.

#technology #containers #docker #dockstarter #selfhosting

While it’s truly amazing that #Docker allows me to build images for multiple processor architectures, it’s a pain in the butt when you forget that your desktop machine is now ARM while your servers all run AMD64, and that you have to remember this every time you build.

I guess I need to write another script.
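
Such a script could be as small as the sketch below, which pins the target platform so the build no longer depends on remembering it. It assumes docker buildx is available; the function name, the PLATFORM and DOCKER variables, and the image tag in the usage line are hypothetical.

```shell
#!/bin/sh
# DOCKER is a convenience of this sketch: set DOCKER=echo to print the
# command instead of running it.
DOCKER=${DOCKER:-docker}

# Architecture of the servers, not of the machine doing the build.
PLATFORM=${PLATFORM:-linux/amd64}

# Build the Dockerfile in the current directory for $PLATFORM.
#   $1 = image tag
build_for_servers() {
    "$DOCKER" buildx build --platform "$PLATFORM" -t "$1" .
}
```

Usage would be along the lines of build_for_servers myimage:latest, run from the directory that holds the Dockerfile.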

● NEWS ● #Geshan ☞ How to use #MySQL with #Docker and Docker compose a beginners guide https://geshan.com.np/blog/2022/02/mysql-docker-compose/

3 steps to start running containers today – Learn how to run two containers in a pod to host a WordPress site

I know I’ve posted recently about containers, but I still regret not starting to use them earlier. It was because my hosting was ‘working’ and I did not see the need. But once I started getting more issues with upgrading some applications, I realised the benefits of containers.

Pre-made containers get distributed with just what’s necessary to run the application they contain. With a container engine, like Podman, Docker, or CRI-O, you can run a containerized application without installing it in any traditional sense. Container engines are often cross-platform, so even though containers run Linux, you can launch containers on Linux, macOS, or Windows.

One thing I had to get to grips with is that the update procedure depends on the image you use to create your container: some just require pulling a new image to update the application, while others require the upgrade to be run as normal from within the running application. You also want to be sure to do regular backups of any external volumes, as these contain user data that is not recreated by the container.
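
One common way to back up a named volume is to mount it read-only into a throwaway container and tar it to a host directory. This is a sketch under assumptions, not something from the linked article: the alpine image, the function name, the volume name, the backup directory, and the DOCKER variable are all placeholders.

```shell
#!/bin/sh
# DOCKER is a convenience of this sketch: set DOCKER=echo to print the
# command instead of running it.
DOCKER=${DOCKER:-docker}

# Archive the contents of a named volume into a tarball on the host.
#   $1 = volume name
#   $2 = absolute path of the host directory that receives the archive
backup_volume() {
    "$DOCKER" run --rm \
        -v "$1":/data:ro \
        -v "$2":/backup \
        alpine tar czf "/backup/$1.tar.gz" -C /data .
}
```

For example, backup_volume appdata /srv/backups would leave /srv/backups/appdata.tar.gz behind; run from cron, that covers the "regular" part.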

See https://opensource.com/article/22/2/start-running-containers

#technology #containers #docker #podman #hosting
