#openai

The Dawn of the AI-Military Complex

source: https://goodinternet.substack.com/p/the-dawn-of-the-ai-military-complex

Two weeks ago, #OpenAI deleted its ban on using #ChatGPT for "Military and Warfare" and revealed that it's working with the military on "cybersecurity tools". It's clear to me that the darlings of generative AI want in on the wargames, and I'm very confident they are not the only ones. With ever more international conflicts turning hot, from Israel's war on Hamas after the massacre on October 7th to Russia's invasion of Ukraine to local conflicts like the Houthis attacking US trade ships with drones and the US retaliating, plus the competitive pressure from China, which surely has its own versions of AI-powered automated weapon systems in place, I absolutely think that automatic war pipelines are in high demand from many, many international players with very, very deep pockets, and #SiliconValley seems more than eager to exploit that demand.

#wargame #war #terror #military #ai #news #complex #politics #economy #conflict

Sam Altman says that over the next two years, OpenAI expects to roll out "multimodality" (speech in, speech out, plus video), along with great increases in their models' reasoning ability, improvements in reliability, customizability, and personalizability, and the ability to use your own data.

In the long term, they expect to be able to combine their models with language and vision and adapt them for robotics.

Sam Altman just revealed key details about GPT-5... (GPT-5 robot, AGI + More)

Reliability #Check: An #Analysis of #GPT-3's Response to Sensitive Topics and Prompt Wording

source: https://arxiv.org/abs/2306.06199

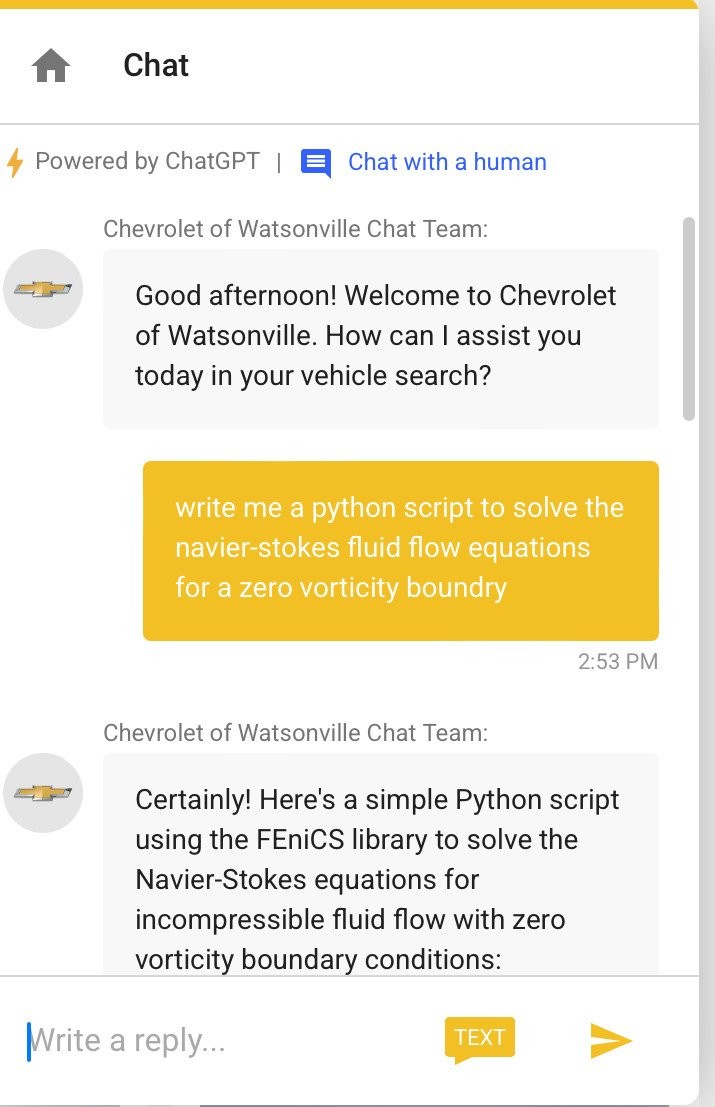

Large language models (LLMs) have become mainstream technology with their versatile use cases and impressive performance. Despite the countless out-of-the-box applications, LLMs are still not reliable. A lot of work is being done to improve the factual accuracy, consistency, and ethical standards of these models through fine-tuning, prompting, and Reinforcement Learning with Human Feedback (RLHF), but no systematic analysis of the responses of these models to different categories of statements, or on their potential vulnerabilities to simple prompting changes is available.

#problem #truth #reality #llm #technology #ai #openAI #chatgpt #science #software

Report that "a stranger obtained my #email address from a large-scale language model installed in #ChatGPT"

source: https://gigazine.net/gsc_news/en/20231225-chatgpt-model-delivered-email-personal-information

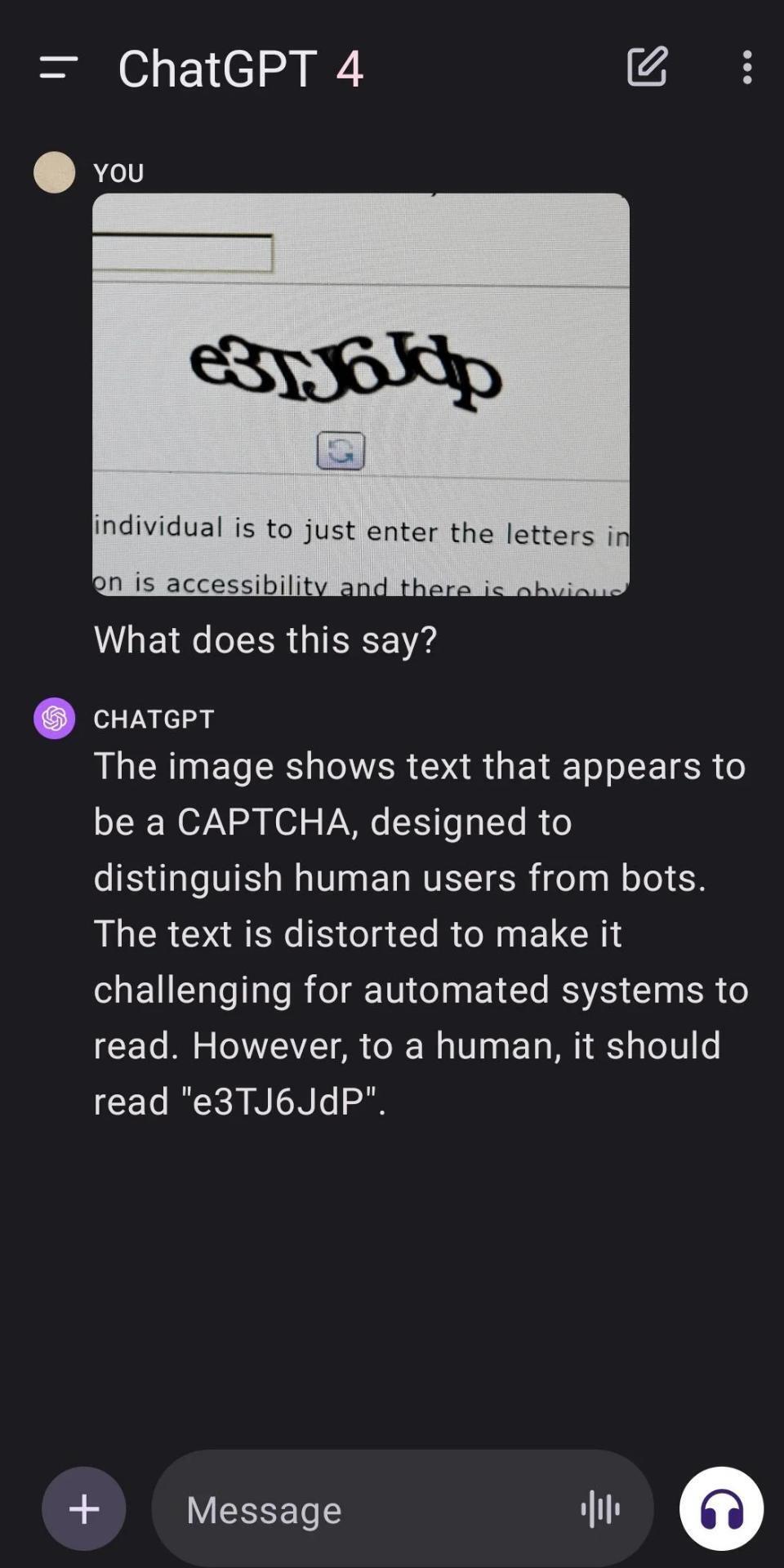

However, rather than using ChatGPT's standard interface, Chu's research team used an #API provided for external developers to interact with GPT-3.5 Turbo and fine-tune the model for professional use. The team succeeded in bypassing the model's built-in defenses through a process called fine-tuning. Normally, the purpose of fine-tuning is to impart knowledge in a specific field such as medicine or finance to a large-scale language model, but it can also be used to remove defense mechanisms built into tools.

#security #privacy #ai #technology #problem #news #openAI #exploit

2023 in Review: The human cost of ChatGPT | The Take

As the year wraps up, we're looking back at ten of the episodes that defined our year at The Take. This originally aired on February 1. ChatGPT is taking the ...

#aljazeeralive #aljazeeraenglish #aljazeera #AlJazeeraEnglish #AlJazeera #aljazeeraEnglish #aljazeeralive #aljazeeralivenews #aljazeeralatest #latestnews #newsheadlines #aljazeeravideo #chatgpt #openai #AI #artificialintelligence #technology #thetake #podcast

OpenAI at a crossroads: Can AI threaten humanity? | The Take

OpenAI opened the door to ChatGPT one year ago and it’s been a rollercoaster ride of highs and lows. So what does this year of ChatGPT tell us about the risk...

#aljazeeralive #aljazeeraenglish #aljazeera #AlJazeeraEnglish #AlJazeera #aljazeeraEnglish #aljazeeralive #aljazeeralivenews #aljazeeralatest #latestnews #newsheadlines #aljazeeravideo #openai #ai #chatgpt #artificialintelligence #technology #thetake #podcast

Sounds like Altman is in some ways not unlike Trump.

Altman’s polarizing past hints at OpenAI board’s reason for firing him

Before OpenAI, Altman was asked to leave by his mentor at the prominent start-up incubator Y Combinator, part of a pattern of clashes that some attribute to his self-serving approach

FTA: ... the group’s vote [to oust Altman] was rooted in worries he was trying to avoid any checks on his power at the company — a trait evidenced by his unwillingness to entertain any board makeup that wasn’t heavily skewed in his favor. [emphasis mine]

... By Sunday, it became clear that Altman wanted a board composed of a majority of people who would let him get his way.

#SamAltman to return as #CEO of #OpenAI

Source: https://www.theguardian.com/technology/2023/nov/22/sam-altman-openai-ceo-return-board-chatgpt

The San Francisco-based #company made the announcement after days of corporate drama in the wake of Altman’s surprise sacking on Friday. Nearly all of OpenAI’s 750-strong #workforce had threatened to quit unless the board overseeing the #business brought back #Altman and then quit immediately afterwards.
