FrontierMath is a new benchmark of original, exceptionally challenging mathematics problems -- and all the problems are new and previously unpublished, so they can't already be in the training sets of large language models (LLMs).

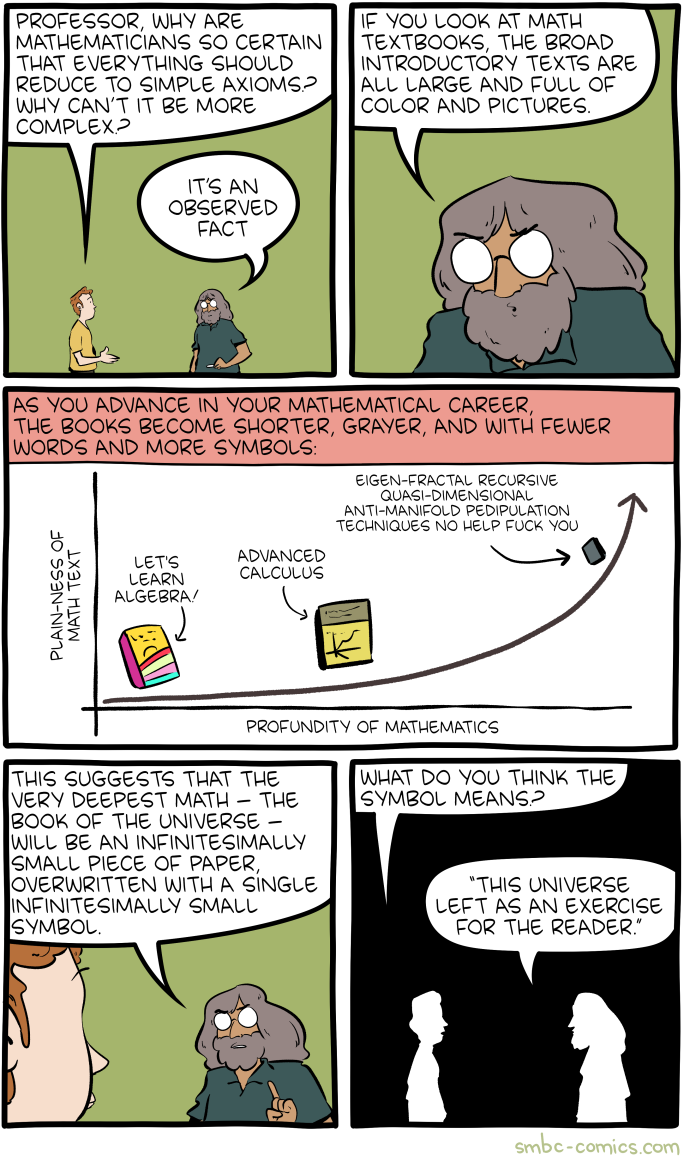

We don't have a good measurement of super advanced mathematics capabilities in AI models. The researchers note that current mathematics benchmarks for AI systems, like the MATH dataset and GSM8K, measure ability at the high-school and early undergraduate levels. The researchers are motivated by a desire to measure deep theoretical understanding, creative insight, and specialized expertise.

There's also the problem of "data contamination" -- "the inadvertent inclusion of benchmark problems in training data." This causes artificially inflated performance scores for LLMs, which masks the models' true reasoning (or lack of reasoning) capabilities.

"The benchmark spans the full spectrum of modern mathematics, from challenging competition-style problems to problems drawn directly from contemporary research, covering most branches of mathematics in the 2020 Mathematics Subject Classification."

I had a look at the 2020 Mathematics Subject Classification. It's a 224-page document that is just a big list of subject areas with number-and-letter codes assigned to them. For example, "11N45" means "Asymptotic results on counting functions for algebraic and topological structures".

"Current state-of-the-art AI models are unable to solve more than 2% of the problems in FrontierMath, even with multiple attempts, highlighting a significant gap between human and AI capabilities in advanced mathematics."

"To understand expert perspectives on FrontierMath's difficulty and relevance, we interviewed several prominent mathematicians, including Fields Medalists Terence Tao, Timothy Gowers, and Richard Borcherds, and International Mathematical Olympiad coach Evan Chen. They unanimously characterized the problems as exceptionally challenging, requiring deep domain expertise and significant time investment to solve."

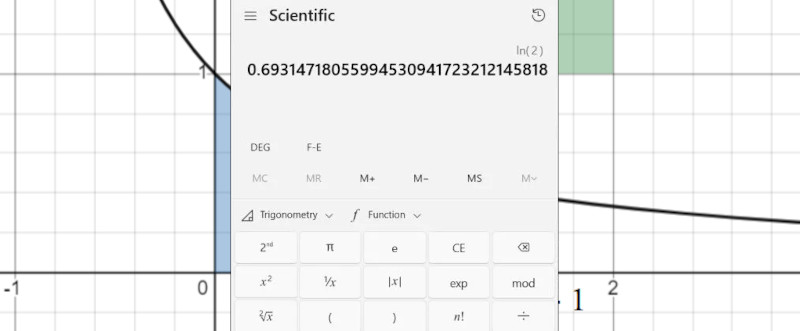

Unlike many International Mathematical Olympiad problems, the FrontierMath problems have a single numerical answer, which makes them possible to check in an automated manner -- no human hand-grading required. At the same time, the researchers have worked to make the problems "guess-proof".

"Problems often have numerical answers that are large and nonobvious." "As a rule of thumb, we require that there should not be a greater than 1% chance of guessing the correct answer without doing most of the work that one would need to do to 'correctly' find the solution."
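To make those two properties concrete, here is a minimal Python sketch. It is not the benchmark's actual grader: the function names `check_answer` and `guess_success_probability` are hypothetical, and the guessing model (every candidate answer equally likely, every guess distinct) is a simplifying assumption of mine, not something the researchers state.

```python
from fractions import Fraction


def check_answer(submitted: str, reference: str) -> bool:
    """Hypothetical automated check: exact equality, no partial credit.

    Answers are parsed as exact rationals, so arbitrarily large integers
    compare without any floating-point rounding.
    """
    try:
        return Fraction(submitted.strip()) == Fraction(reference.strip())
    except (ValueError, ZeroDivisionError):
        # Unparseable submissions (or something like "1/0") count as wrong.
        return False


def guess_success_probability(num_candidates: int, attempts: int = 1) -> float:
    """Chance of hitting the answer by blind guessing.

    Assumes (my assumption) that the answer is equally likely to be any of
    `num_candidates` plausible values and that each guess is distinct.
    """
    if num_candidates <= 0:
        raise ValueError("num_candidates must be positive")
    return min(attempts, num_candidates) / num_candidates


if __name__ == "__main__":
    print(check_answer("12345678901234567890", "12345678901234567890"))
    print(guess_success_probability(2))        # a yes/no question
    print(guess_success_probability(10**6))    # a large, nonobvious integer
```

Under that simple model, a yes/no question can be guessed half the time, while an answer that could plausibly be any of a million integers falls far below the 1% rule of thumb, which is why large, nonobvious answers help.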

The numerical calculations don't need to be done by the language model itself -- the models have access to Python to perform mathematical calculations.

FrontierMath: A benchmark for evaluating advanced mathematical reasoning in AI

#solidstatelife #ai #genai #llms #mathematics