#Punarvasu (புனர்பூசம்): Principles

Placement: 20° 00’ of Gemini to 03° 20’ of Cancer
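
The placement above can be checked with a little arithmetic. The following is a minimal sketch, assuming the usual division of the zodiac into 27 equal nakshatras of 13° 20’ each, counted from 0° Aries, with Punarvasu as the 7th in that sequence. Neither assumption is stated in this note, and the names `nakshatra_span` and `to_sign_position` are made up for illustration.

```python
SIGNS = ["Aries", "Taurus", "Gemini", "Cancer", "Leo", "Virgo",
         "Libra", "Scorpio", "Sagittarius", "Capricorn", "Aquarius", "Pisces"]

NAKSHATRA_ARCMIN = 800  # assumption: 13 deg 20 min per nakshatra (360 deg / 27)
SIGN_ARCMIN = 1800      # 30 deg per sign


def to_sign_position(arcmin):
    """Convert a zodiacal longitude in arcminutes to (sign, degrees, minutes)."""
    sign_index, within_sign = divmod(arcmin % (360 * 60), SIGN_ARCMIN)
    degrees, minutes = divmod(within_sign, 60)
    return SIGNS[sign_index], degrees, minutes


def nakshatra_span(number):
    """Return (start, end) positions of the nakshatra with this 1-based number."""
    start = (number - 1) * NAKSHATRA_ARCMIN
    end = start + NAKSHATRA_ARCMIN
    return to_sign_position(start), to_sign_position(end)


# Assumption: Punarvasu is nakshatra number 7, counting Ashwini as 1.
start, end = nakshatra_span(7)
print(start, end)
```

Working in whole arcminutes keeps the arithmetic exact and avoids floating-point rounding at the sign boundary.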

Element: #Water (Neer)

Pushkara Navamsha/Bhaga: 2nd and 4th Pada*/NA

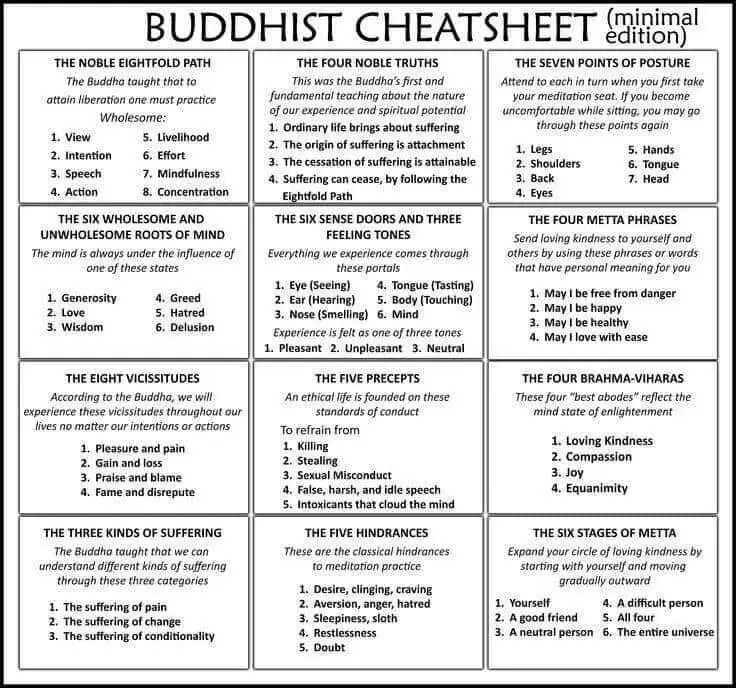

Like Ashwini #nakshatra, Punarvasu has two aspects: one sattvic and the other tamasic. It shows the balance of life by setting sattva against tamas. Punarvasu represents the cyclical nature of #time, in which good times follow bad and bad times follow good. This teaches us the #philosophy of life. Punarvasu is paired with the next nakshatra, Pushya.

Ruler: #Jupiter, the planet of principles, dharma, and wisdom, governs this nakshatra. Jupiter also governs Sagittarius, the natural ninth house of Dharma.

Symbol: The symbol of Punarvasu is a "quiver of arrows", which signifies the importance of being mentally and physically prepared for any upcoming situation. It also represents the concept of time, which constantly moves forward without stopping. The nakshatra's other symbol is a "house", which signifies the ability to remain steady and #unchanging amidst changing circumstances.

Deity: The deity associated with Punarvasu nakshatra is #Goddess #Aditi, who is known as the mother of the 12 Adityas, which are solar deities including Surya (the sun god). Aditi symbolizes the nurturing and nourishing motherly #nature, which is associated with this nakshatra.

The connection between Punarvasu and Sagittarius is apparent through these characteristics: both are governed by Jupiter, and the quiver of arrows recalls the archer of Sagittarius.

Animal: #Female #cat.

Guna: This nakshatra is characterized as Rajasic, action-oriented, with a focus on stability and sustainability.

Power: Punarvasu represents the power of retrieval and restoration, known as "Vasutva Prapana Shakti".

Story of Lord #Ram: Lord Ram, an incarnation of Lord Vishnu, was born under the Punarvasu nakshatra. He was a virtuous prince who was supposed to become the king, but due to unforeseen events, he was banished from his kingdom just a day before his coronation. Despite this setback, he never lost hope and stayed true to his values and beliefs. He faced numerous challenges during his exile, but he persevered and eventually returned to his homeland after 14 years to claim his rightful place as the king. This story illustrates the significance of time, morality, duty, principles, hope, and resilience, which are all associated with this nakshatra. Additionally, the number 14 holds a special significance in this story.

General attributes: Individuals born under Punarvasu nakshatra are likely to hold firm beliefs and principles, whether those beliefs are good or bad, and may dedicate their entire lives to upholding them. As they age, they may become a source of inspiration and hope for others. They may appear to simply go with the flow, but they are adaptable and have a deep understanding of the nature of events. They are calm, composed, and patient, waiting for the right moment to act. They may frequently discuss topics related to time, karma, and the cyclical nature of things. These are general qualities, and reader's discretion is advised. The fourth pada of Punarvasu is considered doubly strong and auspicious because it is a vargottama pada: it falls in Cancer in both the rashi and the navamsha.
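
The vargottama status of the fourth pada can be verified with a short calculation. This is a rough sketch, assuming the common convention that the 108 padas of 3° 20’ each are counted from 0° Aries, and that navamsha signs run in zodiac order from Aries and repeat every 12 padas. It also assumes Punarvasu is the 7th nakshatra. The function names are hypothetical.

```python
SIGNS = ["Aries", "Taurus", "Gemini", "Cancer", "Leo", "Virgo",
         "Libra", "Scorpio", "Sagittarius", "Capricorn", "Aquarius", "Pisces"]

PADA_ARCMIN = 200   # assumption: 3 deg 20 min per pada (4 padas per nakshatra)
SIGN_ARCMIN = 1800  # 30 deg per sign


def pada_signs(nakshatra_number, pada):
    """Return (rashi sign, navamsha sign) for a 1-based nakshatra and pada."""
    pada_index = (nakshatra_number - 1) * 4 + (pada - 1)  # 0..107 from Aries
    rashi = SIGNS[(pada_index * PADA_ARCMIN) // SIGN_ARCMIN]
    navamsha = SIGNS[pada_index % 12]  # navamsha cycle repeats every 12 padas
    return rashi, navamsha


def is_vargottama(nakshatra_number, pada):
    """A pada is vargottama when its rashi and navamsha signs coincide."""
    rashi, navamsha = pada_signs(nakshatra_number, pada)
    return rashi == navamsha


# Assumption: Punarvasu is nakshatra number 7, counting Ashwini as 1.
print(pada_signs(7, 4), is_vargottama(7, 4))
```

Under these assumptions the fourth pada lands in Cancer in both divisions, which matches the description above.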

https://www.milkywayastrology.com/post/punarvasu