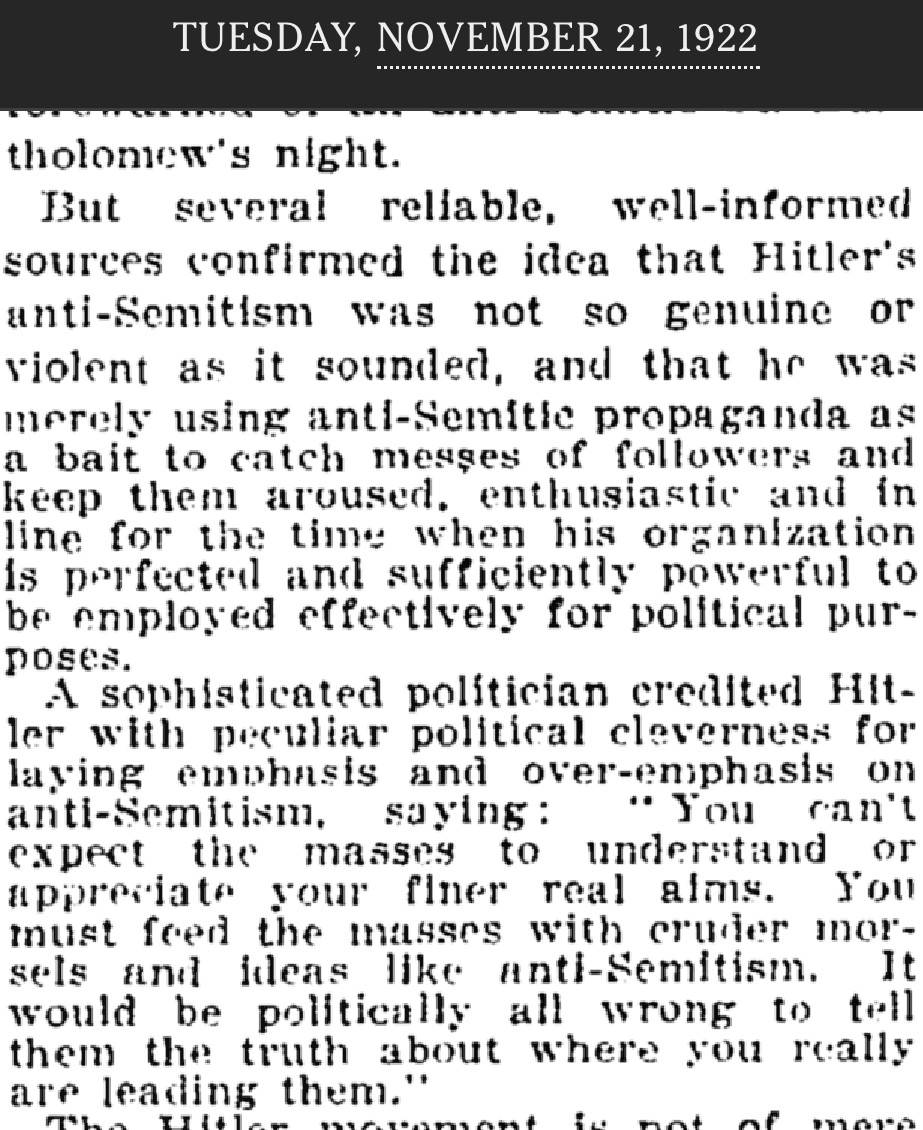

The Palestinian health authorities claimed that Israel was responsible for the death of some 500 civilians. Because the details were extremely murky, it was impossible to tell who had caused the explosion or how many people had died. And yet some of the most reputable names in news media sent push alerts that broadcast Hamas’s claims far and wide.

But both push alerts would have led reasonable readers to conclude that these statements must basically be true. Both talked about “Israeli” air strikes. Both uncritically reported that many hundreds had died.

News of the supposed Israeli strike quickly had huge real-world consequences. The king of Jordan canceled a planned meeting with President Joe Biden. Mass protests broke out in cities across the Middle East, some culminating in attacks on foreign embassies. In Berlin, two unidentified assailants threw Molotov cocktails at a synagogue.

A live video transmission from Al Jazeera appeared to show that a projectile rose from inside Gaza before changing course and exploding in the vicinity of the hospital; the Israel Defense Forces have claimed that this was one of several rockets fired from Palestinian territory. Subsequent analysis by the Associated Press has substantially corroborated this. In addition, pictures of the site taken by Reuters showed a small crater that, according to independent analysts using open-source intelligence, is inconsistent with the effect of munitions typically used by Israel. It came to look doubtful that the missile had directly hit the hospital; as a BBC team investigating the blast reported, “Images of the ground after the blast do not show significant damage to surrounding hospital buildings.”

The cause of the tragedy, it appears, is the opposite of what news outlets around the world first reported.

Such a glaring example of major outlets getting a highly consequential story wrong helps explain why trust in traditional news media has been falling fast. As recently as 2003, eight out of 10 British respondents said that they “trust BBC journalists to tell the truth.” By 2020, the share of respondents who said that they trust the BBC had fallen below one in two. Americans have been mistrustful of media for longer, but here, too, the share of respondents who say that they trust mass media to report “the news fully, accurately, and fairly” has fallen to a near-record low.